- Open access

- Published: 22 July 2024

Neural general circulation models for weather and climate

- Dmitrii Kochkov ORCID: orcid.org/0000-0003-3846-4911 1 na1 ,

- Janni Yuval ORCID: orcid.org/0000-0001-7519-0118 1 na1 ,

- Ian Langmore 1 na1 ,

- Peter Norgaard 1 na1 ,

- Jamie Smith 1 na1 ,

- Griffin Mooers 1 ,

- Milan Klöwer 2 ,

- James Lottes 1 ,

- Stephan Rasp 1 ,

- Peter Düben ORCID: orcid.org/0000-0002-4610-3326 3 ,

- Sam Hatfield 3 ,

- Peter Battaglia 4 ,

- Alvaro Sanchez-Gonzalez 4 ,

- Matthew Willson ORCID: orcid.org/0000-0002-8730-1927 4 ,

- Michael P. Brenner 1 , 5 &

- Stephan Hoyer ORCID: orcid.org/0000-0002-5207-0380 1 na1

Nature volume 632, pages 1060–1066 (2024)

- Atmospheric dynamics

- Climate and Earth system modelling

- Computational science

General circulation models (GCMs) are the foundation of weather and climate prediction 1 , 2 . GCMs are physics-based simulators that combine a numerical solver for large-scale dynamics with tuned representations for small-scale processes such as cloud formation. Recently, machine-learning models trained on reanalysis data have achieved comparable or better skill than GCMs for deterministic weather forecasting 3 , 4 . However, these models have not demonstrated improved ensemble forecasts, or shown sufficient stability for long-term weather and climate simulations. Here we present a GCM that combines a differentiable solver for atmospheric dynamics with machine-learning components and show that it can generate forecasts of deterministic weather, ensemble weather and climate on par with the best machine-learning and physics-based methods. NeuralGCM is competitive with machine-learning models for one- to ten-day forecasts, and with the European Centre for Medium-Range Weather Forecasts ensemble prediction for one- to fifteen-day forecasts. With prescribed sea surface temperature, NeuralGCM can accurately track climate metrics for multiple decades, and climate forecasts with 140-kilometre resolution show emergent phenomena such as realistic frequency and trajectories of tropical cyclones. For both weather and climate, our approach offers orders of magnitude computational savings over conventional GCMs, although our model does not extrapolate to substantially different future climates. Our results show that end-to-end deep learning is compatible with tasks performed by conventional GCMs and can enhance the large-scale physical simulations that are essential for understanding and predicting the Earth system.

Solving the equations for Earth’s atmosphere with general circulation models (GCMs) is the basis of weather and climate prediction 1 , 2 . Over the past 70 years, GCMs have been steadily improved with better numerical methods and more detailed physical models, while exploiting faster computers to run at higher resolution. Inside GCMs, the unresolved physical processes such as clouds, radiation and precipitation are represented by semi-empirical parameterizations. Tuning GCMs to match historical data remains a manual process 5 , and GCMs retain many persistent errors and biases 6 , 7 , 8 . The difficulty of reducing uncertainty in long-term climate projections 9 and estimating distributions of extreme weather events 10 presents major challenges for climate mitigation and adaptation 11 .

Recent advances in machine learning have presented an alternative for weather forecasting 3 , 4 , 12 , 13 . These models rely solely on machine-learning techniques, using roughly 40 years of historical data from the European Centre for Medium-Range Weather Forecasts (ECMWF) reanalysis v5 (ERA5) 14 for model training and forecast initialization. Machine-learning methods have been remarkably successful, demonstrating state-of-the-art deterministic forecasts for 1- to 10-day weather prediction at a fraction of the computational cost of traditional models 3 , 4 . Machine-learning atmospheric models also require considerably less code; for example, GraphCast 3 has 5,417 lines versus 376,578 lines for the National Oceanic and Atmospheric Administration’s FV3 atmospheric model 15 (see Supplementary Information section A for details).

Nevertheless, machine-learning approaches have noteworthy limitations compared with GCMs. Existing machine-learning models have focused on deterministic prediction, and surpass deterministic numerical weather prediction in terms of the aggregate metrics for which they are trained 3 , 4 . However, they do not produce calibrated uncertainty estimates 4 , which are essential for useful weather forecasts 1 . Deterministic machine-learning models using a mean-squared-error loss are rewarded for averaging over uncertainty, producing unrealistically blurry predictions when optimized for multi-day forecasts 3 , 13 . Unlike physical models, machine-learning models misrepresent derived (diagnostic) variables such as geostrophic wind 16 . Furthermore, although there has been some success in using machine-learning approaches on longer timescales 17 , 18 , these models have not demonstrated the ability to outperform existing GCMs.

Hybrid models that combine GCMs with machine learning are appealing because they build on the interpretability, extensibility and successful track record of traditional atmospheric models 19 , 20 . In the hybrid model approach, a machine-learning component replaces or corrects the traditional physical parameterizations of a GCM. Until now, the machine-learning component in such models has been trained ‘offline’, by learning parameterizations independently of their interaction with dynamics. These components are then inserted into an existing GCM. The lack of coupling between machine-learning components and the governing equations during training potentially causes serious problems, such as instability and climate drift 21 . So far, hybrid models have mostly been limited to idealized scenarios such as aquaplanets 22 , 23 . Under realistic conditions, machine-learning corrections have reduced some biases of very coarse GCMs 24 , 25 , 26 , but performance remains considerably worse than state-of-the-art models.

Here we present NeuralGCM, a fully differentiable hybrid GCM of Earth’s atmosphere. NeuralGCM is trained on forecasting up to 5-day weather trajectories sampled from ERA5. Differentiability enables end-to-end ‘online training’ 27 , with machine-learning components optimized in the context of interactions with the governing equations for large-scale dynamics, which we find enables accurate and stable forecasts. NeuralGCM produces physically consistent forecasts with accuracy comparable to best-in-class models across a range of timescales, from 1- to 15-day weather to decadal climate prediction.

Neural GCMs

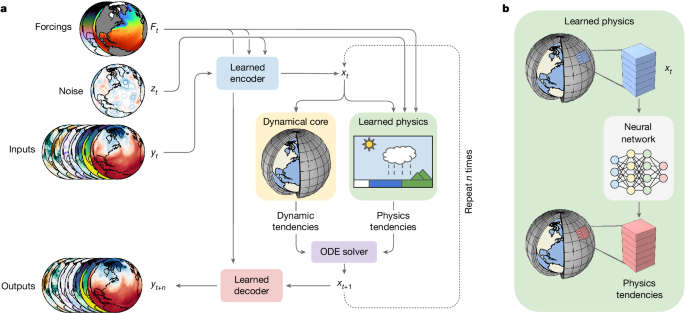

A schematic of NeuralGCM is shown in Fig. 1 . The two key components of NeuralGCM are a differentiable dynamical core for solving the discretized governing dynamical equations and a learned physics module that parameterizes physical processes with a neural network, described in full detail in Methods , Supplementary Information sections B and C , and Supplementary Table 1 . The dynamical core simulates large-scale fluid motion and thermodynamics under the influence of gravity and the Coriolis force. The learned physics module (Supplementary Fig. 1 ) predicts the effect of unresolved processes, such as cloud formation, radiative transport, precipitation and subgrid-scale dynamics, on the simulated fields using a neural network.

a , Overall model structure, showing how forcings $F_t$, noise $z_t$ (for stochastic models) and inputs $y_t$ are encoded into the model state $x_t$. The model state is fed into the dynamical core, and alongside forcings and noise into the learned physics module. This produces tendencies (rates of change) used by an implicit–explicit ordinary differential equation (ODE) solver to advance the state in time. The new model state $x_{t+1}$ can then be fed back into another time step, or decoded into model predictions. b , The learned physics module, which feeds data for individual columns of the atmosphere into a neural network used to produce physics tendencies in that vertical column.
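The caption states only that tendencies are advanced by an implicit–explicit (IMEX) ODE solver; it does not give the scheme. The sketch below shows the IMEX idea with the simplest first-order variant on a hypothetical scalar toy problem $du/dt = L u + N(u)$. It treats the stiff linear term implicitly and the nonlinear term explicitly. This is an illustration of the technique, not NeuralGCM's actual integrator, and the names are hypothetical.

```python
from typing import Callable, List


def imex_euler_step(u: float, dt: float, linear_coeff: float,
                    nonlinear: Callable[[float], float]) -> float:
    """One first-order IMEX Euler step for du/dt = L*u + N(u)."""
    # Explicit part: advance with the nonlinear tendency evaluated at the old state
    explicit = u + dt * nonlinear(u)
    # Implicit part: solve (1 - dt*L) * u_next = explicit for the stiff linear term
    return explicit / (1.0 - dt * linear_coeff)


def rollout(u0: float, dt: float, n_steps: int, linear_coeff: float,
            nonlinear: Callable[[float], float]) -> List[float]:
    """Repeatedly apply the IMEX step, returning every state including u0."""
    states = [u0]
    for _ in range(n_steps):
        states.append(imex_euler_step(states[-1], dt, linear_coeff, nonlinear))
    return states
```

With a stiff coefficient such as `L = -1000` and `dt = 0.1`, an explicit Euler step would multiply the state by -99 each step and blow up, whereas the implicit treatment divides by 101 and decays stably.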

The differentiable dynamical core in NeuralGCM allows an end-to-end training approach, whereby we advance the model multiple time steps before employing stochastic gradient descent to minimize discrepancies between model predictions and reanalysis (Supplementary Information section G.2 ). We gradually increase the rollout length from 6 hours to 5 days (Supplementary Information section G and Supplementary Table 5 ), which we found to be critical because our models are not accurate for multi-day prediction or stable for long rollouts early in training (Supplementary Information section H.6.2 and Supplementary Fig. 23 ). The extended back-propagation through hundreds of simulation steps enables our neural networks to take into account interactions between the learned physics and the dynamical core. We train deterministic and stochastic NeuralGCM models, each of which uses a distinct training protocol, described in full detail in Methods and Supplementary Table 4 .
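The core idea of online training is that the loss is computed on an unrolled trajectory, so its gradient accounts for how learned terms interact with the solver over many steps. The toy below sketches that idea under loudly stated assumptions. The "true" system is a hypothetical scalar ODE $dx/dt = -kx + c$ with an unresolved forcing $c$. The model replaces $c$ with a learned parameter `theta`. The gradient of the multi-step loss is propagated exactly through every solver step with a hand-derived forward-mode sensitivity. In JAX one would instead apply reverse-mode autodiff (`jax.grad`) to the rollout. The curriculum of rollout lengths `(1, 4, 16, 64)` steps is a hypothetical stand-in for the paper's 6-hour to 5-day schedule.

```python
"""Toy 'online training': differentiate a loss through an unrolled solver."""


def simulate(x0, k, forcing, dt, n_steps):
    """Forward-Euler rollout of dx/dt = -k*x + forcing; returns all states."""
    xs = [x0]
    for _ in range(n_steps):
        x = xs[-1]
        xs.append(x + dt * (-k * x + forcing))
    return xs


def rollout_loss_and_grad(theta, x0, k, dt, targets):
    """MSE over an unrolled trajectory and its exact derivative w.r.t. theta."""
    x, s = x0, 0.0  # state and its sensitivity dx/dtheta
    loss, grad = 0.0, 0.0
    for y in targets:
        # Step the state and, alongside it, the tangent of the same update rule
        x, s = x + dt * (-k * x + theta), s + dt * (-k * s + 1.0)
        loss += (x - y) ** 2
        grad += 2.0 * (x - y) * s
    n = len(targets)
    return loss / n, grad / n


def train(x0=1.0, k=0.3, true_forcing=0.5, dt=0.1,
          schedule=(1, 4, 16, 64), steps_per_stage=200, lr=0.1):
    """Gradient descent with a curriculum of increasing rollout lengths."""
    reference = simulate(x0, k, true_forcing, dt, max(schedule))[1:]
    theta = 0.0  # learned stand-in for the unresolved forcing
    for rollout_len in schedule:
        targets = reference[:rollout_len]
        for _ in range(steps_per_stage):
            _, g = rollout_loss_and_grad(theta, x0, k, dt, targets)
            theta -= lr * g
    return theta
```

Longer rollouts make the loss more sensitive to `theta` because the sensitivity `s` grows with each step. Starting short and lengthening the rollout keeps the early optimization well conditioned, which loosely mirrors the motivation given in the text.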

We train a range of NeuralGCM models at horizontal resolutions with grid spacing of 2.8°, 1.4° and 0.7° (Supplementary Fig. 7 ). We evaluate the performance of NeuralGCM at a range of timescales appropriate for weather forecasting and climate simulation. For weather, we compare against the best-in-class conventional physics-based weather models, ECMWF’s high-resolution model (ECMWF-HRES) and ensemble prediction system (ECMWF-ENS), and two of the recent machine-learning-based approaches, GraphCast 3 and Pangu 4 . For climate, we compare against a global cloud-resolving model and Atmospheric Model Intercomparison Project (AMIP) runs.

Medium-range weather forecasting

Our evaluation set-up focuses on quantifying accuracy and physical consistency, following WeatherBench2 12 . We regrid all forecasts to a 1.5° grid using conservative regridding, and average over all 732 forecasts made at noon and midnight UTC in the year 2020, which was held out from training data for all machine-learning models. NeuralGCM, GraphCast and Pangu are evaluated against ERA5 as the ground truth, whereas ECMWF-ENS and ECMWF-HRES are evaluated against the ECMWF operational analysis (that is, HRES at 0-hour lead time), to avoid penalizing the operational forecasts for having biases different from those of ERA5.
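The 732 forecasts are 2 initializations per day over the 366 days of the leap year 2020. Conservative regridding preserves the integral of a field. Each destination cell receives the average of the source cells it overlaps, weighted by the overlap. The sketch below is a hypothetical one-dimensional version on cell-averaged data. On the sphere the weights are 2D cell areas rather than interval lengths, and this is not WeatherBench2's implementation.

```python
from typing import List, Sequence


def conservative_regrid(src_edges: Sequence[float], src_values: Sequence[float],
                        dst_edges: Sequence[float]) -> List[float]:
    """Overlap-weighted average of source cell values onto destination cells."""
    out = []
    for d_lo, d_hi in zip(dst_edges[:-1], dst_edges[1:]):
        total, width = 0.0, 0.0
        for s_lo, s_hi, v in zip(src_edges[:-1], src_edges[1:], src_values):
            # Length of the intersection of the source and destination cells
            overlap = min(d_hi, s_hi) - max(d_lo, s_lo)
            if overlap > 0:
                total += overlap * v
                width += overlap
        out.append(total / width if width > 0 else float("nan"))
    return out
```

For example, regridding 0.75° cells with edges `[0, 0.75, 1.5, 2.25, 3.0]` and values `[1, 3, 5, 7]` onto 1.5° cells gives `[2.0, 6.0]`. The length-weighted sum (12.0) is unchanged.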

Model accuracy

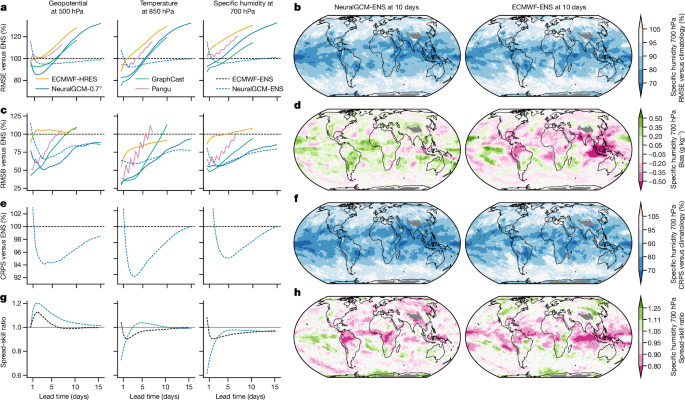

We use ECMWF’s ensemble (ENS) model as a reference baseline as it achieves the best performance across the majority of lead times 12 . We assess accuracy using (1) root-mean-squared error (RMSE), (2) root-mean-squared bias (RMSB), (3) continuous ranked probability score (CRPS) and (4) spread-skill ratio, with the results shown in Fig. 2 . We provide more in-depth evaluations including scorecards, metrics for additional variables and levels and maps in Extended Data Figs. 1 and 2 , Supplementary Information section H and Supplementary Figs. 9 – 22 .
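RMSE and RMSB differ in when the averaging over time happens. RMSE squares the error at every time before averaging. RMSB first averages the error over time at each grid point, giving a bias map, and then takes the root mean square over space. The sketch below assumes cos(latitude) area weights and one common averaging order. WeatherBench2's exact conventions may differ in detail. Inputs are lists over forecast times of lists over grid points, with one latitude per point.

```python
import math
from typing import List, Sequence


def area_weights(lats_deg: Sequence[float]) -> List[float]:
    """cos(latitude) weights normalized to mean 1, one per grid point."""
    w = [math.cos(math.radians(lat)) for lat in lats_deg]
    mean_w = sum(w) / len(w)
    return [x / mean_w for x in w]


def rmse(forecasts, truths, lats_deg) -> float:
    """Square root of the area-weighted squared error averaged over points and times."""
    w = area_weights(lats_deg)
    total = 0.0
    for f_t, y_t in zip(forecasts, truths):
        total += sum(wi * (f - y) ** 2 for wi, f, y in zip(w, f_t, y_t)) / len(w)
    return math.sqrt(total / len(forecasts))


def rmsb(forecasts, truths, lats_deg) -> float:
    """Time-mean error (bias) per grid point, then area-weighted RMS over space."""
    w = area_weights(lats_deg)
    n_t = len(forecasts)
    bias = [sum(f_t[i] - y_t[i] for f_t, y_t in zip(forecasts, truths)) / n_t
            for i in range(len(w))]
    return math.sqrt(sum(wi * b ** 2 for wi, b in zip(w, bias)) / len(w))
```

Errors that alternate between +1 and -1 give an RMSE of 1 but an RMSB of 0. A constant offset of 2 gives 2 for both. This is why RMSB isolates persistent errors.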

a , c , RMSE ( a ) and RMSB ( c ) for ECMWF-ENS, ECMWF-HRES, NeuralGCM-0.7°, NeuralGCM-ENS, GraphCast 3 and Pangu 4 on headline WeatherBench2 variables, as a percentage of the error of ECMWF-ENS. Deterministic and stochastic models are shown in solid and dashed lines respectively. e , g , CRPS relative to ECMWF-ENS ( e ) and spread-skill ratio for the ENS and NeuralGCM-ENS models ( g ). b , d , f , h , Spatial distributions of RMSE ( b ), bias ( d ), CRPS ( f ) and spread-skill ratio ( h ) for NeuralGCM-ENS and ECMWF-ENS models for 10-day forecasts of specific humidity at 700 hPa. Spatial plots of RMSE and CRPS show skill relative to a probabilistic climatology 12 with an ensemble member for each of the years 1990–2019. The grey areas indicate regions where climatological surface pressure on average is below 700 hPa.

Deterministic models that produce a single weather forecast for given initial conditions can be compared effectively using RMSE skill at short lead times. For the first 1–3 days, depending on the atmospheric variable, RMSE is minimized by forecasts that accurately track the evolution of weather patterns. At this timescale we find that NeuralGCM-0.7° and GraphCast achieve the best results, with slight variations across different variables (Fig. 2a ). At longer lead times, RMSE rapidly increases owing to chaotic divergence of nearby weather trajectories, making RMSE less informative for deterministic models. RMSB measures errors that persist over time, which provides an indication of how models would perform at much longer lead times. Here NeuralGCM models also compare favourably against previous approaches (Fig. 2c ), with notably less bias for specific humidity in the tropics (Fig. 2d ).

Ensembles are essential for capturing the intrinsic uncertainty of weather forecasts, especially at longer lead times. Beyond about 7 days, the ensemble means of ECMWF-ENS and NeuralGCM-ENS forecasts have considerably lower RMSE than the deterministic models, indicating that these models better capture the average of possible weather. A better metric for ensemble models is CRPS, which is a proper scoring rule that is sensitive to full marginal probability distributions 28 . Our stochastic model (NeuralGCM-ENS) running at 1.4° resolution has lower error compared with ECMWF-ENS across almost all variables, lead times and vertical levels for ensemble-mean RMSE, RMSB and CRPS (Fig. 2a,c,e and Supplementary Information section H ), with similar spatial patterns of skill (Fig. 2b,f ). Like ECMWF-ENS, NeuralGCM-ENS has a spread-skill ratio of approximately one (Fig. 2g ), which is a necessary condition for calibrated forecasts 29 .
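For a single case, the ensemble CRPS can be estimated as $\mathbb{E}|X - y| - \tfrac{1}{2}\mathbb{E}|X - X'|$. Here $X, X'$ are independent draws from the ensemble and $y$ is the verifying value. The first term rewards accuracy and the second credits spread, so lower is better. The spread-skill ratio compares the ensemble spread with the RMSE of the ensemble mean. The sketch below uses the plain empirical CRPS estimator and a spread-skill ratio without any finite-ensemble-size correction. Both are assumptions; WeatherBench2 may use adjusted variants.

```python
import math
from typing import Sequence


def crps_ensemble(members: Sequence[float], obs: float) -> float:
    """Empirical CRPS: mean |X - y| minus half the mean pairwise |X - X'|."""
    m = len(members)
    skill = sum(abs(x - obs) for x in members) / m
    spread = sum(abs(a - b) for a in members for b in members) / (m * m)
    return skill - 0.5 * spread


def spread_skill_ratio(ensembles, observations) -> float:
    """sqrt(mean ensemble variance) / RMSE of the ensemble mean (needs >= 2 members)."""
    var_sum, sq_err_sum = 0.0, 0.0
    for members, y in zip(ensembles, observations):
        m = len(members)
        mean = sum(members) / m
        var_sum += sum((x - mean) ** 2 for x in members) / (m - 1)
        sq_err_sum += (mean - y) ** 2
    n = len(observations)
    return math.sqrt(var_sum / n) / math.sqrt(sq_err_sum / n)
```

With a single member, the CRPS reduces to the absolute error. A two-member ensemble `[0, 1]` verifying at `0` scores 0.25, better than the 0.5 of its mean absolute error, because the spread term is credited. A ratio near one means the spread matches the typical error of the ensemble mean.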

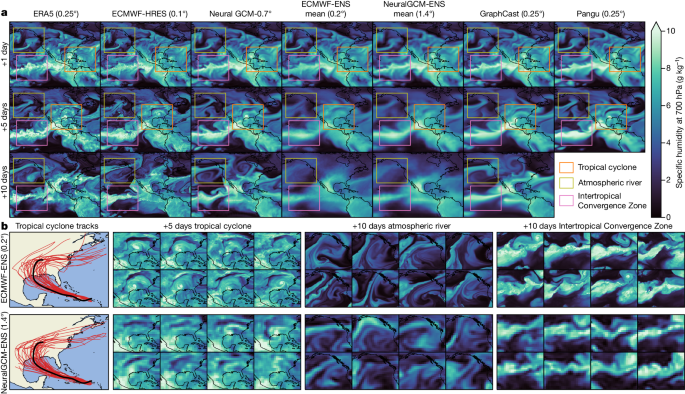

An important characteristic of forecasts is their resemblance to realistic weather patterns. Figure 3 shows a case study that illustrates the performance of NeuralGCM on three types of important weather phenomena: tropical cyclones, atmospheric rivers and the Intertropical Convergence Zone. Figure 3a shows that all the machine-learning models make significantly blurrier forecasts than the source data ERA5 and the physics-based ECMWF-HRES forecast, but NeuralGCM-0.7° outperforms the pure machine-learning models, despite its coarser resolution (0.7° versus 0.25° for GraphCast and Pangu). Blurry forecasts correspond to physically inconsistent atmospheric conditions and misrepresent extreme weather. Similar trends hold for other derived variables of meteorological interest (Supplementary Information section H.2 ). Ensemble-mean predictions, from both NeuralGCM and ECMWF, are closer to ERA5 in an average sense, and thus are inherently smooth at long lead times. In contrast, as shown in Fig. 3 and in Supplementary Information section H.3 , individual realizations from the ECMWF and NeuralGCM ensembles remain sharp, even at long lead times. Like ECMWF-ENS, NeuralGCM-ENS produces a statistically representative range of future weather scenarios for each weather phenomenon, despite its eight-times-coarser resolution.

All forecasts are initialized at 2020-08-22T12z, chosen to highlight Hurricane Laura, the most damaging Atlantic hurricane of 2020. a , Specific humidity at 700 hPa for 1-day, 5-day and 10-day forecasts over North America and the Northeast Pacific Ocean from ERA5 14 , ECMWF-HRES, NeuralGCM-0.7°, ECMWF-ENS (mean), NeuralGCM-ENS (mean), GraphCast 3 and Pangu 4 . b , Forecasts from individual ensemble members from ECMWF-ENS and NeuralGCM-ENS over regions of interest, including predicted tracks of Hurricane Laura from each of the 50 ensemble members (Supplementary Information section I.2 ). The track from ERA5 is plotted in black.

We can quantify the blurriness of different forecast models via their power spectra. Supplementary Figs. 17 and 18 show that the power spectra of NeuralGCM-0.7° are consistently closer to ERA5 than those of the other machine-learning forecast methods, but are still blurrier than ECMWF’s physical forecasts. The spectra of NeuralGCM forecasts are also roughly constant over the forecast period, in stark contrast to those of GraphCast, which worsen with lead time. The spectrum of NeuralGCM becomes more accurate with increased resolution (Supplementary Fig. 22 ), which suggests the potential for further improvements of NeuralGCM models trained at higher resolutions.
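Blurring shows up as missing power at high wavenumbers. The sketch below illustrates this on a hypothetical periodic 1D signal, loosely analogous to a zonal slice of a field, with a naive discrete Fourier transform. A 1-2-1 smoothing filter stands in for a "blurry" forecast. Spectra on the sphere would normally use spherical harmonics, so this shows only the principle, not the analysis behind the supplementary figures.

```python
import cmath
import math
from typing import List, Sequence


def power_spectrum(signal: Sequence[float]) -> List[float]:
    """Power at each nonnegative wavenumber of a periodic 1D signal (naive DFT)."""
    n = len(signal)
    spectrum = []
    for k in range(n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * j / n) for j, x in enumerate(signal))
        spectrum.append(abs(coeff) ** 2 / n)
    return spectrum


def smooth(signal: Sequence[float], passes: int = 1) -> List[float]:
    """Periodic 1-2-1 filter, used here as a stand-in for a blurry forecast."""
    out = list(signal)
    for _ in range(passes):
        n = len(out)
        out = [0.25 * out[i - 1] + 0.5 * out[i] + 0.25 * out[(i + 1) % n] for i in range(n)]
    return out
```

The filter multiplies wavenumber $k$ by $\tfrac12 + \tfrac12\cos(2\pi k/n)$. On 64 points it keeps over 99% of the power at $k=1$ but less than half at $k=12$, so a blurry field has a spectrum that falls off too quickly at small scales.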

Water budget

In NeuralGCM, advection is handled by the dynamical core, while the machine-learning parameterization models local processes within vertical columns of the atmosphere. Thus, unlike pure machine-learning methods, local sources and sinks can be isolated from tendencies owing to horizontal transport and other resolved dynamics (Supplementary Fig. 3 ). This makes our results more interpretable and facilitates the diagnosis of the water budget. Specifically, we diagnose precipitation minus evaporation (Supplementary Information section H.5 ) rather than directly predicting these as in machine-learning-based approaches 3 . For short weather forecasts, the mean of precipitation minus evaporation has a realistic spatial distribution that is very close to ERA5 data (Extended Data Fig. 4c–e ). The precipitation-minus-evaporation rate distribution of NeuralGCM-0.7° closely matches the ERA5 distribution in the extratropics (Extended Data Fig. 4b ), although it underestimates extreme events in the tropics (Extended Data Fig. 4a ). Note that the current version of NeuralGCM directly predicts net tendencies for an atmospheric column, and thus cannot distinguish between precipitation and evaporation.
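One way to diagnose P − E from column tendencies is to integrate the learned physics tendency of total water over the column in pressure. Local physics moistens the column by E − P, so $P - E = -\frac{1}{g}\int (\partial q/\partial t)_{\text{phys}}\,dp$. The sketch below assumes that relation, a trapezoidal rule on hypothetical pressure levels, and a single summed "total water" tendency. The paper's actual procedure is in Supplementary Information section H.5. Because only the net tendency enters, the result cannot be split into P and E, which matches the limitation noted above.

```python
from typing import Sequence

G = 9.80665               # standard gravity, m s^-2
SECONDS_PER_DAY = 86400.0


def precip_minus_evap(pressure_pa: Sequence[float],
                      moisture_tendency: Sequence[float]) -> float:
    """Column P - E in mm/day from the physics tendency of total water.

    pressure_pa: level pressures in Pa, increasing downward.
    moisture_tendency: physics tendency of total water (kg/kg/s) on those levels.
    """
    integral = 0.0
    for k in range(len(pressure_pa) - 1):
        dp = pressure_pa[k + 1] - pressure_pa[k]
        integral += 0.5 * (moisture_tendency[k] + moisture_tendency[k + 1]) * dp
    # integral / G is column moistening (E - P) in kg m^-2 s^-1, i.e. mm of water per second
    return -integral / G * SECONDS_PER_DAY
```

A uniform drying of $10^{-8}$ kg/kg/s through a 1,000 hPa column corresponds to roughly 8.8 mm/day of net precipitation.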

Geostrophic wind balance

We examined the extent to which NeuralGCM, GraphCast and ECMWF-HRES capture the geostrophic wind balance, the near-equilibrium between the dominant forces that drive large-scale dynamics in the mid-latitudes 30 . A recent study 16 highlighted that Pangu misrepresents the vertical structure of the geostrophic and ageostrophic winds and noted a deterioration at longer lead times. Similarly, we observe that GraphCast shows an error that worsens with lead time. In contrast, NeuralGCM more accurately depicts the vertical structure of the geostrophic and ageostrophic winds, as well as their ratio, compared with GraphCast across various rollouts, when compared against ERA5 data (Extended Data Fig. 3 ). However, ECMWF-HRES still shows a slightly closer alignment to ERA5 data than NeuralGCM does. Within NeuralGCM, the representation of the geostrophic wind’s vertical structure only slightly degrades in the initial few days, showing no noticeable changes thereafter, particularly beyond day 5.
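The geostrophic wind is the wind that would exactly balance the Coriolis force against the horizontal pressure-gradient force. On a pressure surface it follows from the geopotential $\Phi$ as $u_g = -\frac{1}{f}\frac{\partial \Phi}{\partial y}$ and $v_g = \frac{1}{f}\frac{\partial \Phi}{\partial x}$, with $f = 2\Omega\sin\varphi$. The ageostrophic wind is the actual wind minus the geostrophic wind. The sketch below evaluates these with centred differences on a hypothetical latitude-longitude grid. It is an illustration of the diagnostic, not the analysis code behind Extended Data Fig. 3.

```python
import math
from typing import Sequence, Tuple

OMEGA = 7.292e-5        # Earth's rotation rate, s^-1
EARTH_RADIUS = 6.371e6  # m


def coriolis(lat_deg: float) -> float:
    return 2.0 * OMEGA * math.sin(math.radians(lat_deg))


def geostrophic_wind(phi, lats_deg: Sequence[float], lons_deg: Sequence[float],
                     i: int, j: int) -> Tuple[float, float]:
    """(u_g, v_g) at interior point (lat index i, lon index j) from geopotential phi[i][j]."""
    f = coriolis(lats_deg[i])
    dy = EARTH_RADIUS * math.radians(lats_deg[i + 1] - lats_deg[i - 1])
    dx = (EARTH_RADIUS * math.cos(math.radians(lats_deg[i]))
          * math.radians(lons_deg[j + 1] - lons_deg[j - 1]))
    dphi_dy = (phi[i + 1][j] - phi[i - 1][j]) / dy
    dphi_dx = (phi[i][j + 1] - phi[i][j - 1]) / dx
    return -dphi_dy / f, dphi_dx / f


def ageostrophic_wind(u: float, v: float, u_g: float, v_g: float) -> Tuple[float, float]:
    """Actual wind minus geostrophic wind."""
    return u - u_g, v - v_g
```

In the Northern Hemisphere, geopotential that increases eastward yields a purely northward geostrophic wind. The ratio of ageostrophic to geostrophic magnitude is one of the quantities compared above.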

Generalizing to unseen data

Physically consistent weather models should still perform well for weather conditions for which they were not trained. We expect that NeuralGCM may generalize better than machine-learning-only atmospheric models, because NeuralGCM employs neural networks that act locally in space, on individual vertical columns of the atmosphere. To explore this hypothesis, we compare versions of NeuralGCM-0.7° and GraphCast trained on data up to 2017 on 5 years of weather forecasts beyond the training period (2018–2022) in Supplementary Fig. 36 . Unlike GraphCast, NeuralGCM does not show a clear trend of increasing error when initialized further into the future from the training data. To extend this test beyond 5 years, we trained a NeuralGCM-2.8° model using only data before 2000, and tested its skill for over 21 unseen years (Supplementary Fig. 35 ).

Climate simulations

Although our deterministic NeuralGCM models are trained to predict weather up to 3 days ahead, they are generally capable of simulating the atmosphere far beyond medium-range weather timescales. For extended climate simulations, we prescribe historical sea surface temperature (SST) and sea-ice concentration. These simulations feature many emergent phenomena of the atmosphere on timescales from months to decades.

For climate simulations with NeuralGCM, we use 2.8° and 1.4° deterministic models, which are relatively inexpensive to train (Supplementary Information section G.7 ) and allow us to explore a larger parameter space to find stable models. Previous studies found that running extended simulations with hybrid models is challenging due to numerical instabilities and climate drift 21 . To quantify stability in our selected models, we run multiple initial conditions and report how many of them finish without instability.

Seasonal cycle and emergent phenomena

To assess the capability of NeuralGCM to simulate various aspects of the seasonal cycle, we run 2-year simulations with NeuralGCM-1.4° for 37 different initial conditions spaced every 10 days for the year 2019. Out of these 37 initial conditions, 35 successfully complete the full 2 years without instability; for case studies of instability, see Supplementary Information section H.7 , and Supplementary Figs. 26 and 27 . We compare results from NeuralGCM-1.4° for 2020 with ERA5 data and with outputs from the X-SHiELD global cloud-resolving model, which is coupled to an ocean model nudged towards reanalysis 31 . This X-SHiELD run has been used as a target for training machine-learning climate models 24 . For comparison, we evaluate models after regridding predictions to 1.4° resolution. This comparison slightly favours NeuralGCM because NeuralGCM was tuned to match ERA5, but the discrepancy between ERA5 and the actual atmosphere is small relative to model error.

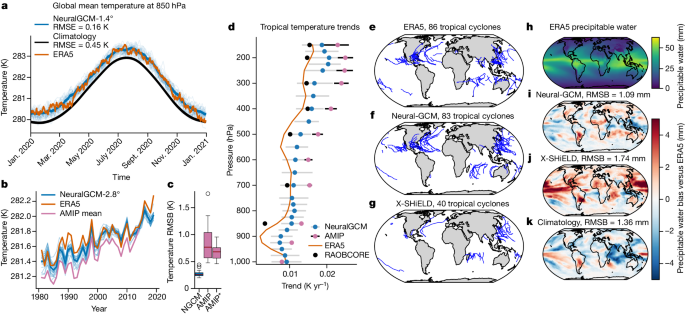

Figure 4a shows the temporal variation of the global mean temperature for 2020, as captured by 35 simulations from NeuralGCM, in comparison with the ERA5 reanalysis and standard climatology benchmarks. The seasonality and variability of the global mean temperature from NeuralGCM are quantitatively similar to those observed in ERA5. The ensemble-mean temperature RMSE for NeuralGCM stands at 0.16 K when benchmarked against ERA5, which is a significant improvement over the climatology’s RMSE of 0.45 K. We find that NeuralGCM accurately simulates the seasonal cycle, as evidenced by metrics such as the annual cycle of the global precipitable water (Supplementary Fig. 30a ) and global total kinetic energy (Supplementary Fig. 30b ). Furthermore, the model captures essential atmospheric dynamics, including the Hadley circulation and the zonal-mean zonal wind (Supplementary Fig. 28 ), as well as the spatial patterns of eddy kinetic energy in different seasons (Supplementary Fig. 31 ), and the distinctive seasonal behaviours of monsoon circulation (Supplementary Fig. 29 ; additional details are provided in Supplementary Information section I.1 ).

a , Global mean temperature for ERA5 14 (orange), 1990–2019 climatology (black) and NeuralGCM-1.4° (blue) for 2020 using 35 simulations initialized every 10 days during 2019 (thick line, ensemble mean; thin lines, different initial conditions). b , Yearly global mean temperature for ERA5 (orange), mean over 22 CMIP6 AMIP experiments 34 (violet; model details are in Supplementary Information section I.3 ) and NeuralGCM-2.8° for 22 AMIP-like simulations with prescribed SST initialized every 10 days during 1980 (thick line, ensemble mean; thin lines, different initial conditions). c , The RMSB of the 850-hPa temperature averaged between 1981 and 2014 for 22 NeuralGCM-2.8° AMIP runs (labelled NGCM), 22 CMIP6 AMIP experiments (labelled AMIP) and debiased 22 CMIP6 AMIP experiments (labelled AMIP*; bias was removed by removing the 850-hPa global temperature bias). In the box plots, the red line represents the median. The box delineates the first to third quartiles; the whiskers extend to 1.5 times the interquartile range (Q1 − 1.5IQR and Q3 + 1.5IQR), and outliers are shown as individual dots. d , Vertical profiles of tropical (20° S–20° N) temperature trends for 1981–2014. Orange, ERA5; black dots, Radiosonde Observation Correction using Reanalyses (RAOBCORE) 41 ; blue dots, mean trends for NeuralGCM; purple dots, mean trends from CMIP6 AMIP runs (grey and black whiskers, 25th and 75th percentiles for NeuralGCM and CMIP6 AMIP runs, respectively). e – g , Tropical cyclone tracks for ERA5 ( e ), NeuralGCM-1.4° ( f ) and X-SHiELD 31 ( g ). h – k , Mean precipitable water for ERA5 ( h ) and the precipitable water bias in NeuralGCM-1.4° ( i ), initialized 90 days before mid-January 2020 similarly to X-SHiELD, X-SHiELD ( j ) and climatology ( k ; averaged between 1990 and 2019). In d – i , quantities are calculated between mid-January 2020 and mid-January 2021 and all models were regridded to a 256 × 128 Gaussian grid before computation and tracking.

Next, we compare the annual biases of a single NeuralGCM realization with a single realization of X-SHiELD (the only one available), both initialized in mid-October 2019. We consider 19 January 2020 to 17 January 2021, the time frame for which X-SHiELD data are available. Global cloud-resolving models, such as X-SHiELD, are considered state of the art, especially for simulating the hydrological cycle, owing to their resolution being capable of resolving deep convection 32 . The annual bias in precipitable water for NeuralGCM (RMSE of 1.09 mm) is substantially smaller than the biases of both X-SHiELD (RMSE of 1.74 mm) and climatology (RMSE of 1.36 mm; Fig. 4i–k ). Moreover, NeuralGCM shows a lower temperature bias in the upper and lower troposphere than X-SHiELD (Extended Data Fig. 6 ). We also indirectly compare precipitation bias in X-SHiELD with precipitation-minus-evaporation bias in NeuralGCM-1.4°, which shows slightly larger bias and grid-scale artefacts for NeuralGCM (Extended Data Fig. 5 ).

Finally, to assess the capability of NeuralGCM to generate tropical cyclones in an annual model integration, we use the tropical cyclone tracker TempestExtremes 33 , as described in Supplementary Information section I.2 , Supplementary Fig. 34 and Supplementary Table 6 . Figure 4e–g shows that NeuralGCM, even at a coarse resolution of 1.4°, produces realistic trajectories and counts of tropical cyclones (83 versus 86 in ERA5 for the corresponding period), whereas X-SHiELD, when regridded to 1.4° resolution, substantially underestimates the tropical cyclone count (40). Additional statistical analyses of tropical cyclones can be found in Extended Data Figs. 7 and 8 .

Decadal simulations

To assess the capability of NeuralGCM to simulate historical temperature trends, we conduct AMIP-like simulations over a duration of 40 years with NeuralGCM-2.8°. Out of 37 different runs with initial conditions spaced every 10 days during the year 1980, 22 simulations were stable for the entire 40-year period, and our analysis focuses on these results. We compare with 22 simulations run with prescribed SST from the Coupled Model Intercomparison Project Phase 6 (CMIP6) 34 , listed in Supplementary Information section I.3 .

We find that all 40-year simulations of NeuralGCM, as well as the mean of the 22 AMIP runs, accurately capture the global warming trends observed in ERA5 data (Fig. 4b ). There is a strong correlation in the year-to-year temperature trends with ERA5 data, suggesting that NeuralGCM effectively captures the impact of SST forcing on climate. When comparing spatial biases averaged over 1981–2014, we find that all 22 NeuralGCM-2.8° runs have smaller bias than the CMIP6 AMIP runs, and this result remains even when removing the global temperature bias in CMIP6 AMIP runs (Fig. 4c and Supplementary Figs. 32 and 33 ).

Next, we investigated the vertical structure of tropical warming trends, which climate models tend to overestimate in the upper troposphere 35 . As shown in Fig. 4d , the trends, calculated by linear regression, of NeuralGCM are closer to ERA5 than those of AMIP runs. In particular, the bias in the upper troposphere is reduced. However, NeuralGCM does show a wider spread in its predictions than the AMIP runs, even at levels near the surface where temperatures are typically more constrained by prescribed SST.
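The trends in Fig. 4d are described only as calculated by linear regression. The sketch below shows the ordinary least-squares slope applied level by level to yearly mean temperatures, reported in K per decade. The level keys, units and input layout are hypothetical.

```python
from typing import Dict, Sequence


def linear_trend(years: Sequence[float], values: Sequence[float]) -> float:
    """Ordinary least-squares slope of values against years (units per year)."""
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(values) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, values))
    var = sum((x - mean_x) ** 2 for x in years)
    return cov / var


def trend_profile(years: Sequence[float],
                  temps_by_level: Dict[int, Sequence[float]]) -> Dict[int, float]:
    """Trend in K per decade for each pressure level (e.g. keyed by hPa)."""
    return {level: 10.0 * linear_trend(years, series)
            for level, series in temps_by_level.items()}
```

For yearly values over 1981–2014 that warm by exactly 0.02 K per year, the profile entry is 0.2 K per decade. Repeating this over every level, and over each ensemble member, gives the vertical profiles and spreads compared in the figure.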

Lastly, we evaluated NeuralGCM’s capability to generalize to unseen warmer climates by conducting AMIP simulations with increased SST (Supplementary Information section I.4.2 ). We find that NeuralGCM shows some of the robust features of climate warming response to modest SST increases (+1 K and +2 K); however, for more substantial SST increases (+4 K), NeuralGCM’s response diverges from expectations (Supplementary Fig. 37 ). In addition, AMIP simulations with increased SST show climate drift, underscoring NeuralGCM’s limitations in this context (Supplementary Fig. 38 ).

NeuralGCM is a differentiable hybrid atmospheric model that combines the strengths of traditional GCMs with machine learning for weather forecasting and climate simulation. To our knowledge, NeuralGCM is the first machine-learning-based model to make accurate ensemble weather forecasts, with better CRPS than state-of-the-art physics-based models. It is also, to our knowledge, the first hybrid model that achieves comparable spatial bias to global cloud-resolving models, can simulate realistic tropical cyclone tracks and can run AMIP-like simulations with realistic historical temperature trends. Overall, NeuralGCM demonstrates that incorporating machine learning is a viable alternative to building increasingly detailed physical models 32 for improving GCMs.

Compared with traditional GCMs with similar skill, NeuralGCM is computationally efficient and has low complexity. NeuralGCM runs at 8- to 40-times-coarser horizontal resolution than ECMWF’s Integrated Forecasting System and global cloud-resolving models, which enables 3 to 5 orders of magnitude savings in computational resources. For example, NeuralGCM-1.4° simulates 70,000 simulated days in 24 hours using a single tensor processing unit (TPU), versus 19 simulated days on 13,824 central processing unit (CPU) cores with X-SHiELD (Extended Data Table 1 ). This can be leveraged for previously impractical tasks such as large ensemble forecasting. NeuralGCM’s dynamical core uses global spectral methods 36 , and learned physics is parameterized with fully connected neural networks acting on single vertical columns. Substantial headroom exists to pursue higher accuracy using advanced numerical methods and machine-learning architectures.
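The quoted throughput figures allow a quick sanity check of the scale of the difference. The snippet below computes only the ratio of simulated days per 24 hours of wall-clock time. It does not normalize for the very different hardware (one TPU versus 13,824 CPU cores), so it is not the paper's resource accounting.

```python
import math
from typing import Tuple


def throughput_comparison(ngcm_days: float = 70_000,
                          xshield_days: float = 19) -> Tuple[float, float]:
    """Ratio of simulated days per 24 h of wall-clock time, and its order of magnitude."""
    ratio = ngcm_days / xshield_days
    return ratio, math.log10(ratio)
```

The ratio is about 3,700, roughly 3.6 orders of magnitude in wall-clock throughput alone. This comes before accounting for the X-SHiELD run also using far more hardware.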

Our results provide strong evidence for the disputed hypothesis 37 , 38 , 39 that learning to predict short-term weather is an effective way to tune parameterizations for climate. NeuralGCM models trained on 72-hour forecasts are capable of realistic multi-year simulation. When provided with historical SSTs, they capture essential atmospheric dynamics such as seasonal circulation, monsoons and tropical cyclones. However, we will probably need alternative training strategies 38 , 39 to learn important processes for climate with subtle impacts on weather timescales, such as cloud feedbacks.

The NeuralGCM approach is compatible with incorporating either more physics or more machine learning, as required for operational weather forecasts and climate simulations. For weather forecasting, we expect that end-to-end learning 40 with observational data will allow for better and more relevant predictions, including key variables such as precipitation. Such models could include neural networks acting as corrections to traditional data assimilation and model diagnostics. For climate projection, NeuralGCM will need to be reformulated to enable coupling with other Earth-system components (for example, ocean and land), and integrating data on the atmospheric chemical composition (for example, greenhouse gases and aerosols). There are also research challenges common to current machine-learning-based climate models 19 , including the capability to simulate unprecedented climates (that is, generalization), adhering to physical constraints, and resolving numerical instabilities and climate drift. NeuralGCM’s flexibility to incorporate physics-based models (for example, radiation) offers a promising avenue to address these challenges.

Models based on physical laws and empirical relationships are ubiquitous in science. We believe the differentiable hybrid modelling approach of NeuralGCM has the potential to transform simulation for a wide range of applications, such as materials discovery, protein folding and multiphysics engineering design.

Differentiable atmospheric model

NeuralGCM combines components of the numerical solver and flexible neural network parameterizations. Simulation in time is carried out in a coordinate system suitable for solving the dynamical equations of the atmosphere, describing large-scale fluid motion and thermodynamics under the influence of gravity and the Coriolis force.

Our differentiable dynamical core is implemented in JAX, a library for high-performance code in Python that supports automatic differentiation 42 . The dynamical core solves the hydrostatic primitive equations with moisture, using a horizontal pseudo-spectral discretization and vertical sigma coordinates 36 , 43 . We evolve seven prognostic variables: vorticity and divergence of horizontal wind, temperature, surface pressure, and three water species (specific humidity, and specific ice and liquid cloud water content).
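The pseudo-spectral idea can be illustrated with a one-dimensional periodic analogue: derivatives are computed exactly in spectral space, while nonlinear products are formed pointwise on the grid. This is a minimal sketch using a naive Fourier transform; NeuralGCM's dynamical core works with spherical harmonics on the sphere, and none of the function names below come from the Dinosaur code base.

```python
import cmath
import math
from typing import List


def dft(values: List[float]) -> List[complex]:
    """Naive O(N^2) discrete Fourier transform (grid space -> spectral space)."""
    n = len(values)
    return [
        sum(values[j] * cmath.exp(-2j * math.pi * k * j / n) for j in range(n))
        for k in range(n)
    ]


def idft(coeffs: List[complex]) -> List[float]:
    """Inverse transform (spectral space -> grid space), keeping real parts."""
    n = len(coeffs)
    return [
        (sum(coeffs[k] * cmath.exp(2j * math.pi * k * j / n) for k in range(n)) / n).real
        for j in range(n)
    ]


def spectral_derivative(values: List[float]) -> List[float]:
    """d/dx of a periodic field sampled on n equispaced points in [0, 2*pi)."""
    n = len(values)
    out = []
    for k, c in enumerate(dft(values)):
        wavenumber = k if k <= n // 2 else k - n  # signed wavenumber of mode k
        if n % 2 == 0 and k == n // 2:
            wavenumber = 0  # drop the Nyquist mode, whose derivative is ambiguous
        out.append(1j * wavenumber * c)  # differentiation is multiplication by i*k
    return idft(out)


def advection_tendency(u: List[float]) -> List[float]:
    """Pseudo-spectral -u * du/dx: derivative in spectral space, product on the grid."""
    du_dx = spectral_derivative(u)
    return [-a * b for a, b in zip(u, du_dx)]
```

Forming the product on the grid avoids an expensive convolution of spectral coefficients, which is the usual motivation for the pseudo-spectral approach.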

Our learned physics module uses the single-column approach of GCMs 2 , whereby information from only a single atmospheric column is used to predict the impact of unresolved processes occurring within that column. These effects are predicted using a fully connected neural network with residual connections, with weights shared across all atmospheric columns (Supplementary Information section C.4 ).
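A minimal sketch of a column-wise network with residual connections and shared weights follows. The layer sizes, tanh activation and initialization are illustrative assumptions, not the architecture given in Supplementary Information section C.4; the point is that the same parameters are applied independently to every column, so identical columns produce identical tendencies.

```python
import math
import random
from typing import Dict, List, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weights as out_dim x in_dim rows, bias)


def dense(weights: List[List[float]], bias: List[float], x: List[float]) -> List[float]:
    """Fully connected layer."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def init_layer(rng: random.Random, in_dim: int, out_dim: int, scale: float = 0.1) -> Layer:
    weights = [[rng.gauss(0.0, scale) for _ in range(in_dim)] for _ in range(out_dim)]
    return weights, [0.0] * out_dim


def init_params(rng: random.Random, in_dim: int, hidden: int, out_dim: int,
                n_blocks: int = 2) -> Dict:
    return {
        "inp": init_layer(rng, in_dim, hidden),
        "blocks": [init_layer(rng, hidden, hidden) for _ in range(n_blocks)],
        "out": init_layer(rng, hidden, out_dim),
    }


def column_net(params: Dict, column: List[float]) -> List[float]:
    """Map the features of one atmospheric column to tendencies for that column."""
    h = dense(*params["inp"], column)
    for w, b in params["blocks"]:
        # Residual connection: h <- h + tanh(W h + b)
        h = [hi + math.tanh(zi) for hi, zi in zip(h, dense(w, b, h))]
    return dense(*params["out"], h)


def apply_to_all_columns(params: Dict, columns: List[List[float]]) -> List[List[float]]:
    """Same weights for every column; no information passes between columns."""
    return [column_net(params, col) for col in columns]
```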

The inputs to the neural network include the prognostic variables in the atmospheric column, total incident solar radiation, sea-ice concentration and SST (Supplementary Information section C.1). We also provide horizontal gradients of the prognostic variables, which we found to improve performance 44. All inputs are standardized to have zero mean and unit variance using statistics precomputed during model initialization. The outputs are the prognostic variable tendencies scaled by the fixed unconditional standard deviation of the target field (Supplementary Information section C.5).
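The input standardization and output scaling can be sketched as follows, assuming per-feature statistics that are computed once and then held fixed. The helper names are hypothetical, and the fallback to a unit scale for constant features is an added safeguard rather than something stated in the text.

```python
import statistics
from typing import List, Sequence, Tuple


def fit_standardizer(samples: Sequence[Sequence[float]]) -> Tuple[List[float], List[float]]:
    """Per-feature mean and standard deviation, computed once up front and then frozen."""
    features = list(zip(*samples))
    means = [statistics.fmean(f) for f in features]
    # Guard against constant features so standardization never divides by zero.
    stds = [statistics.pstdev(f) or 1.0 for f in features]
    return means, stds


def standardize(x: Sequence[float], means: Sequence[float], stds: Sequence[float]) -> List[float]:
    """Map raw inputs to zero mean and unit variance."""
    return [(xi - m) / s for xi, m, s in zip(x, means, stds)]


def to_physical_tendency(net_output: Sequence[float], target_std: Sequence[float]) -> List[float]:
    """Network outputs are in units of each target field's unconditional std."""
    return [y * s for y, s in zip(net_output, target_std)]
```

Scaling outputs by a fixed standard deviation lets the network predict values of order one for every variable, regardless of the physical units of each tendency.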

To interface between ERA5 14 data stored in pressure coordinates and the sigma coordinate system of our dynamical core, we introduce encoder and decoder components (Supplementary Information section D ). These components perform linear interpolation between pressure levels and sigma coordinate levels. We additionally introduce learned corrections to both encoder and decoder steps (Supplementary Figs. 4–6 ), using the same column-based neural network architecture as the learned physics module. Importantly, the encoder enables us to eliminate the gravity waves from initialization shock 45 , which otherwise contaminate forecasts.
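Because sigma coordinates define each level as a fixed fraction of surface pressure ($\sigma = p / p_s$), the interpolation part of the encoder amounts to finding the pressure of each sigma level in a column and interpolating between the neighbouring pressure levels. The sketch below assumes interpolation that is linear in pressure and clamps values outside the available range; both are assumptions, and the learned corrections are omitted.

```python
from bisect import bisect_left
from typing import List, Sequence


def interp_linear(x: float, xs: Sequence[float], ys: Sequence[float]) -> float:
    """Piecewise-linear interpolation; xs must be ascending. Clamps outside the range."""
    if x <= xs[0]:
        return ys[0]
    if x >= xs[-1]:
        return ys[-1]
    i = bisect_left(xs, x)
    x0, x1 = xs[i - 1], xs[i]
    y0, y1 = ys[i - 1], ys[i]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


def pressure_to_sigma(field_on_p: Sequence[float], p_levels: Sequence[float],
                      sigma_levels: Sequence[float], surface_pressure: float) -> List[float]:
    """Encoder-style regridding of one column (levels ordered top to bottom)."""
    target_p = [s * surface_pressure for s in sigma_levels]  # p = sigma * p_s
    return [interp_linear(p, p_levels, field_on_p) for p in target_p]


def sigma_to_pressure(field_on_sigma: Sequence[float], sigma_levels: Sequence[float],
                      p_levels: Sequence[float], surface_pressure: float) -> List[float]:
    """Decoder-style regridding back to fixed pressure levels."""
    column_p = [s * surface_pressure for s in sigma_levels]
    return [interp_linear(p, column_p, field_on_sigma) for p in p_levels]
```

Because each column has its own surface pressure, the same sigma level maps to a different pressure in every column, which is why the regridding is done column by column.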

Figure 1a shows the sequence of steps that NeuralGCM takes to make a forecast. First, it encodes ERA5 data at $t = t_0$ on pressure levels to initial conditions on sigma coordinates. To perform a time step, the dynamical core and learned physics (Fig. 1b) then compute tendencies, which are integrated in time using an implicit–explicit (IMEX) ordinary differential equation (ODE) solver 46 (Supplementary Information section E and Supplementary Table 2). This is repeated to advance the model from $t = t_0$ to $t = t_{\text{final}}$. Finally, the decoder converts predictions back to pressure levels.
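The encode, step and decode sequence can be sketched on a scalar toy problem $dy/dt = \lambda y + N(y)$. The sketch uses first-order forward/backward Euler, the simplest member of the implicit–explicit family, rather than the IMEX Runge–Kutta scheme cited in the text: the stiff linear term (standing in for fast waves) is treated implicitly and the remaining tendency explicitly. The `encode` and `decode` callables are hypothetical placeholders.

```python
from typing import Callable, List


def imex_euler_step(y: float, dt: float, stiff_rate: float,
                    explicit_tendency: Callable[[float], float]) -> float:
    """One step of dy/dt = stiff_rate * y + explicit_tendency(y).

    Implicit in the stiff linear term, explicit in the rest:
        y_new = (y + dt * explicit_tendency(y)) / (1 - dt * stiff_rate)
    """
    return (y + dt * explicit_tendency(y)) / (1.0 - dt * stiff_rate)


def forecast(x0: float,
             encode: Callable[[float], float],
             decode: Callable[[float], float],
             explicit_tendency: Callable[[float], float],
             stiff_rate: float, dt: float, n_steps: int) -> List[float]:
    """Encode the initial condition, take n_steps IMEX steps, decode each state."""
    y = encode(x0)
    trajectory: List[float] = []
    for _ in range(n_steps):
        y = imex_euler_step(y, dt, stiff_rate, explicit_tendency)
        trajectory.append(decode(y))
    return trajectory
```

With `stiff_rate * dt = -100`, forward Euler would multiply the solution by −99 at every step, whereas the implicit treatment damps it; treating the fast terms implicitly is what permits the larger time step.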

The time-step size of the ODE solver (Supplementary Table 3) is limited by the Courant–Friedrichs–Lewy (CFL) condition on the dynamics and can be small relative to the timescale of atmospheric change. Evaluating learned physics is approximately 1.5 times as expensive as a time step of the dynamical core. Accordingly, following typical practice for GCMs, we hold the learned physics tendencies constant over multiple ODE time steps, typically spanning 30 minutes of simulation time, to reduce computational expense.
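Holding physics tendencies fixed between refreshes can be sketched as below. The 1.5 cost factor comes from the text; the refresh interval is a free parameter here (for example, 6 steps would span 30 minutes under a hypothetical 5-minute dynamics step, which is not the value from Supplementary Table 3).

```python
from typing import Any, Callable, Tuple


def run_with_cached_physics(
    state: Any,
    n_steps: int,
    physics_every: int,
    dynamics_step: Callable[[Any, Any], Any],
    physics: Callable[[Any], Any],
) -> Tuple[Any, int]:
    """Advance n_steps dynamics steps, re-evaluating physics every `physics_every` steps.

    Between refreshes the most recent physics tendency is reused unchanged.
    Returns the final state and the number of physics evaluations performed.
    """
    physics_calls = 0
    tendency = None
    for step in range(n_steps):
        if step % physics_every == 0:
            tendency = physics(state)  # the expensive learned-physics call
            physics_calls += 1
        state = dynamics_step(state, tendency)  # reuses the cached tendency
    return state, physics_calls


def cost_per_dynamics_step(physics_every: int, physics_cost: float = 1.5) -> float:
    """Average cost per step, in units of one dynamical-core step.

    physics_cost=1.5 reflects the text: one learned-physics evaluation costs
    about 1.5 times a dynamical-core step.
    """
    return 1.0 + physics_cost / physics_every
```

Under these assumptions, refreshing physics every 6 steps lowers the average cost from 2.5 to 1.25 dynamical-core steps per time step.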

Deterministic and stochastic models

We train deterministic NeuralGCM models using a combination of three loss functions (Supplementary Information section G.4) to encourage accuracy and sharpness while penalizing bias. During the main training phase, all losses are defined in a spherical harmonics basis. We use a standard mean squared error loss to promote accuracy, modified to progressively filter out contributions from higher total wavenumbers at longer lead times (Supplementary Fig. 8). This filtering addresses the ‘double penalty problem’ 47, because it prevents the model from being penalized for predicting high-wavenumber features in incorrect locations at later times, especially beyond the predictability horizon. A second loss term encourages the spectrum to match the training data, using a squared loss on the total wavenumber spectrum of the prognostic variables. These first two losses are evaluated on both sigma and pressure levels. Finally, a third loss term discourages bias by adding a mean squared error on the batch-averaged mean amplitude of each spherical harmonic coefficient. For an analysis of the impact of the various loss functions, see Supplementary Information section H.6.1 and Supplementary Figs. 23 and 24. The combined action of the three training losses allows the resulting models, trained on 3-day rollouts, to remain stable during years- to decades-long climate simulations. Before final evaluations, we additionally fine-tune just the decoder component on short rollouts of 24 hours (Supplementary Information section G.5).
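The three loss terms can be sketched on spherical harmonic coefficients stored as a mapping from (total wavenumber l, order m) to a value. This is illustrative only: real spherical harmonic coefficients are complex, the linear cutoff schedule and its parameters (`l_max`, `l_min`, `horizon_hours`) are hypothetical, and the 'mean amplitude' in the bias term is interpreted here as the batch mean of each coefficient. The exact definitions are in Supplementary Information section G.4.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Coeffs = Dict[Tuple[int, int], float]  # (total wavenumber l, order m) -> value


def cutoff_wavenumber(lead_hours: float, l_max: int = 64, l_min: int = 8,
                      horizon_hours: float = 72.0) -> int:
    """Hypothetical schedule: keep fewer total wavenumbers at longer lead times."""
    frac = min(max(lead_hours / horizon_hours, 0.0), 1.0)
    return round(l_max - frac * (l_max - l_min))


def filtered_mse(pred: Coeffs, target: Coeffs, lead_hours: float) -> float:
    """MSE over coefficients whose total wavenumber is below the lead-time cutoff."""
    l_cut = cutoff_wavenumber(lead_hours)
    keys = [k for k in target if k[0] <= l_cut]
    return sum((pred[k] - target[k]) ** 2 for k in keys) / len(keys)


def power_spectrum(c: Coeffs) -> Dict[int, float]:
    """Total power at each total wavenumber l (summed over orders m)."""
    spec: Dict[int, float] = defaultdict(float)
    for (l, _m), v in c.items():
        spec[l] += v * v
    return spec


def spectrum_loss(pred: Coeffs, target: Coeffs) -> float:
    """Squared error between the power spectra of prediction and target."""
    sp, st = power_spectrum(pred), power_spectrum(target)
    ls = set(sp) | set(st)
    return sum((sp[l] - st[l]) ** 2 for l in ls) / len(ls)


def bias_loss(preds: List[Coeffs], targets: List[Coeffs]) -> float:
    """Squared error between batch means of each coefficient."""
    n = len(preds)
    keys = list(targets[0])
    err = 0.0
    for k in keys:
        mean_p = sum(p[k] for p in preds) / n
        mean_t = sum(t[k] for t in targets) / n
        err += (mean_p - mean_t) ** 2
    return err / len(keys)
```

The spectrum term is insensitive to the sign or phase of a feature, so it rewards realistic sharpness without penalizing misplacement; that is why it complements, rather than replaces, the filtered mean squared error.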

Stochastic NeuralGCM models incorporate inherent randomness in the form of additional random fields passed as inputs to neural network components. Our stochastic loss is based on the continuous ranked probability score (CRPS) 28, 48, 49. CRPS consists of a mean absolute error term that encourages accuracy, balanced by a similar term that encourages ensemble spread. For each variable, we use the sum of CRPS in grid space and CRPS in the spherical harmonic basis below a maximum cut-off wavenumber (Supplementary Information section G.6). We compute CRPS on rollout lengths from 6 hours to 5 days. As illustrated in Fig. 1, we inject noise into the learned encoder and the learned physics module by sampling from Gaussian random fields with learned spatial and temporal correlation (Supplementary Information section C.2 and Supplementary Fig. 2). For training, we generate two ensemble members per forecast, which suffices for an unbiased estimate of CRPS.
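For a scalar target $y$ and $M$ ensemble members $x_i$, the unbiased ('fair') ensemble estimator of CRPS is $\frac{1}{M}\sum_i |x_i - y| - \frac{1}{2M(M-1)}\sum_{i \ne j} |x_i - x_j|$. A minimal sketch follows; it is a per-value version only and omits the sum over grid-space and spectral terms described above.

```python
from itertools import combinations
from typing import Sequence


def ensemble_crps(members: Sequence[float], observation: float) -> float:
    """Unbiased ('fair') ensemble estimate of CRPS for a scalar observation.

    CRPS = E|X - y| - 0.5 * E|X - X'|, with X, X' independent ensemble draws.
    Averaging the spread term over distinct member pairs only is what makes
    the estimate unbiased, even for an ensemble of two.
    """
    m = len(members)
    if m < 2:
        raise ValueError("need at least two members for the spread term")
    skill = sum(abs(x - observation) for x in members) / m  # accuracy term
    pairs = list(combinations(members, 2))
    spread = sum(abs(a - b) for a, b in pairs) / len(pairs)  # spread term
    return skill - 0.5 * spread
```

With $M = 2$ the spread term reduces to $\tfrac{1}{2}|x_1 - x_2|$, which is why two members already give an unbiased estimate.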

Data availability

For training and evaluating the NeuralGCM models, we used the publicly available ERA5 dataset 14, originally downloaded from https://cds.climate.copernicus.eu/ and available via Google Cloud Storage in Zarr format at gs://gcp-public-data-arco-era5/ar/full_37-1h-0p25deg-chunk-1.zarr-v3. To compare NeuralGCM with operational and data-driven weather models, we used forecast datasets distributed as part of WeatherBench2 12 at https://weatherbench2.readthedocs.io/en/latest/data-guide.html , to which we have added NeuralGCM forecasts for 2020. To compare NeuralGCM with atmospheric models in climate settings, we used CMIP6 data available at https://catalog.pangeo.io/browse/master/climate/ , as well as X-SHiELD 24 outputs available on Google Cloud Storage in a ‘requester pays’ bucket at gs://ai2cm-public-requester-pays/C3072-to-C384-res-diagnostics. The Radiosonde Observation Correction using Reanalyses (RAOBCORE) V1.9 dataset, which was used as the reference for tropical temperature trends, was downloaded from https://webdata.wolke.img.univie.ac.at/haimberger/v1.9/ . Base maps use freely available data from https://www.naturalearthdata.com/downloads/ .

Code availability

The NeuralGCM code base is separated into two open-source projects, Dinosaur and NeuralGCM, both publicly available on GitHub at https://github.com/google-research/dinosaur (ref. 50) and https://github.com/google-research/neuralgcm (ref. 51). The Dinosaur package implements the differentiable dynamical core used by NeuralGCM, whereas the NeuralGCM package provides machine-learning models and checkpoints of trained models. Evaluation code for NeuralGCM weather forecasts is included in WeatherBench2 12, available at https://github.com/google-research/weatherbench2 (ref. 52).

1. Bauer, P., Thorpe, A. & Brunet, G. The quiet revolution of numerical weather prediction. Nature 525, 47–55 (2015).

2. Balaji, V. et al. Are general circulation models obsolete? Proc. Natl Acad. Sci. USA 119, e2202075119 (2022).

3. Lam, R. et al. Learning skillful medium-range global weather forecasting. Science 382, 1416–1421 (2023).

4. Bi, K. et al. Accurate medium-range global weather forecasting with 3D neural networks. Nature 619, 533–538 (2023).

5. Hourdin, F. et al. The art and science of climate model tuning. Bull. Am. Meteorol. Soc. 98, 589–602 (2017).

6. Bony, S. & Dufresne, J.-L. Marine boundary layer clouds at the heart of tropical cloud feedback uncertainties in climate models. Geophys. Res. Lett. 32, L20806 (2005).

7. Webb, M. J., Lambert, F. H. & Gregory, J. M. Origins of differences in climate sensitivity, forcing and feedback in climate models. Clim. Dyn. 40, 677–707 (2013).

8. Sherwood, S. C., Bony, S. & Dufresne, J.-L. Spread in model climate sensitivity traced to atmospheric convective mixing. Nature 505, 37–42 (2014).

9. Palmer, T. & Stevens, B. The scientific challenge of understanding and estimating climate change. Proc. Natl Acad. Sci. USA 116, 24390–24395 (2019).

10. Fischer, E. M., Beyerle, U. & Knutti, R. Robust spatially aggregated projections of climate extremes. Nat. Clim. Change 3, 1033–1038 (2013).

11. Field, C. B. Managing the Risks of Extreme Events and Disasters to Advance Climate Change Adaptation: Special Report of the Intergovernmental Panel on Climate Change (Cambridge Univ. Press, 2012).

12. Rasp, S. et al. WeatherBench 2: A benchmark for the next generation of data-driven global weather models. J. Adv. Model. Earth Syst. 16, e2023MS004019 (2024).

13. Keisler, R. Forecasting global weather with graph neural networks. Preprint at https://arxiv.org/abs/2202.07575 (2022).

14. Hersbach, H. et al. The ERA5 global reanalysis. Q. J. R. Meteorol. Soc. 146, 1999–2049 (2020).

15. Zhou, L. et al. Toward convective-scale prediction within the next generation global prediction system. Bull. Am. Meteorol. Soc. 100, 1225–1243 (2019).

16. Bonavita, M. On some limitations of current machine learning weather prediction models. Geophys. Res. Lett. 51, e2023GL107377 (2024).

17. Weyn, J. A., Durran, D. R. & Caruana, R. Improving data-driven global weather prediction using deep convolutional neural networks on a cubed sphere. J. Adv. Model. Earth Syst. 12, e2020MS002109 (2020).

18. Watt-Meyer, O. et al. ACE: a fast, skillful learned global atmospheric model for climate prediction. Preprint at https://arxiv.org/abs/2310.02074 (2023).

19. Bretherton, C. S. Old dog, new trick: reservoir computing advances machine learning for climate modeling. Geophys. Res. Lett. 50, e2023GL104174 (2023).

20. Reichstein, M. et al. Deep learning and process understanding for data-driven Earth system science. Nature 566, 195–204 (2019).

21. Brenowitz, N. D. & Bretherton, C. S. Spatially extended tests of a neural network parametrization trained by coarse-graining. J. Adv. Model. Earth Syst. 11, 2728–2744 (2019).

22. Rasp, S., Pritchard, M. S. & Gentine, P. Deep learning to represent subgrid processes in climate models. Proc. Natl Acad. Sci. USA 115, 9684–9689 (2018).

23. Yuval, J. & O’Gorman, P. A. Stable machine-learning parameterization of subgrid processes for climate modeling at a range of resolutions. Nat. Commun. 11, 3295 (2020).

24. Kwa, A. et al. Machine-learned climate model corrections from a global storm-resolving model: performance across the annual cycle. J. Adv. Model. Earth Syst. 15, e2022MS003400 (2023).

25. Arcomano, T., Szunyogh, I., Wikner, A., Hunt, B. R. & Ott, E. A hybrid atmospheric model incorporating machine learning can capture dynamical processes not captured by its physics-based component. Geophys. Res. Lett. 50, e2022GL102649 (2023).

26. Han, Y., Zhang, G. J. & Wang, Y. An ensemble of neural networks for moist physics processes, its generalizability and stable integration. J. Adv. Model. Earth Syst. 15, e2022MS003508 (2023).

27. Gelbrecht, M., White, A., Bathiany, S. & Boers, N. Differentiable programming for Earth system modeling. Geosci. Model Dev. 16, 3123–3135 (2023).

28. Gneiting, T. & Raftery, A. E. Strictly proper scoring rules, prediction, and estimation. J. Am. Stat. Assoc. 102, 359–378 (2007).

29. Fortin, V., Abaza, M., Anctil, F. & Turcotte, R. Why should ensemble spread match the RMSE of the ensemble mean? J. Hydrometeorol. 15, 1708–1713 (2014).

30. Holton, J. R. An Introduction to Dynamic Meteorology 5th edn (Elsevier, 2004).

31. Cheng, K.-Y. et al. Impact of warmer sea surface temperature on the global pattern of intense convection: insights from a global storm resolving model. Geophys. Res. Lett. 49, e2022GL099796 (2022).

32. Stevens, B. et al. DYAMOND: the dynamics of the atmospheric general circulation modeled on non-hydrostatic domains. Prog. Earth Planet. Sci. 6, 61 (2019).

33. Ullrich, P. A. et al. TempestExtremes v2.1: a community framework for feature detection, tracking, and analysis in large datasets. Geosci. Model Dev. 14, 5023–5048 (2021).

34. Eyring, V. et al. Overview of the Coupled Model Intercomparison Project Phase 6 (CMIP6) experimental design and organization. Geosci. Model Dev. 9, 1937–1958 (2016).

35. Mitchell, D. M., Lo, Y. E., Seviour, W. J., Haimberger, L. & Polvani, L. M. The vertical profile of recent tropical temperature trends: persistent model biases in the context of internal variability. Environ. Res. Lett. 15, 1040b4 (2020).

36. Bourke, W. A multi-level spectral model. I. Formulation and hemispheric integrations. Mon. Weather Rev. 102, 687–701 (1974).

37. Ruiz, J. J., Pulido, M. & Miyoshi, T. Estimating model parameters with ensemble-based data assimilation: a review. J. Meteorol. Soc. Jpn Ser. II 91, 79–99 (2013).

38. Schneider, T., Lan, S., Stuart, A. & Teixeira, J. Earth system modeling 2.0: a blueprint for models that learn from observations and targeted high-resolution simulations. Geophys. Res. Lett. 44, 12396–12417 (2017).

39. Schneider, T., Leung, L. R. & Wills, R. C. J. Opinion: Optimizing climate models with process knowledge, resolution, and artificial intelligence. Atmos. Chem. Phys. 24, 7041–7062 (2024).

40. Sutskever, I., Vinyals, O. & Le, Q. V. Sequence to sequence learning with neural networks. Adv. Neural Inf. Process. Syst. 27, 3104–3112 (2014).

41. Haimberger, L., Tavolato, C. & Sperka, S. Toward elimination of the warm bias in historic radiosonde temperature records—some new results from a comprehensive intercomparison of upper-air data. J. Clim. 21, 4587–4606 (2008).

42. Bradbury, J. et al. JAX: composable transformations of Python+NumPy programs. GitHub http://github.com/google/jax (2018).

43. Durran, D. R. Numerical Methods for Fluid Dynamics: With Applications to Geophysics Vol. 32, 2nd edn (Springer, 2010).

44. Wang, P., Yuval, J. & O’Gorman, P. A. Non-local parameterization of atmospheric subgrid processes with neural networks. J. Adv. Model. Earth Syst. 14, e2022MS002984 (2022).

45. Daley, R. Normal mode initialization. Rev. Geophys. 19, 450–468 (1981).

46. Whitaker, J. S. & Kar, S. K. Implicit–explicit Runge–Kutta methods for fast–slow wave problems. Mon. Weather Rev. 141, 3426–3434 (2013).

47. Gilleland, E., Ahijevych, D., Brown, B. G., Casati, B. & Ebert, E. E. Intercomparison of spatial forecast verification methods. Weather Forecast. 24, 1416–1430 (2009).

48. Rasp, S. & Lerch, S. Neural networks for postprocessing ensemble weather forecasts. Mon. Weather Rev. 146, 3885–3900 (2018).

49. Pacchiardi, L., Adewoyin, R., Dueben, P. & Dutta, R. Probabilistic forecasting with generative networks via scoring rule minimization. J. Mach. Learn. Res. 25, 1–64 (2024).

50. Smith, J. A., Kochkov, D., Norgaard, P., Yuval, J. & Hoyer, S. google-research/dinosaur: 1.0.0. Zenodo https://doi.org/10.5281/zenodo.11376145 (2024).

51. Kochkov, D. et al. google-research/neuralgcm: 1.0.0. Zenodo https://doi.org/10.5281/zenodo.11376143 (2024).

52. Rasp, S. et al. google-research/weatherbench2: v0.2.0. Zenodo https://doi.org/10.5281/zenodo.11376271 (2023).

Acknowledgements

We thank A. Kwa, A. Merose and K. Shah for assistance with data acquisition and handling; L. Zepeda-Núñez for feedback on the paper; and J. Anderson, C. Van Arsdale, R. Chemke, G. Dresdner, J. Gilmer, J. Hickey, N. Lutsko, G. Nearing, A. Paszke, J. Platt, S. Ponda, M. Pritchard, D. Rothenberg, F. Sha, T. Schneider and O. Voicu for discussions.

Author information

These authors contributed equally: Dmitrii Kochkov, Janni Yuval, Ian Langmore, Peter Norgaard, Jamie Smith, Stephan Hoyer

Authors and Affiliations

Google Research, Mountain View, CA, USA

Dmitrii Kochkov, Janni Yuval, Ian Langmore, Peter Norgaard, Jamie Smith, Griffin Mooers, James Lottes, Stephan Rasp, Michael P. Brenner & Stephan Hoyer

Earth, Atmospheric and Planetary Sciences, Massachusetts Institute of Technology, Cambridge, MA, USA

Milan Klöwer

European Centre for Medium-Range Weather Forecasts, Reading, UK

Peter Düben & Sam Hatfield

Google DeepMind, London, UK

Peter Battaglia, Alvaro Sanchez-Gonzalez & Matthew Willson

School of Engineering and Applied Sciences, Harvard University, Cambridge, MA, USA

Michael P. Brenner

Contributions

D.K., J.Y., I.L., P.N., J.S. and S. Hoyer contributed equally to this work. D.K., J.Y., I.L., P.N., J.S., G.M., J.L. and S. Hoyer wrote the code. D.K., J.Y., I.L., P.N., G.M. and S. Hoyer trained models and analysed the data. M.P.B. and S. Hoyer managed and oversaw the research project. M.K., S.R., P.D., S. Hatfield, P.B. and M.P.B. contributed technical advice and ideas. M.W. ran experiments with GraphCast for comparison with NeuralGCM. A.S.-G. assisted with data preparation. D.K., J.Y., I.L., P.N. and S. Hoyer wrote the paper. All authors gave feedback and contributed to editing the paper.

Corresponding authors

Correspondence to Dmitrii Kochkov, Janni Yuval or Stephan Hoyer.

Ethics declarations

Competing interests

D.K., J.Y., I.L., P.N., J.S., J.L., S.R., P.B., A.S.-G., M.W., M.P.B. and S. Hoyer are employees of Google. S. Hoyer, D.K., I.L., J.Y., G.M., P.N., J.S. and M.B. have filed international patent application PCT/US2023/035420 in the name of Google LLC, currently pending, relating to neural general circulation models.

Peer review

Peer review information

Nature thanks Karthik Kashinath and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Peer reviewer reports are available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data figures and tables

Extended Data Fig. 1 Maps of bias for NeuralGCM-ENS and ECMWF-ENS forecasts.

Bias is averaged over all forecasts initialized in 2020.

Extended Data Fig. 2 Maps of spread-skill ratio for NeuralGCM-ENS and ECMWF-ENS forecasts.

Spread-skill ratio is averaged over all forecasts initialized in 2020.

Extended Data Fig. 3 Geostrophic balance in NeuralGCM, GraphCast 3 and ECMWF-HRES.

Vertical profiles of extratropical intensity (averaged over latitudes 30°–70° in both hemispheres and over all forecasts initialized in 2020) of (a,d,g) geostrophic wind, (b,e,h) ageostrophic wind and (c,f,i) the ratio of ageostrophic to geostrophic wind intensity, for ERA5 (black solid line in all panels), (a,b,c) NeuralGCM-0.7°, (d,e,f) GraphCast and (g,h,i) ECMWF-HRES at lead times of 1 day, 5 days and 10 days.
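For readers less familiar with this diagnostic, the geostrophic wind follows from horizontal gradients of geopotential height $Z$: $u_g = -(g/f)\,\partial Z/\partial y$ and $v_g = (g/f)\,\partial Z/\partial x$, with $f = 2\Omega \sin\phi$; the ageostrophic wind is the remainder of the full wind. The sketch below uses centred differences on a local Cartesian grid (f-plane) and measures 'intensity' as mean wind speed; both choices are assumptions, and the exact definitions used for this figure may differ. It is only meaningful away from the equator, where $f$ vanishes.

```python
import math
from typing import List, Sequence, Tuple

OMEGA = 7.292e-5   # Earth's rotation rate, rad/s
G = 9.80665        # standard gravitational acceleration, m/s^2


def coriolis(lat_deg: float) -> float:
    """Coriolis parameter f = 2 * Omega * sin(latitude)."""
    return 2.0 * OMEGA * math.sin(math.radians(lat_deg))


def geostrophic_wind(z: List[List[float]], dx: float, dy: float,
                     lat_deg: float) -> Tuple[List[List[float]], List[List[float]]]:
    """Geostrophic (u, v) at interior points of a height field z[j][i] in metres.

    Boundary points are left at zero; j increases northward, i eastward.
    """
    f = coriolis(lat_deg)
    ny, nx = len(z), len(z[0])
    u = [[0.0] * nx for _ in range(ny)]
    v = [[0.0] * nx for _ in range(ny)]
    for j in range(1, ny - 1):
        for i in range(1, nx - 1):
            dzdx = (z[j][i + 1] - z[j][i - 1]) / (2 * dx)
            dzdy = (z[j + 1][i] - z[j - 1][i]) / (2 * dy)
            u[j][i] = -G / f * dzdy
            v[j][i] = G / f * dzdx
    return u, v


def ageostrophic_ratio(u: Sequence[float], ug: Sequence[float],
                       v: Sequence[float], vg: Sequence[float]) -> float:
    """Mean ageostrophic wind speed divided by mean geostrophic wind speed."""
    ag = [math.hypot(a - b, c - d) for a, b, c, d in zip(u, ug, v, vg)]
    geo = [math.hypot(b, d) for b, d in zip(ug, vg)]
    return (sum(ag) / len(ag)) / (sum(geo) / len(geo))
```

Heights that fall toward the pole produce a westerly geostrophic wind in the Northern Hemisphere, which is a quick sanity check on the signs.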

Extended Data Fig. 4 Precipitation minus evaporation calculated from the third day of weather forecasts.

(a) Tropical (latitudes −20° to 20°) precipitation minus evaporation (P minus E) rate distribution, (b) extratropical (latitudes 30° to 70° in both hemispheres) P minus E rate distribution, (c) mean P minus E for 2020 from ERA5 14 and (d) from NeuralGCM-0.7° (calculated from the third day of forecasts and averaged over all forecasts initialized in 2020), (e) the bias between NeuralGCM-0.7° and ERA5, and (f,g) snapshots of daily P minus E for 2020-01-04 from (f) NeuralGCM-0.7° (forecast initialized on 2020-01-02) and (g) ERA5.

Extended Data Fig. 5 Indirect comparison between precipitation bias in X-SHiELD and precipitation minus evaporation bias in NeuralGCM-1.4°.

Mean precipitation calculated between 2020-01-19 and 2021-01-17 for (a) ERA5 14 and (c) X-SHiELD 31, and the biases in (e) X-SHiELD and (g) climatology (ERA5 data averaged over 1990–2019). Mean precipitation minus evaporation calculated between 2020-01-19 and 2021-01-17 for (b) ERA5 and (d) NeuralGCM-1.4° (initialized on 18 October 2019), and the biases in (f) NeuralGCM-1.4° and (h) climatology (data averaged over 1990–2019).

Extended Data Fig. 6 Yearly temperature bias for NeuralGCM and X-SHiELD 31 .

Mean temperature between 2020-01-19 and 2021-01-17 for ERA5 at (a) 200 hPa and (b) 850 hPa. (c,d) The temperature bias for NeuralGCM-1.4°, (e,f) the bias in X-SHiELD and (g,h) the bias in climatology (calculated from 1990–2019). NeuralGCM-1.4° was initialized on 18 October 2019 (similar to X-SHiELD).

Extended Data Fig. 7 Tropical cyclone densities and annual regional counts.

(a) Tropical cyclone (TC) density from ERA5 14 data spanning 1987–2020. (b) TC density from NeuralGCM-1.4° for 2020, generated using 34 different initial conditions, all initialized in 2019. (c) Box plot of the annual number of TCs across different regions, based on ERA5 data (1987–2020) and NeuralGCM-1.4° for 2020 (34 initial conditions); orange markers show ERA5 for 2020. In the box plots, the red line represents the median, the box delineates the first to third quartiles, the whiskers extend to 1.5 times the interquartile range (Q1 − 1.5IQR and Q3 + 1.5IQR), and outliers are shown as individual dots. Each year is defined from January 19th to January 17th of the following year, aligning with data availability from X-SHiELD. For NeuralGCM simulations, the 3 initial conditions starting in January 2019 exclude data for January 17th, 2021, as these runs spanned only two years.

Extended Data Fig. 8 Tropical cyclone maximum wind distribution in NeuralGCM vs. ERA5 14.

Number of tropical cyclones (TCs) as a function of maximum wind speed at 850 hPa across different regions, based on ERA5 data (1987–2020; in orange) and NeuralGCM-1.4° for 2020 (34 initial conditions; in blue). Each year is defined from January 19th to January 17th of the following year, aligning with data availability from X-SHiELD. For NeuralGCM simulations, the 3 initial conditions starting in January 2019 exclude data for January 17th, 2021, as these runs spanned only two years.

Supplementary information

Supplementary Information (38 figures, 6 tables): (A) Lines of code in atmospheric models; (B) Dynamical core of NeuralGCM; (C) Learned physics of NeuralGCM; (D) Encoder and decoder of NeuralGCM; (E) Time integration; (F) Evaluation metrics; (G) Training; (H) Additional weather evaluations; (I) Additional climate evaluations.

Peer Review File

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ .

About this article

Cite this article

Kochkov, D., Yuval, J., Langmore, I. et al. Neural general circulation models for weather and climate. Nature 632 , 1060–1066 (2024). https://doi.org/10.1038/s41586-024-07744-y

Received : 13 November 2023

Accepted : 15 June 2024

Published : 22 July 2024

Issue Date : 29 August 2024

DOI : https://doi.org/10.1038/s41586-024-07744-y

This article is cited by

Google AI predicts long-term climate trends and weather — in minutes

- Helena Kudiabor

Nature (2024)

Weather and climate predicted accurately — without using a supercomputer

- Oliver Watt-Meyer

Software Engineering Institute

SEI Digital Library

Latest Publications

Embracing AI: Unlocking Scalability and Transformation through Generative Text, Imagery, and Synthetic Audio

August 28, 2024 • Webcast, by Tyler Brooks, Shannon Gallagher, Dominic A. Ross

In this webcast, Tyler Brooks, Shannon Gallagher, and Dominic Ross aim to demystify AI and illustrate its transformative power in achieving scalability, adapting to changing landscapes, and driving digital innovation.

Counter AI: What Is It and What Can You Do About It?

August 27, 2024 • White Paper, by Nathan M. VanHoudnos, Carol J. Smith, Matt Churilla, Shing-hon Lau, Lauren McIlvenny, Greg Touhill

This paper describes counter artificial intelligence (AI) and provides recommendations on what can be done about it.

Using Quality Attribute Scenarios for ML Model Test Case Generation

August 27, 2024 • Conference Paper, by Rachel Brower-Sinning, Grace Lewis, Sebastián Echeverría, Ipek Ozkaya

This paper presents an approach based on quality attribute (QA) scenarios to elicit and define system- and model-relevant test cases for ML models.

3 API Security Risks (and How to Protect Against Them)

August 27, 2024 • Podcast, by McKinley Sconiers-Hasan

McKinley Sconiers-Hasan discusses three API risks and how to address them through the lens of zero trust.

Lessons Learned in Coordinated Disclosure for Artificial Intelligence and Machine Learning Systems

August 20, 2024 • White Paper, by Allen D. Householder, Vijay S. Sarvepalli, Jeff Havrilla, Matt Churilla, Lena Pons, Shing-hon Lau, Nathan M. VanHoudnos, Andrew Kompanek, Lauren McIlvenny

In this paper, the authors describe lessons learned from coordinating AI and ML vulnerabilities at the SEI's CERT/CC.

On the Design, Development, and Testing of Modern APIs

July 30, 2024 • White Paper, by Alejandro Gomez, Alex Vesey

This white paper discusses the design, desired qualities, development, testing, support, and security of modern application programming interfaces (APIs).

Evaluating Large Language Models for Cybersecurity Tasks: Challenges and Best Practices

July 26, 2024 • Podcast, by Jeff Gennari, Samuel J. Perl

Jeff Gennari and Sam Perl discuss applications for LLMs in cybersecurity, potential challenges, and recommendations for evaluating LLMs.

Capability-based Planning for Early-Stage Software Development

July 24, 2024 • Podcast, by Anandi Hira, Bill Nichols

This SEI podcast introduces capability-based planning (CBP) and its use and application in software acquisition.

A Model Problem for Assurance Research: An Autonomous Humanitarian Mission Scenario

July 23, 2024 • Technical Note, by Gabriel Moreno, Anton Hristozov, John E. Robert, Mark H. Klein

This report describes a model problem to support research in large-scale assurance.

Safeguarding Against Recent Vulnerabilities Related to Rust

June 28, 2024 • Podcast, by David Svoboda

David Svoboda discusses two vulnerabilities related to Rust, their sources, and how to mitigate them.

Kilometer-Scale Convection Allowing Model Emulation using Generative Diffusion Modeling

Storm-scale convection-allowing models (CAMs) are an important tool for predicting the evolution of thunderstorms and mesoscale convective systems that result in damaging extreme weather. By explicitly resolving convective dynamics within the atmosphere they afford meteorologists the nuance needed to provide outlook on hazard. Deep learning models have thus far not proven skilful at km-scale atmospheric simulation, despite being competitive at coarser resolution with state-of-the-art global, medium-range weather forecasting. We present a generative diffusion model called StormCast, which emulates the high-resolution rapid refresh (HRRR) model—NOAA’s state-of-the-art 3km operational CAM. StormCast autoregressively predicts 99 state variables at km scale using a 1-hour time step, with dense vertical resolution in the atmospheric boundary layer, conditioned on 26 synoptic variables. We present evidence of successfully learnt km-scale dynamics including competitive 1-6 hour forecast skill for composite radar reflectivity alongside physically realistic convective cluster evolution, moist updrafts, and cold pool morphology. StormCast predictions maintain realistic power spectra for multiple predicted variables across multi-hour forecasts. Together, these results establish the potential for autoregressive ML to emulate CAMs – opening up new km-scale frontiers for regional ML weather prediction and future climate hazard dynamical downscaling.

Predicting economic trends and stock market prices with deep learning and advanced machine learning techniques.

1. Introduction

2. Theoretical Background

2.1. Review of the LSTM and GRU Architecture

LSTM and GRU Networks

2.2. Attention Mechanism

2.3. Time Series Forecasting Methods

2.3.1. Autoregressive Integrated Moving Averages (ARIMAs)

2.3.2. XGBoost (Extreme Gradient Boosting)

2.3.3. Facebook Prophet

2.4. Other Recent Advancements in the Area

3. Preliminary Considerations and Development of the Approach

3.1. Historical Context and Progression

3.2. Data Collection, Exploration, and Preparation

3.2.1. Train and Test Split

3.2.2. Data Shaping for LSTM and GRU Models

3.3. Preprocessing and Normalization

3.4. Model Evaluation

3.5. The Architectural Diagram

4. Numerical Results

4.1. Data Preprocessing and Exploratory Analysis

4.2. Hyperparameter Selection Process

- Units: Each LSTM and GRU model has 128 units in its first recurrent layer and 64 units in its second.

- Batch size: For tuning the model, the batch size is set to 1.

- Learning rate: The learning rate of the Adam optimizer is set at 0.1.

- Dropout layer: A model often performs well on its training data but fails to replicate that performance on validation and test data. This gap, usually a sign of overfitting, is a major concern for deep learning models, which need substantial amounts of training data. Dropout is a simple but effective regularization strategy that mitigates overfitting: during training, a random fraction of the recurrent network's units is zeroed at each update. A dropout rate of about 0.2 is used.
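The architecture settings above can be checked with a little arithmetic. The sketch below counts trainable weights in the two stacked recurrent layers (128 then 64 units) using the standard gate formulas, and shows inverted dropout at the stated rate of 0.2. The single input feature (`INPUT_DIM = 1`) is a hypothetical assumption, since the input width is not given here, and the helper names are illustrative, not the authors' code. Counts assume one bias vector per gate; frameworks differ (PyTorch, for example, keeps two bias vectors per gate), so library-reported totals can be slightly higher.

```python
import random


def lstm_params(input_dim: int, units: int) -> int:
    """Weights in one LSTM layer: 4 gates, each with input weights,
    recurrent weights and one bias vector."""
    return 4 * ((input_dim + units) * units + units)


def gru_params(input_dim: int, units: int) -> int:
    """Weights in one GRU layer: 3 gates, one bias vector per gate
    (the original Cho et al. formulation)."""
    return 3 * ((input_dim + units) * units + units)


def stacked_params(layer_fn, input_dim: int, layer_units: list[int]) -> list[int]:
    """Per-layer counts for a stack; each layer's input is the previous layer's output."""
    counts = []
    for units in layer_units:
        counts.append(layer_fn(input_dim, units))
        input_dim = units
    return counts


def inverted_dropout(activations: list[float], rate: float,
                     rng: random.Random) -> list[float]:
    """Training-time dropout: zero each activation with probability `rate`
    and rescale survivors by 1 / (1 - rate) so the expected value is unchanged.
    At inference the activations are passed through untouched."""
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


INPUT_DIM = 1         # hypothetical: one feature per time step (e.g. closing price)
LAYERS = [128, 64]    # first and second recurrent layers, as stated above

lstm_counts = stacked_params(lstm_params, INPUT_DIM, LAYERS)
gru_counts = stacked_params(gru_params, INPUT_DIM, LAYERS)
print("LSTM:", lstm_counts, sum(lstm_counts))  # → LSTM: [66560, 49408] 115968
print("GRU:", gru_counts, sum(gru_counts))     # → GRU: [49920, 37056] 86976

rng = random.Random(0)
print(inverted_dropout([1.0] * 8, rate=0.2, rng=rng))
```

Under these assumptions the GRU stack has exactly three quarters of the LSTM stack's recurrent weights, because it has three gates instead of four; any dense output layer would add to both totals.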

4.3. Results of the Models

4.3.1. Apple Stock Prediction

4.3.2. Google Stock Prediction

4.3.3. Microsoft Stock Prediction

4.3.4. Amazon Stock Prediction

4.4. Predicted Risk–Return Tradeoff

5. Additional Experiments and Validations

5.1. Performance of Four Selected Models on Apple Stock

5.2. Performance of Four Selected Models on Amazon Stock

5.3. Performance of Four Selected Models on Google Stock

5.4. Performance of Four Selected Models on Microsoft Stock

5.5. Discussion: Forecasting Accuracy

5.6. Implications of This Research

6. Discussions

6.1. Contributions

6.2. Limitations of the Study

6.3. Future Research

7. Conclusions

Author Contributions

Data Availability Statement

Acknowledgments

Conflicts of Interest

| | OPEN | HIGH | LOW | CLOSE | ADJ CLOSE | VOLUME |
|---|---|---|---|---|---|---|
| COUNT | 2268 | 2268 | 2268 | 2268 | 2268 | 2268 |
| MEAN | 57.2297 | 57.84394 | 56.63649 | 57.2662 | 55.63746 | 166,807,034 |
| STD | 44.14435 | 44.68723 | 43.6103 | 44.17467 | 44.78484 | 107,981,557 |
| MIN | 13.97714 | 14.29536 | 13.88821 | 14.06357 | 12.30091 | 41,000,000 |
| 25% | 27.14937 | 27.35375 | 26.86813 | 27.14625 | 24.96922 | 95,148,700 |
| 50% | 39.47 | 39.845 | 39.02875 | 39.36125 | 37.76188 | 133,553,600 |
| 75% | 68.54375 | 69.69688 | 67.44 | 68.765 | 67.7272 | 202,644,200 |
| MAX | 182.63 | 182.94 | 179.12 | 182.01 | 181.7784 | 1,065,523,200 |

| | OPEN | HIGH | LOW | CLOSE | ADJ CLOSE | VOLUME |
|---|---|---|---|---|---|---|
| COUNT | 2268 | 2268 | 2268 | 2268 | 2268 | 2268 |
| MEAN | 1139.757 | 1150.551 | 1128.898 | 1139.985 | 1139.985 | 1,932,454 |
| STD | 674.3742 | 681.2976 | 667.1945 | 674.1677 | 674.1677 | 1,261,224 |
| MIN | 398.8052 | 400.4789 | 386.053 | 398.5611 | 398.5611 | 7,922 |
| 25% | 607.7186 | 611.8685 | 602.0832 | 607.2103 | 607.2103 | 1,222,225 |
| 50% | 986.725 | 990.855 | 976.655 | 984.065 | 984.065 | 1,557,583 |
| 75% | 1307.397 | 1319.705 | 1304.069 | 1313.13 | 1313.13 | 2,191,964 |
| MAX | 3037.27 | 3042 | 2997.75 | 3014.18 | 3014.18 | 23,219,507 |

| | OPEN | HIGH | LOW | CLOSE | ADJ CLOSE | VOLUME |
|---|---|---|---|---|---|---|
| COUNT | 2268 | 2268 | 2268 | 2268 | 2268 | 2268 |
| MEAN | 114.5614 | 115.6476 | 113.4146 | 114.5935 | 110.6238 | 31,954,477.16 |
| STD | 84.94871 | 85.80088 | 84.00433 | 84.94511 | 86.28801 | 16,886,826.19 |
| MIN | 30.3 | 30.9 | 30.27 | 30.6 | 25.62621 | 7,425,600 |
| 25% | 47.1775 | 47.6675 | 46.695 | 47.26 | 41.86476 | 22,205,600 |
| 50% | 77.63 | 77.9 | 77.36 | 77.78 | 73.41168 | 28,010,550 |
| 75% | 157.185 | 158.8025 | 156.0725 | 157.6175 | 154.3496 | 36,433,425 |
| MAX | 344.62 | 349.67 | 342.2 | 343.11 | 342.402 | 248,428,500 |

| | OPEN | HIGH | LOW | CLOSE | ADJ CLOSE | VOLUME |
|---|---|---|---|---|---|---|
| COUNT | 2268 | 2268 | 2268 | 2268 | 2268 | 2268 |
| MEAN | 1454.869 | 1470.281 | 1437.496 | 1454.153 | 1454.153 | 4,047,869 |
| STD | 1075.819 | 1088.213 | 1061.821 | 1074.703 | 1074.703 | 2,153,351 |
| MIN | 248.94 | 252.93 | 245.75 | 248.23 | 248.23 | 881,300 |
| 25% | 482.5175 | 489.3 | 474.9075 | 482.1525 | 482.1525 | 2,686,300 |
| 50% | 1023.14 | 1032.22 | 1016.75 | 1026.27 | 1026.27 | 3,464,050 |
| 75% | 1949 | 1975.377 | 1931.703 | 1954.335 | 1954.335 | 4,693,025 |
| MAX | 3744 | 3773.08 | 3696.79 | 3731.41 | 3731.41 | 23,856,100 |
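The row labels in these tables (COUNT, MEAN, STD, MIN, 25%, 50%, 75%, MAX) match the layout of pandas' `DataFrame.describe()`. Assuming that convention, which the source does not state, the sketch below reproduces the rows with Python's standard library: STD is the sample standard deviation (n - 1 denominator) and the quartiles are linearly interpolated between sorted values. The `describe` helper is illustrative, and the closing prices are made up for the example, not taken from the study.

```python
import statistics


def describe(values: list[float]) -> dict[str, float]:
    """Summary rows in the layout of the tables above (COUNT ... MAX).

    Assumes the sample standard deviation (n - 1 denominator) and quartiles
    by linear interpolation between order statistics ("inclusive" method),
    the defaults pandas' DataFrame.describe() uses.
    """
    q1, q2, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return {
        "COUNT": len(values),
        "MEAN": statistics.fmean(values),
        "STD": statistics.stdev(values),
        "MIN": min(values),
        "25%": q1,
        "50%": q2,
        "75%": q3,
        "MAX": max(values),
    }


# Hypothetical closing prices, for illustration only (not data from the study).
closes = [10.0, 12.0, 11.0, 15.0, 14.0]
for row, value in describe(closes).items():
    print(f"{row:>5} | {value:.4f}")
```

The sample standard deviation is slightly larger than the population version; with 2,268 daily observations per stock the difference is negligible, but it matters for short windows.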
