T Test (Student’s T-Test): Definition and Examples

T Test: Contents

- What is a T Test?

- The T Score

- T Values and P Values

- Calculating the T Test

- What is a Paired T Test (Paired Samples T Test)?

What is a T test?

The t test tells you how significant the differences between group means are. It lets you know if those differences in means could have happened by chance. The t test is usually used when data sets follow a normal distribution but you don’t know the population variance.

For example, you might flip a coin 1,000 times and find the number of heads follows a normal distribution for all trials. So you can calculate the sample variance from this data, but the population variance is unknown. Or, a drug company may want to test a new cancer drug to find out if it improves life expectancy. In such an experiment, there is typically a control group (a group who are given a placebo, or “sugar pill”). So while the control group may show an average life expectancy of +5 years, the group taking the new drug might have a life expectancy of +6 years. It would seem that the drug might work, but the difference could be due to a fluke. To test this, researchers would use a Student’s t-test to find out if the results are repeatable for an entire population.

In addition, a t test uses a t-statistic and compares this to t-distribution values to determine if the results are statistically significant.

However, note that you can only use a t test to compare two means. If you want to compare three or more means, use an ANOVA instead.

The T Score

The t score is a ratio between the difference between two groups and the difference within the groups.

- Larger t scores = more difference between groups.

- Smaller t score = more similarity between groups.

A t score of 3 tells you that the groups are three times as different from each other as they are within each other. So when you run a t test, larger t-values (in absolute value) are stronger evidence that the difference is real and not a fluke.

T-Values and P-values

How big is “big enough”? Every t-value has a p-value to go with it. A p-value from a t test is the probability of seeing results at least as extreme as those in your sample data if the null hypothesis (no real difference) were true. P-values range from 0% to 100% and are usually written as a decimal (for example, a p-value of 5% is 0.05). Low p-values indicate that your data are unlikely to have occurred by chance alone. For example, a p-value of .01 means there is only a 1% probability of getting results this extreme by chance if there were no real difference.

Calculating the Statistic / Test Types

There are three main types of t-test:

- An Independent Samples t-test compares the means for two groups.

- A Paired sample t-test compares means from the same group at different times (say, one year apart).

- A One sample t-test tests the mean of a single group against a known mean.

You can find the steps for an independent samples t test here. But you probably don’t want to calculate the test by hand (the math can get very messy). Use the following tools to calculate the t test:

- How to do a T test in Excel.

- T test in SPSS.

- T-distribution on the TI 89.

- T distribution on the TI 83.

What is a Paired T Test (Paired Samples T Test / Dependent Samples T Test)?

A paired t test (also called a correlated pairs t-test, a paired samples t test or dependent samples t test) is where you run a t test on dependent samples. Dependent samples are essentially connected: they are tests on the same person or thing. For example:

- Knee MRI costs at two different hospitals,

- Two tests on the same person before and after training,

- Two blood pressure measurements on the same person using different equipment.

When to Choose a Paired T Test / Paired Samples T Test / Dependent Samples T Test

Choose the paired t-test if you have two measurements on the same item, person or thing. You should also choose this test if you have two different items that are measured under the same conditions. For example, in vehicle research and testing you might subject cars to a series of crash tests to measure safety performance. Although the manufacturers are different, you are subjecting the cars to the same conditions.

With a “regular” two sample t test, you’re comparing the means for two different samples. For example, you might test two different groups of customer service associates on a business-related test, or test students from two universities on their English skills. If you take a random sample from each group separately and they have different conditions, your samples are independent and you should run an independent samples t test (also called between-samples and unpaired-samples).

The null hypothesis for the independent samples t-test is μ1 = μ2. So it assumes the means are equal. With the paired t test, the null hypothesis is that the mean of the pairwise differences between the two tests is zero (H0: μd = 0).

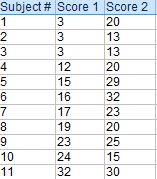

Paired Samples T Test By hand

- The “ΣD” is the sum of X-Y from Step 2.

- ΣD²: Sum of the squared differences (from Step 4).

- (ΣD)²: Sum of the differences (from Step 2), squared.

If you’re unfamiliar with the Σ notation used in the t test, it basically means to “add everything up”. You may find this article useful: summation notation.

Step 6: Subtract 1 from the sample size to get the degrees of freedom. We have 11 items. So 11 – 1 = 10.

Step 7: Find the critical t-value in the t-table, using the degrees of freedom from Step 6 and your alpha level. If you don’t have a specified alpha level, use 0.05 (5%).

So for this example t test problem, with df = 10, the critical t-value is 2.228.

Step 8: Compare your t-table value from Step 7 (2.228) to your calculated t-value (-2.74). In absolute value, the calculated t-value is greater than the table value at an alpha level of .05, which also means that the p-value is less than the alpha level: p < .05. So we can reject the null hypothesis that there is no difference between means.

However, note that you can ignore the minus sign when comparing the two t-values as ± indicates the direction; the p-value remains the same for both directions.
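The sums used in these steps can be turned into a short Python sketch. It assumes the usual sums-based form of the paired statistic, t = (ΣD/n) / sqrt((ΣD² - (ΣD)²/n) / ((n - 1)·n)). The eleven pairs of scores and the function name are illustrative assumptions, chosen so the result lands near the t-value quoted above; they are not data taken from this article.

```python
import math

def paired_t_from_sums(x, y):
    """Paired t statistic computed from the sums ΣD, ΣD² and (ΣD)²."""
    if len(x) != len(y):
        raise ValueError("paired samples must have the same length")
    n = len(x)
    diffs = [a - b for a, b in zip(x, y)]   # D = X - Y for each pair
    sum_d = sum(diffs)                      # ΣD
    sum_d_sq = sum(d * d for d in diffs)    # ΣD²
    # Squared standard error of the mean difference.
    se_squared = (sum_d_sq - sum_d ** 2 / n) / ((n - 1) * n)
    t = (sum_d / n) / math.sqrt(se_squared)
    return t, n - 1                         # statistic, degrees of freedom

# Illustrative scores for 11 subjects (an assumption, not the article's data).
before = [3, 3, 3, 12, 15, 16, 17, 19, 23, 24, 32]
after = [20, 13, 13, 20, 29, 32, 23, 20, 25, 15, 30]

t, df = paired_t_from_sums(before, after)
critical = 2.228                            # t-table value for df = 10, alpha = 0.05
reject_null = abs(t) > critical             # the sign only indicates direction
print(f"t = {t:.2f}, df = {df}, reject H0: {reject_null}")
```

Comparing `abs(t)` with the table value mirrors Step 8: the minus sign is dropped before the comparison.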

T-Test: What It Is With Multiple Formulas and When To Use Them

Read how this calculation can be used for hypothesis testing in statistics

Adam Hayes, Ph.D., CFA, is a financial writer with 15+ years Wall Street experience as a derivatives trader. Besides his extensive derivative trading expertise, Adam is an expert in economics and behavioral finance. Adam received his master's in economics from The New School for Social Research and his Ph.D. from the University of Wisconsin-Madison in sociology. He is a CFA charterholder as well as holding FINRA Series 7, 55 & 63 licenses. He currently researches and teaches economic sociology and the social studies of finance at the Hebrew University in Jerusalem.

A t-test is an inferential statistic used to determine if there is a significant difference between the means of two groups and how they are related. T-tests are used when the data sets follow a normal distribution and have unknown variances, like the data set recorded from flipping a coin 100 times.

The t-test is a test used for hypothesis testing in statistics and uses the t-statistic, the t-distribution values, and the degrees of freedom to determine statistical significance.

Key Takeaways

- A t-test is an inferential statistic used to determine if there is a statistically significant difference between the means of two variables.

- The t-test is a test used for hypothesis testing in statistics.

- Calculating a t-test requires three fundamental data values including the difference between the mean values from each data set, the standard deviation of each group, and the number of data values.

- T-tests can be dependent or independent.

Investopedia / Sabrina Jiang

A t-test compares the average values of two data sets and determines if they came from the same population. In the above examples, a sample of students from class A and a sample of students from class B would not likely have the same mean and standard deviation. Similarly, samples taken from the placebo-fed control group and those taken from the drug prescribed group should have a slightly different mean and standard deviation.

Mathematically, the t-test takes a sample from each of the two sets and establishes the problem statement. It assumes a null hypothesis that the two means are equal.

Using the formulas, values are calculated and compared against the standard values. The null hypothesis is then either rejected or not rejected. If the null hypothesis is rejected, it indicates that the observed difference is strong and probably not due to chance.

The t-test is just one of many tests used for this purpose. Statisticians use additional tests other than the t-test to examine more variables and larger sample sizes. For a large sample size, statisticians use a z-test. Other testing options include the chi-square test and the f-test.

Consider that a drug manufacturer tests a new medicine. Following standard procedure, the drug is given to one group of patients and a placebo to another group called the control group. The placebo is a substance with no therapeutic value and serves as a benchmark to measure how the other group, administered the actual drug, responds.

After the drug trial, the members of the placebo-fed control group reported an increase in average life expectancy of three years, while the members of the group who are prescribed the new drug reported an increase in average life expectancy of four years.

Initial observation indicates that the drug is working. However, it is also possible that the observation may be due to chance. A t-test can be used to determine if the results are correct and applicable to the entire population.

Four assumptions are made while using a t-test: the data collected must follow a continuous or ordinal scale, such as the scores for an IQ test; the data is collected from a randomly selected portion of the total population; the data, when plotted, results in a normal, bell-shaped distribution; and the variance is equal, or homogeneous, meaning the standard deviations of the samples are approximately equal.

T-Test Formula

Calculating a t-test requires three fundamental data values. They include the difference between the mean values from each data set, or the mean difference, the standard deviation of each group, and the number of data values of each group.

This comparison helps to determine the effect of chance on the difference, and whether the difference is outside that chance range. The t-test questions whether the difference between the groups represents a true difference in the study or merely a random difference.

The t-test produces two values as its output: t-value and degrees of freedom. The t-value, or t-score, is a ratio of the difference between the mean of the two sample sets and the variation that exists within the sample sets.

The numerator value is the difference between the mean of the two sample sets. The denominator is the variation that exists within the sample sets and is a measurement of the dispersion or variability.

This calculated t-value is then compared against a value obtained from a critical value table called the T-distribution table. Higher values of the t-score indicate that a large difference exists between the two sample sets. The smaller the t-value, the more similarity exists between the two sample sets.

A large t-score, or t-value, indicates that the groups are different while a small t-score indicates that the groups are similar.

Degrees of freedom refer to the values in a study that have the freedom to vary and are essential for assessing the importance and the validity of the null hypothesis. Computation of these values usually depends upon the number of data records available in the sample set.

Paired Sample T-Test

The correlated t-test, or paired t-test, is a dependent type of test and is performed when the samples consist of matched pairs of similar units, or when there are cases of repeated measures. For example, there may be instances where the same patients are repeatedly tested before and after receiving a particular treatment. Each patient is being used as a control sample against themselves.

This method also applies to cases where the samples are related or have matching characteristics, like a comparative analysis involving children, parents, or siblings.

The formula for computing the t-value and degrees of freedom for a paired t-test is:

$$
\begin{aligned}
&T=\frac{\textit{mean}_1-\textit{mean}_2}{s(\text{diff})/\sqrt{n}}\\
&\textbf{where:}\\
&\textit{mean}_1\text{ and }\textit{mean}_2=\text{The average values of each of the sample sets}\\
&s(\text{diff})=\text{The standard deviation of the differences of the paired data values}\\
&n=\text{The sample size (the number of paired differences)}\\
&n-1=\text{The degrees of freedom}
\end{aligned}
$$

Equal Variance or Pooled T-Test

The equal variance t-test is an independent t-test and is used when the number of samples in each group is the same, or the variance of the two data sets is similar.

The formula used for calculating t-value and degrees of freedom for equal variance t-test is:

$$
\begin{aligned}
&\text{T-value}=\frac{\textit{mean}_1-\textit{mean}_2}{\sqrt{\frac{(n_1-1)\times\textit{var}_1+(n_2-1)\times\textit{var}_2}{n_1+n_2-2}\times\left(\frac{1}{n_1}+\frac{1}{n_2}\right)}}\\
&\textbf{where:}\\
&\textit{mean}_1\text{ and }\textit{mean}_2=\text{Average values of each of the sample sets}\\
&\textit{var}_1\text{ and }\textit{var}_2=\text{Variance of each of the sample sets}\\
&n_1\text{ and }n_2=\text{Number of records in each sample set}
\end{aligned}
$$

$$
\begin{aligned}
&\text{Degrees of Freedom}=n_1+n_2-2\\
&\textbf{where:}\\
&n_1\text{ and }n_2=\text{Number of records in each sample set}
\end{aligned}
$$
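As a rough illustration of the equal variance calculation, the following Python sketch computes the pooled t-value and degrees of freedom with the standard library. The function name and the two small samples are hypothetical, and the sketch assumes that "variance" means the sample variance (n - 1 denominator), which is what `statistics.variance` returns.

```python
import math
import statistics

def pooled_t_test(sample1, sample2):
    """Equal-variance (pooled) t-value and degrees of freedom."""
    n1, n2 = len(sample1), len(sample2)
    mean1, mean2 = statistics.mean(sample1), statistics.mean(sample2)
    # Sample variances (n - 1 in the denominator).
    var1, var2 = statistics.variance(sample1), statistics.variance(sample2)
    df = n1 + n2 - 2
    # Pooled variance: weighted average of the two sample variances.
    pooled_var = ((n1 - 1) * var1 + (n2 - 1) * var2) / df
    t = (mean1 - mean2) / math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return t, df

# Hypothetical measurements, for illustration only.
group_a = [2, 4, 6]
group_b = [1, 2, 3]

t, df = pooled_t_test(group_a, group_b)
print(f"t = {t:.3f}, df = {df}")
```

The pooled variance is computed first and only then multiplied by (1/n1 + 1/n2), which keeps the grouping of terms under the square root explicit.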

Unequal Variance T-Test

The unequal variance t-test is an independent t-test and is used when the number of samples in each group is different, and the variance of the two data sets is also different. This test is also called Welch's t-test.

The formula used for calculating t-value and degrees of freedom for an unequal variance t-test is:

$$
\begin{aligned}
&\text{T-value}=\frac{\textit{mean}_1-\textit{mean}_2}{\sqrt{\frac{\textit{var}_1}{n_1}+\frac{\textit{var}_2}{n_2}}}\\
&\textbf{where:}\\
&\textit{mean}_1\text{ and }\textit{mean}_2=\text{Average values of each of the sample sets}\\
&\textit{var}_1\text{ and }\textit{var}_2=\text{Variance of each of the sample sets}\\
&n_1\text{ and }n_2=\text{Number of records in each sample set}
\end{aligned}
$$

$$
\begin{aligned}
&\text{Degrees of Freedom}=\frac{\left(\frac{\textit{var}_1}{n_1}+\frac{\textit{var}_2}{n_2}\right)^2}{\frac{\left(\frac{\textit{var}_1}{n_1}\right)^2}{n_1-1}+\frac{\left(\frac{\textit{var}_2}{n_2}\right)^2}{n_2-1}}\\
&\textbf{where:}\\
&\textit{var}_1\text{ and }\textit{var}_2=\text{Variance of each of the sample sets}\\
&n_1\text{ and }n_2=\text{Number of records in each sample set}
\end{aligned}
$$

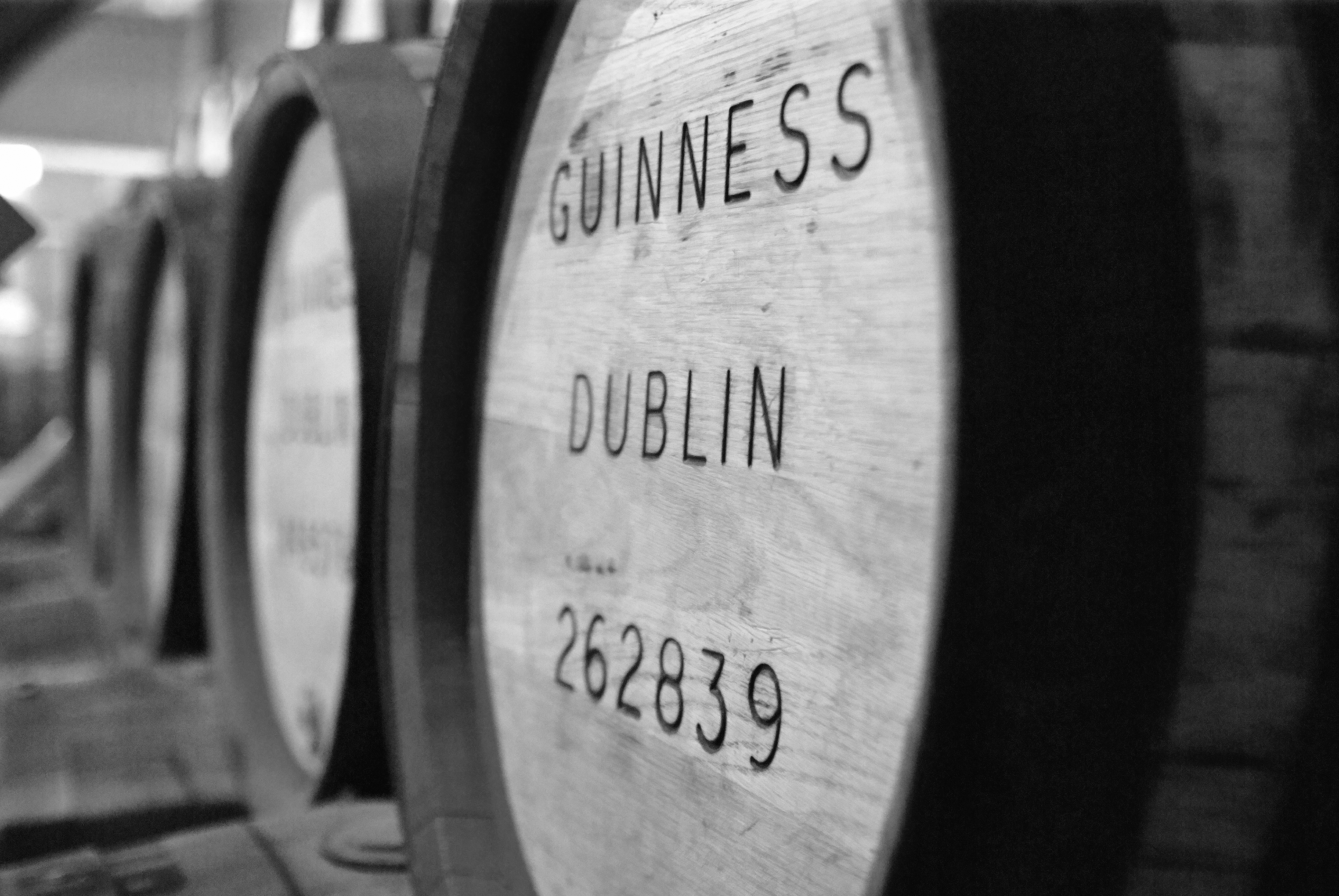

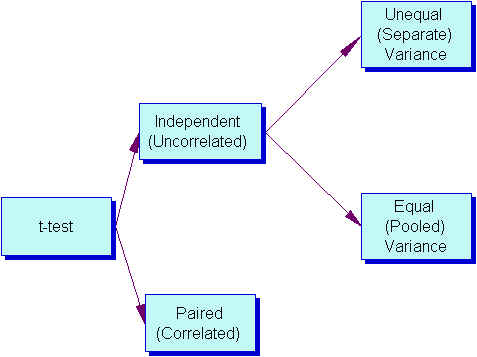

The following flowchart can be used to determine which t-test to use based on the characteristics of the sample sets. The key items to consider include the similarity of the sample records, the number of data records in each sample set, and the variance of each sample set.

Image by Julie Bang © Investopedia 2019

Example of an Unequal Variance T-Test

Assume that the diagonal measurement of paintings received in an art gallery is taken. One group of samples includes 10 paintings, while the other includes 20 paintings. The data sets, with the corresponding mean and variance values, are as follows:

| | Set 1 | Set 2 |
|---|---|---|
| | 19.7 | 28.3 |
| | 20.4 | 26.7 |
| | 19.6 | 20.1 |
| | 17.8 | 23.3 |
| | 18.5 | 25.2 |
| | 18.9 | 22.1 |
| | 18.3 | 17.7 |
| | 18.9 | 27.6 |
| | 19.5 | 20.6 |
| | 21.95 | 13.7 |
| | | 23.2 |
| | | 17.5 |
| | | 20.6 |
| | | 18 |
| | | 23.9 |
| | | 21.6 |
| | | 24.3 |
| | | 20.4 |
| | | 23.9 |
| | | 13.3 |
| Mean | 19.4 | 21.6 |
| Variance | 1.4 | 17.1 |

Though the mean of Set 2 is higher than that of Set 1, we cannot conclude that the population corresponding to Set 2 has a higher mean than the population corresponding to Set 1.

Is the difference from 19.4 to 21.6 due to chance alone, or do differences exist in the overall populations of all the paintings received in the art gallery? We establish the problem by assuming the null hypothesis that the mean is the same between the two sample sets and conduct a t-test to test if the hypothesis is plausible.

Since the number of data records is different (n1 = 10 and n2 = 20) and the variance is also different, the t-value and degrees of freedom are computed for the above data set using the formula mentioned in the Unequal Variance T-Test section.

The t-value is -2.24787. Since the minus sign can be ignored when comparing the two t-values, the computed value is 2.24787.

The degrees of freedom value is 24.38, which is rounded down to the nearest integer, 24.

One can specify a level of probability (alpha level, level of significance, p) as a criterion for acceptance. In most cases, a 5% value can be assumed.

Using the degree of freedom value as 24 and a 5% level of significance, a look at the t-value distribution table gives a value of 2.064. Comparing this value against the computed value of 2.248 indicates that the calculated t-value is greater than the table value at a significance level of 5%. Therefore, it is safe to reject the null hypothesis that there is no difference between means. The difference between the two populations is unlikely to be due to chance alone.
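The numbers in this example can be checked with a short Python sketch using only the standard library. It assumes that the 10 values under Set 1 and the 20 values under Set 2 are as listed above and that the variances are sample variances; the function name is illustrative.

```python
import math
import statistics

set1 = [19.7, 20.4, 19.6, 17.8, 18.5, 18.9, 18.3, 18.9, 19.5, 21.95]
set2 = [28.3, 26.7, 20.1, 23.3, 25.2, 22.1, 17.7, 27.6, 20.6, 13.7,
        23.2, 17.5, 20.6, 18, 23.9, 21.6, 24.3, 20.4, 23.9, 13.3]

def welch_t_test(a, b):
    """Unequal-variance (Welch) t-value and degrees of freedom."""
    n1, n2 = len(a), len(b)
    # Each term is a sample variance divided by its sample size.
    v1 = statistics.variance(a) / n1
    v2 = statistics.variance(b) / n2
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df

t, df = welch_t_test(set1, set2)
df_rounded = math.floor(df)        # round down to a whole number of degrees of freedom
critical = 2.064                   # table value quoted above for df = 24 at 5%
reject_null = abs(t) > critical    # the minus sign is ignored in the comparison
print(f"t = {t:.5f}, df = {df:.2f} -> {df_rounded}, reject H0: {reject_null}")
```

Running the sketch should reproduce the t-value and degrees of freedom reported in this example, up to rounding.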

How Is the T-Distribution Table Used?

The T-Distribution Table is available in one-tail and two-tails formats. The former is used for assessing cases that have a fixed value or range with a clear direction, either positive or negative. For instance, what is the probability of the output value remaining below -3, or getting more than seven when rolling a pair of dice? The latter is used for range-bound analysis, such as asking if the coordinates fall between -2 and +2.

What Is an Independent T-Test?

The samples of independent t-tests are selected independent of each other where the data sets in the two groups don’t refer to the same values. They may include a group of 100 randomly unrelated patients split into two groups of 50 patients each. One of the groups becomes the control group and is administered a placebo, while the other group receives a prescribed treatment. This constitutes two independent sample groups that are unpaired and unrelated to each other.

What Does a T-Test Explain and How Are They Used?

A t-test is a statistical test that is used to compare the means of two groups. It is often used in hypothesis testing to determine whether a process or treatment has an effect on the population of interest, or whether two groups are different from one another.


The Ultimate Guide to T Tests

Get all of your t test questions answered here

The t test is one of the simplest statistical techniques that is used to evaluate whether there is a statistical difference between the means from up to two different samples. The t test is especially useful when you have a small number of sample observations (under 30 or so), and you want to make conclusions about the larger population.

The characteristics of the data dictate the appropriate type of t test to run. All t tests are used as standalone analyses for very simple experiments and research questions as well as to perform individual tests within more complicated statistical models such as linear regression. In this guide, we’ll lay out everything you need to know about t tests, including providing a simple workflow to determine what t test is appropriate for your particular data or if you’d be better suited using a different model.

What is a t test?

A t test is a statistical technique used to quantify the difference between the mean (average value) of a variable from up to two samples (datasets). The variable must be numeric. Some examples are height, gross income, and amount of weight lost on a particular diet.

A t test tells you if the difference you observe is “surprising” based on the expected difference. T tests use t-distributions to evaluate the expected variability. When you have a reasonable-sized sample (over 30 or so observations), the t test can still be used, but other tests that use the normal distribution (the z test) can be used in its place.

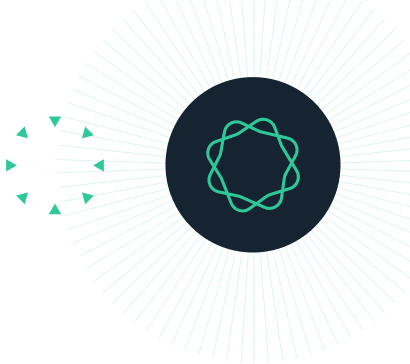

Sometimes t tests are called “Student’s” t tests, which is simply a reference to their unusual history.

The test got its name because a brewer from the Guinness Brewery, William Gosset, published about the method under the pseudonym "Student". He wanted to get information out of very small sample sizes (often 3-5) because it took so much effort to brew each keg for his samples.

When should I use a t test?

A t test is appropriate to use when you’ve collected a small, random sample from some statistical “population” and want to compare the mean from your sample to another value. The value for comparison could be a fixed value (e.g., 10) or the mean of a second sample.

For example, if your variable of interest is the average height of sixth graders in your region, then you might measure the height of 25 or 30 randomly-selected sixth graders. A t test could be used to answer questions such as, “Is the average height greater than four feet?”

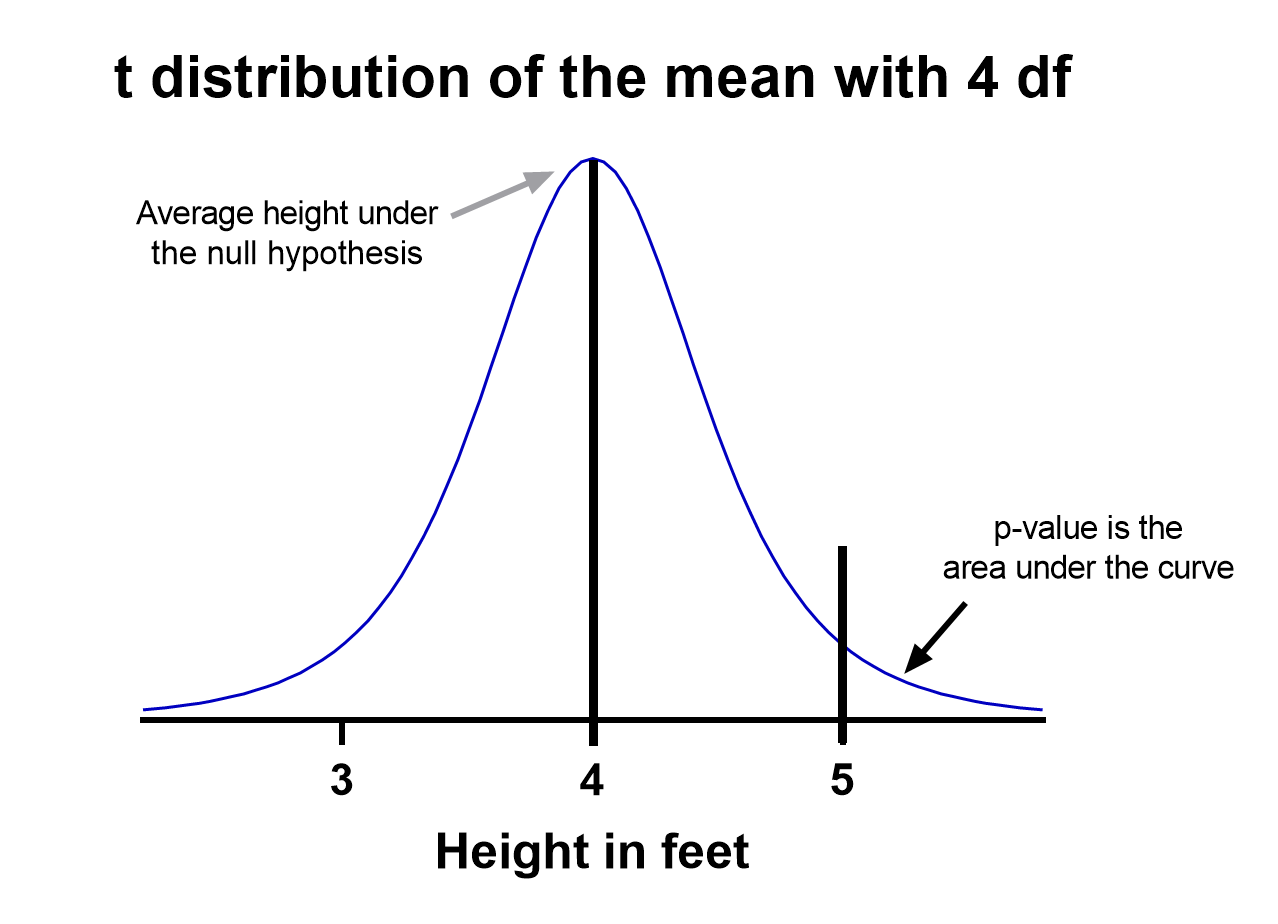

How does a t test work?

T tests make enough assumptions about your experiment to calculate an expected variability, and then they use that to determine if the observed data is statistically significant. To do this, t tests rely on an assumed “null hypothesis.” With the above example, the null hypothesis is that the average height is less than or equal to four feet.

Say that we measure the height of 5 randomly selected sixth graders and the average height is five feet. Does that mean that the “true” average height of all sixth graders is greater than four feet or did we randomly happen to measure taller than average students?

To evaluate this, we need a distribution that shows every possible average value resulting from a sample of five individuals in a population where the true mean is four. That may seem impossible to do, which is why there are particular assumptions that need to be made to perform a t test.

With those assumptions, then all that’s needed to determine the “sampling distribution of the mean” is the sample size (5 students in this case) and standard deviation of the data (let’s say it’s 1 foot).

That’s enough to create a graphic of the distribution of the mean, which is:

Notice the vertical line at x = 5, which was our sample mean. We (use software to) calculate the area to the right of the vertical line, which gives us the P value (0.09 in this case). Note that because our research question was asking if the average student is greater than four feet, the distribution is centered at four. Since we’re only interested in knowing if the average is greater than four feet, we use a one-tailed test in this case.

Using the standard significance level of 0.05 with this example, we don’t have evidence that the true average height of sixth graders is taller than 4 feet.

What are the assumptions for t tests?

- One variable of interest: This is not correlation or regression, where you are interested in the relationship between multiple variables. With a t test, you can have different samples, but they are all measuring the same variable (e.g., height).

- Numeric data: You are dealing with a list of measurements that can be averaged. This means you aren’t just counting occurrences in various categories (e.g., eye color or political affiliation).

- Two groups or less: If you have more than two samples of data, a t test is the wrong technique. You most likely need to try ANOVA.

- Random sample: You need a random sample from your statistical “population of interest” in order to draw valid conclusions about the larger population. If your population is so small that you can measure everything, then you have a “census” and don’t need statistics. This is because you don’t need to estimate the truth, since you have measured the truth without variability.

- Normally distributed: The smaller your sample size, the more important it is that your data come from a normal (Gaussian) bell-curve distribution. If you have reason to believe that your data are not normally distributed, consider nonparametric t test alternatives. Normality matters less for larger samples (usually 25 or 30 observations or more, unless the data is heavily skewed). The reason is that the Central Limit Theorem applies in this case, which says that even if the distribution of your data is not normal, the distribution of the mean of your data is approximately normal, so you can use a z-test rather than a t test.

How do I know which t test to use?

There are many types of t tests to choose from, but you don’t necessarily have to understand every detail behind each option.

You just need to be able to answer a few questions, which will lead you to pick the right t test. To that end, we put together this workflow for you to figure out which test is appropriate for your data.

Do you have one or two samples?

Are you comparing the means of two different samples, or comparing the mean from one sample to a fixed value? An example research question is, “Is the average height of my sample of sixth grade students greater than four feet?”

If you only have one sample of data, you need a one-sample t test. Otherwise, your next step is to ask:

Are observations in the two samples matched up or related in some way?

This could be as before-and-after measurements of the same exact subjects, or perhaps your study split up “pairs” of subjects (who are technically different but share certain characteristics of interest) into the two samples. The same variable is measured in both cases.

If so, you are looking at some kind of paired samples t test. Within that family, you choose between a difference test (most common) and a ratio test.

If you aren’t sure paired is right, ask yourself another question:

Are you comparing different observations in each of the two samples?

If the answer is yes, then you have an unpaired or independent samples t test. The two samples should measure the same variable (e.g., height), but are samples from two distinct groups (e.g., team A and team B).

The goal is to compare the means to see if the groups are significantly different. For example, “Is the average height of team A greater than team B?” Unlike paired, the only relationship between the groups in this case is that we measured the same variable for both. There are two versions of unpaired samples t tests (pooled and unpooled) depending on whether you assume the same variance for each sample.

Have you run the same experiment multiple times on the same subject/observational unit?

If so, then you have a nested t test (unless you have more than two sample groups). This is a trickier concept to understand. One example is if you are measuring how well Fertilizer A works against Fertilizer B. Let’s say you have 12 pots to grow plants in (6 pots for each fertilizer), and you grow 3 plants in each pot.

In this case you have 6 observational units for each fertilizer, with 3 subsamples from each pot. You would want to analyze this with a nested t test. The “nested” factor in this case is the pots. It’s important to note that we aren’t interested in estimating the variability within each pot; we just want to take it into account.

You might be tempted to run an unpaired samples t test here, but that assumes you have 6*3 = 18 replicates for each fertilizer. However, the three replicates within each pot are related, and an unpaired samples t test wouldn’t take that into account.

What if none of these sound like my experiment?

If you’re not seeing your research question above, note that t tests are very basic statistical tools. Many experiments require more sophisticated techniques to evaluate differences. If the variable of interest is a proportion (e.g., 10 of 100 manufactured products were defective), then you’d use z-tests. If you take before and after measurements and have more than one treatment (e.g., control vs a treatment diet), then you need ANOVA.

How do I perform a t test using software?

If you’re wondering how to do a t test, the easiest way is with statistical software such as Prism or an online t test calculator .

If you’re using software, then all you need to know is which t test is appropriate ( use the workflow here ) and understand how to interpret the output. To do that, you’ll also need to:

- Determine whether your test is one or two-tailed

- Choose the level of significance

Is my test one or two-tailed?

Whether or not you have a one- or two-tailed test depends on your research hypothesis. Choosing the appropriately tailed test is very important and requires integrity from the researcher. This is because you have more “power” with one-tailed tests, meaning that you can detect a statistically significant difference more easily. Unless you have written out your research hypothesis as one directional before you run your experiment, you should use a two-tailed test.

Two-tailed tests

Two-tailed tests are the most common, and they are applicable when your research question is simply asking, “is there a difference?”

One-tailed tests

Contrast that with one-tailed tests, where the research questions are directional, meaning that either the question is, “is it greater than” or the question is, “is it less than”. These tests can only detect a difference in one direction.

Choosing the level of significance

All t tests estimate whether a mean of a population is different than some other value, and with all estimates come some variability, or what statisticians call “error.” Before analyzing your data, you want to choose a level of significance, usually denoted by the Greek letter alpha, 𝛼. The scientific standard is setting alpha to be 0.05.

An alpha of 0.05 results in 95% confidence intervals, and determines the cutoff for when P values are considered statistically significant.

One sample t test

If you only have one sample of a list of numbers, you are doing a one-sample t test. All you are interested in doing is comparing the mean from this group with some known value to test whether there is evidence that it is significantly different from that standard. Use our free one-sample t test calculator for this.

A one sample t test example research question is, “Is the average fifth grader taller than four feet?”

It is the simplest version of a t test, and has all sorts of applications within hypothesis testing. Sometimes the “known value” is called the “null value”. While the null value in t tests is often 0, it could be any value. The name comes from being the value which exactly represents the null hypothesis, where no significant difference exists.

Any time you know the exact number you are trying to compare your sample of data against, this could work well. And of course: it can be either one or two-tailed.

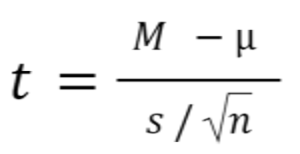

One sample t test formula

Statistical software handles this for you, but if you want the details, the formula for a one sample t test is:

$ t = \frac{M - \mu}{s / \sqrt{n}} $

- M: Calculated mean of your sample

- μ: Hypothetical mean you are testing against

- s: The standard deviation of your sample

- n: The number of observations in your sample.

In a one-sample t test, calculating degrees of freedom is simple: one less than the number of objects in your dataset (you’ll see it written as n-1 ).
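As a rough illustration of the formula and degrees of freedom just described, the sketch below computes a one-sample t statistic, t = (M − μ) / (s / √n), using only Python's standard library. The function name `one_sample_t` and the sample values are made up for illustration; they are not the Prism data set discussed in this article.

```python
import math
import statistics


def one_sample_t(values, mu):
    """Return (t, df) for a one-sample t test of `values` against `mu`."""
    n = len(values)
    m = statistics.mean(values)    # M: sample mean
    s = statistics.stdev(values)   # s: sample standard deviation (n - 1 denominator)
    se = s / math.sqrt(n)          # standard error of the mean
    return (m - mu) / se, n - 1    # degrees of freedom are n - 1


# Hypothetical measurements, tested against a null value of 2
t, df = one_sample_t([1, 2, 3, 4, 5], mu=2)
print(round(t, 3), df)
```

The statistic alone does not give a P value; that still requires the t-distribution with the returned degrees of freedom.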

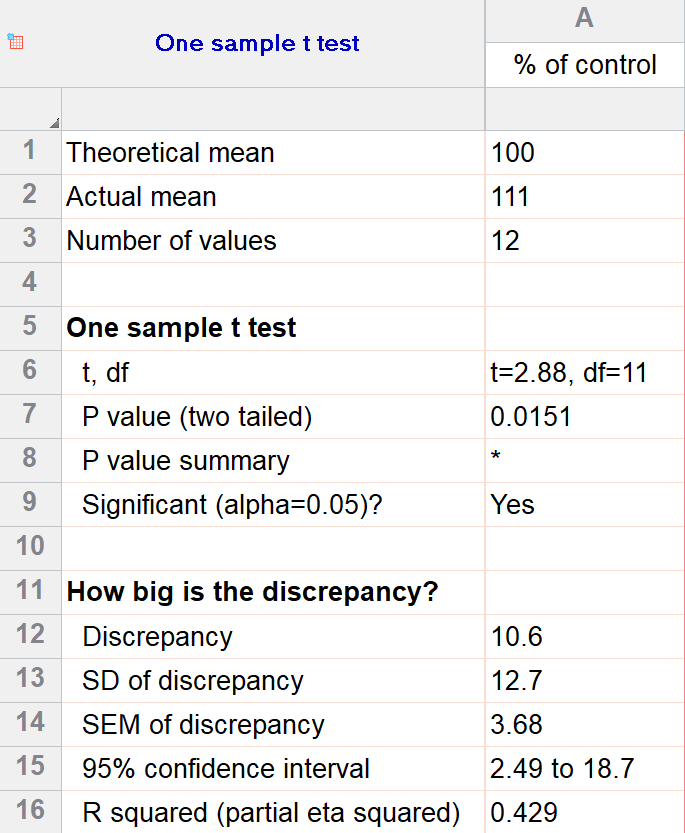

Example of a one sample t test

For our example within Prism, we have a dataset of 12 values from an experiment labeled “% of control”. Perhaps these are heights of a sample of plants that have been treated with a new fertilizer. A value of 100 represents the industry-standard control height. Likewise, 123 represents a plant with a height 123% that of the control (that is, 23% larger).

We’ll perform a two-tailed, one-sample t test to see if plants are shorter or taller on average with the fertilizer. We will use a significance threshold of 0.05. Here is the output:

You can see in the output that the actual sample mean was 111. Is that different enough from the industry standard (100) to conclude that there is a statistical difference?

The quick answer is yes, there’s strong evidence that the height of the plants with the fertilizer is greater than the industry standard (p=0.015). The nice thing about using software is that it handles some of the trickier steps for you. In this case, it calculates your test statistic (t=2.88), determines the appropriate degrees of freedom (11), and outputs a P value.

More informative than the P value is the confidence interval of the difference, which is 2.49 to 18.7. The confidence interval tells us that, based on our data, we are 95% confident that the true difference between the population mean and the baseline value of 100 is somewhere between 2.49 and 18.7. Whenever the difference is statistically significant at the matching alpha, the interval will not contain zero.
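To make the link between the confidence interval and the test concrete, here is a minimal sketch of how such an interval could be computed by hand. It assumes the critical value is looked up separately: 2.776 is the usual two-sided 95% t-table value for 4 degrees of freedom. The function name `ci_of_difference` and the data are hypothetical, not the plant-height data above.

```python
import math
import statistics


def ci_of_difference(values, null_value, t_crit):
    """Confidence interval for (sample mean - null_value).

    t_crit is the two-sided critical value for len(values) - 1 degrees
    of freedom, supplied by the caller (e.g. from a t table).
    """
    n = len(values)
    diff = statistics.mean(values) - null_value
    margin = t_crit * statistics.stdev(values) / math.sqrt(n)
    return diff - margin, diff + margin


# Hypothetical data: 5 values, null value 2, 95% critical value for df = 4
low, high = ci_of_difference([1, 2, 3, 4, 5], null_value=2, t_crit=2.776)
print(round(low, 3), round(high, 3))
```

In this made-up case the interval spans zero, which goes hand in hand with a two-tailed test that is not significant at alpha = 0.05.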

You can follow these tips for interpreting your own one-sample test.

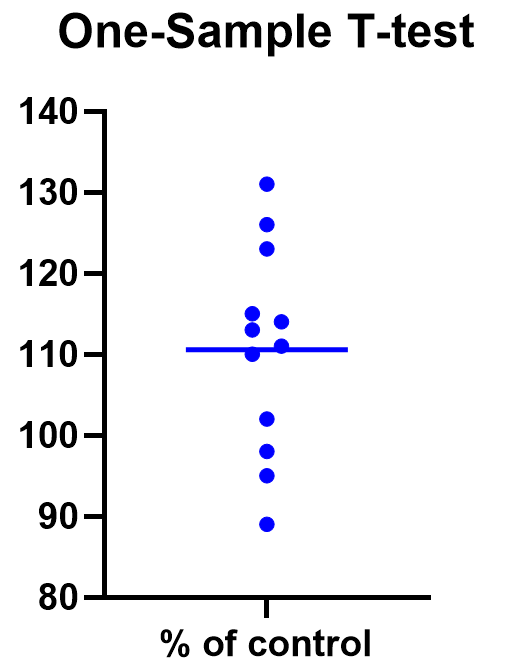

Graphing a one-sample t test

For some techniques (like regression), graphing the data is a very helpful part of the analysis. For t tests, making a chart of your data is still useful to spot any strange patterns or outliers, but the small sample size means you may already be familiar with any strange things in your data.

Here we have a simple plot of the data points, perhaps with a mark for the average. We’ve made this as an example, but the truth is that graphing is usually more visually telling for two-sample t tests than for just one sample.

Two sample t tests

There are several kinds of two sample t tests, with the two main categories being paired and unpaired (independent) samples.

Paired samples t test

In a paired samples t test, also called dependent samples t test, there are two samples of data, and each observation in one sample is “paired” with an observation in the second sample. The most common example is when measurements are taken on each subject before and after a treatment. A paired t test example research question is, “Is there a statistical difference between the average red blood cell counts before and after a treatment?”

Having two samples that are closely related simplifies the analysis. Statistical software, such as this paired t test calculator , will simply take a difference between the two values, and then compare that difference to 0.

In some (rare) situations, taking a difference between the pairs violates the assumptions of a t test, because the average difference changes based on the size of the before value (e.g., there’s a larger difference between before and after when there were more to start with). In this case, instead of using a difference test, use a ratio of the before and after values, which is referred to as ratio t tests .

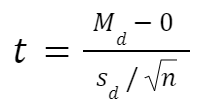

Paired t test formula

The formula for paired samples t test is:

$ t = \frac{M_d - 0}{s_d / \sqrt{n}} $

- $ M_d $: Mean difference between the samples

- $ s_d $: The standard deviation of the differences

- n: The number of differences

Degrees of freedom are the same as before. If you’re studying for an exam, you can remember that the degrees of freedom are still n-1 (not n-2) because we are converting the data into a single column of differences rather than considering the two groups independently.

Also note that the null value here is simply 0. There is no real reason to include “minus 0” in an equation other than to illustrate that we are still doing a hypothesis test. After you take the difference between the two means, you are comparing that difference to 0.
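The calculation described above, reducing the pairs to a single column of differences and comparing their mean to 0, can be sketched in a few lines of standard-library Python. The function name `paired_t` and the before/after values are invented for illustration only.

```python
import math
import statistics


def paired_t(before, after):
    """Return (t, df) for a paired t test of `after` versus `before`."""
    if len(before) != len(after):
        raise ValueError("paired samples must have the same length")
    diffs = [a - b for b, a in zip(before, after)]   # one difference per pair
    n = len(diffs)
    mean_d = statistics.mean(diffs)                  # mean difference
    sd_d = statistics.stdev(diffs)                   # SD of the differences
    t = (mean_d - 0) / (sd_d / math.sqrt(n))         # null value is 0
    return t, n - 1                                  # df = n - 1, not n - 2


# Hypothetical before/after measurements on five subjects
t, df = paired_t([1, 2, 3, 4, 5], [2, 4, 5, 4, 5])
print(round(t, 3), df)
```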

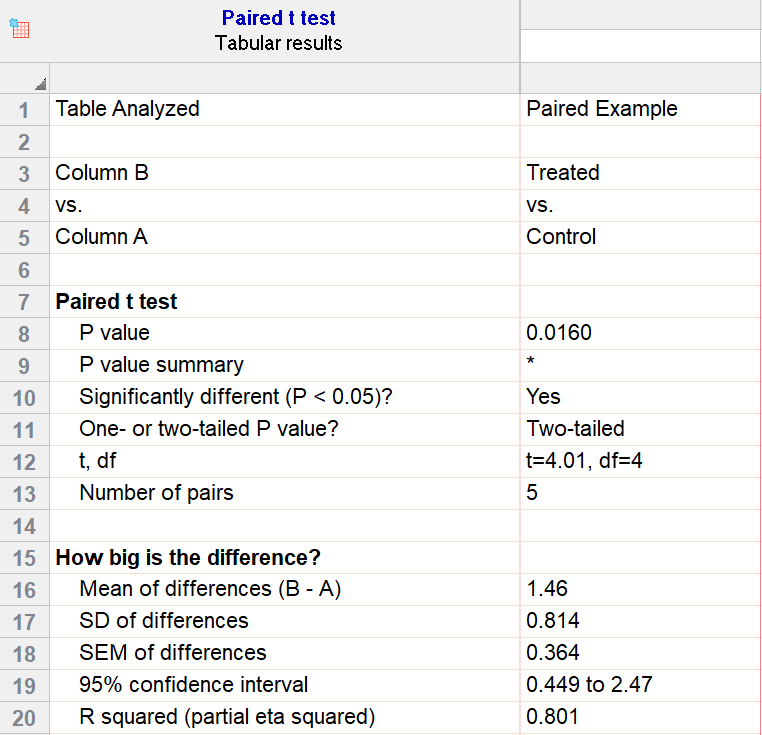

For our example data, we have five test subjects and have taken two measurements from each: before (“control”) and after a treatment (“treated”). If we set alpha = 0.05 and perform a two-tailed test, we observe a statistically significant difference between the treated and control group (p=0.0160, t=4.01, df = 4). We are 95% confident that the true mean difference between the treated and control group is between 0.449 and 2.47.

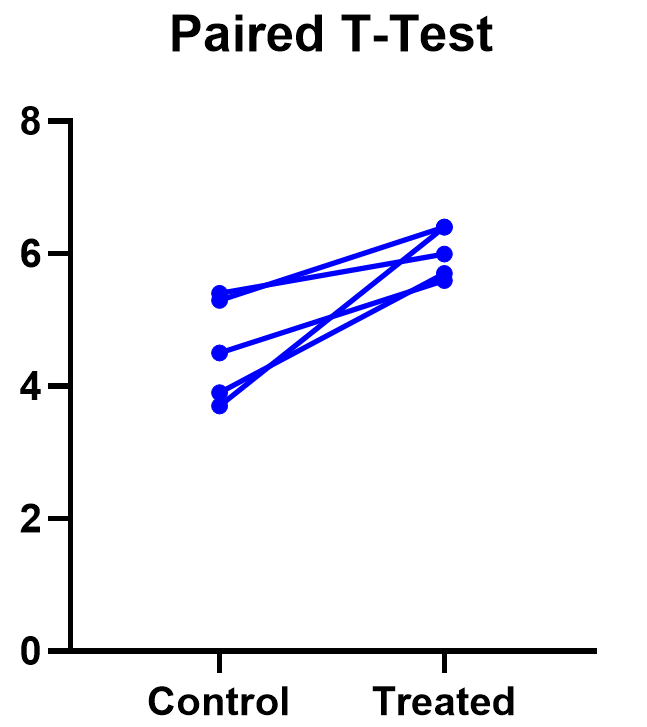

Graphing a paired t test

The significant result of the P value suggests evidence that the treatment had some effect, and we can also look at this graphically. The lines that connect the observations can help us spot a pattern, if it exists. In this case the lines show that all observations increased after treatment. While not all graphics are this straightforward, here it is very consistent with the outcome of the t test.

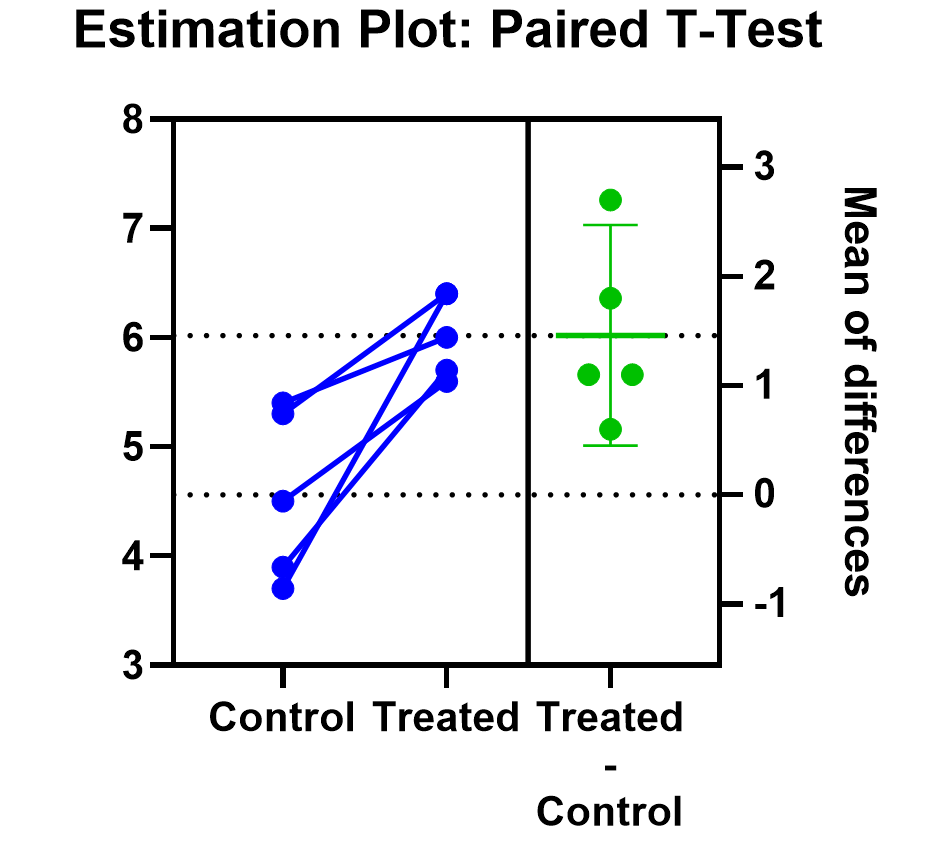

Prism’s estimation plot is even more helpful because it shows both the data (like above) and the confidence interval for the difference between means. You can easily see the evidence of significance since the confidence interval on the right does not contain zero.

Here are some more graphing tips for paired t tests .

Unpaired samples t test

Unpaired samples t test, also called independent samples t test, is appropriate when you have two sample groups that aren’t correlated with one another. A pharma example is testing a treatment group against a control group of different subjects. Compare that with a paired sample, which might be recording the same subjects before and after a treatment.

With unpaired t tests, in addition to choosing your level of significance and a one or two tailed test, you need to decide whether to assume that the variances of the two groups are the same. If you assume equal variances, then you can “pool” the calculation of the standard error between the two samples. Otherwise, the standard choice is Welch’s t test which corrects for unequal variances. This choice affects the calculation of the test statistic and the power of the test, which is the test’s sensitivity to detect statistical significance.

It’s best to choose whether you’ll use a pooled or unpooled (Welch’s) standard error before running your experiment, because the standard statistical test for equal variances is notoriously problematic. See more details about unequal variances here.

As long as you’re using statistical software, such as this two-sample t test calculator , it’s just as easy to calculate a test statistic whether or not you assume that the variances of your two samples are the same. If you’re doing it by hand, however, the calculations get more complicated with unequal variances.

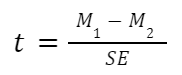

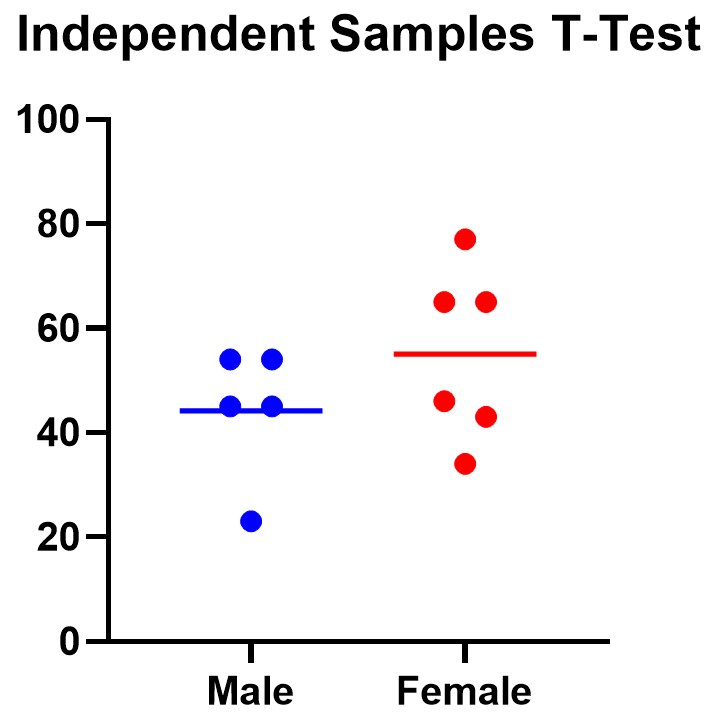

Unpaired (independent) samples t test formula

The general two-sample t test formula is:

$ t = \frac{M_1 - M_2}{SE} $

- M1 and M2: Two means you are comparing, one from each dataset

- SE : The combined standard error of the two samples (calculated using pooled or unpooled standard error)

The denominator (standard error) calculation can be complicated, as can the degrees of freedom. If the groups are not balanced (the same number of observations in each), you will need to account for both when determining n for the test as a whole.
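The sketch below shows one common way the two standard errors and their degrees of freedom are computed: the pooled version with n1 + n2 − 2 degrees of freedom, and the unpooled (Welch) version with the Welch-Satterthwaite approximation. The function name `unpaired_t`, its `equal_var` flag and the data are assumptions for illustration; this is not taken from Prism's implementation.

```python
import math
import statistics


def unpaired_t(a, b, equal_var=True):
    """Return (t, df) for two independent samples.

    equal_var=True  -> pooled standard error, df = n1 + n2 - 2
    equal_var=False -> unpooled (Welch) standard error with
                       Welch-Satterthwaite degrees of freedom
    """
    n1, n2 = len(a), len(b)
    m1, m2 = statistics.mean(a), statistics.mean(b)
    v1, v2 = statistics.variance(a), statistics.variance(b)
    if equal_var:
        df = n1 + n2 - 2
        pooled_var = ((n1 - 1) * v1 + (n2 - 1) * v2) / df
        se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    else:
        q1, q2 = v1 / n1, v2 / n2
        se = math.sqrt(q1 + q2)
        df = (q1 + q2) ** 2 / (q1 ** 2 / (n1 - 1) + q2 ** 2 / (n2 - 1))
    return (m1 - m2) / se, df


# Hypothetical unbalanced groups (5 and 4 observations)
group_a = [1, 2, 3, 4, 5]
group_b = [4, 6, 8, 10]
print(unpaired_t(group_a, group_b, equal_var=True))
print(unpaired_t(group_a, group_b, equal_var=False))
```

Note that the Welch degrees of freedom are generally not a whole number, which is one reason the by-hand calculation is more awkward with unequal variances.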

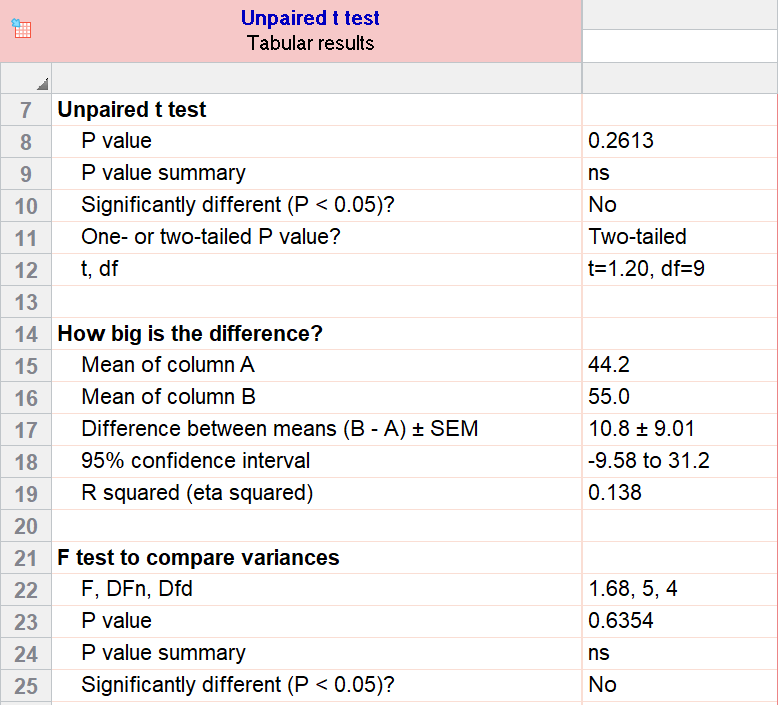

As an example for this family, we conduct an unpaired samples t test assuming equal variances (pooled). Based on our research hypothesis, we’ll conduct a two-tailed test, and use alpha=0.05 for our level of significance. Our samples were unbalanced, with two samples of 6 and 5 observations respectively.

The P value (p=0.261, t = 1.20, df = 9) is higher than our threshold of 0.05. We have not found sufficient evidence to suggest a significant difference. You can see the confidence interval of the difference of the means is -9.58 to 31.2.

Note that the F-test result shows that the variances of the two groups are not significantly different from each other.

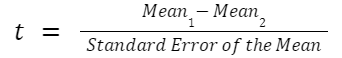

Graphing an unpaired samples t test

For an unpaired samples t test, graphing the data can quickly help you get a handle on the two groups and how similar or different they are. Like the paired example, this helps confirm the evidence (or lack thereof) that is found by doing the t test itself.

Below you can see that the observed mean for females is higher than that for males. But because of the variability in the data, we can’t tell if the means are actually different or if the difference is just by chance.

Nonparametric alternatives for t tests

If your data comes from a normal distribution (or something close enough to a normal distribution), then a t test is valid. If that assumption is violated, you can use nonparametric alternatives.

T tests evaluate whether the mean is different from another value, whereas nonparametric alternatives compare either the median or the rank. Medians are well-known to be much more robust to outliers than the mean.

The downside to nonparametric tests is that they don’t have as much statistical power, meaning a larger difference is required in order to determine that it’s statistically significant.

Wilcoxon signed-rank test

The Wilcoxon signed-rank test is the nonparametric cousin to the one-sample t test. This compares a sample median to a hypothetical median value. It is sometimes even erroneously called the Wilcoxon t test (even though it calculates a “W” statistic).

And if you have two related samples, you should use the Wilcoxon matched pairs test instead. The two versions of Wilcoxon are different, and the matched pairs version is specifically for comparing the median difference for paired samples.

Mann-Whitney and Kolmogorov-Smirnov tests

For unpaired (independent) samples, there are multiple options for nonparametric testing. Mann-Whitney is more popular and compares the mean ranks (the ordering of values from smallest to largest) of the two samples. Mann-Whitney is often misrepresented as a comparison of medians, but that’s not always the case. Kolmogorov-Smirnov tests if the overall distributions differ between the two samples.

More t test FAQs

What is the formula for a t test?

The exact formula depends on which type of t test you are running, although there is a basic structure that all t tests have in common. All t test statistics will have the form:

$ t = \frac{\text{Mean}_1 - \text{Mean}_2}{\text{Standard Error of the Mean}} $

- t : The t test statistic you calculate for your test

- Mean1 and Mean2: Two means you are comparing, at least 1 from your own dataset

- Standard Error of the Mean : The standard error of the mean , also called the standard deviation of the mean, which takes into account the variance and size of your dataset

The exact formula for any t test can be slightly different, particularly the calculation of the standard error. Not only does it matter whether one or two samples are being compared, the relationship between the samples can make a difference too.

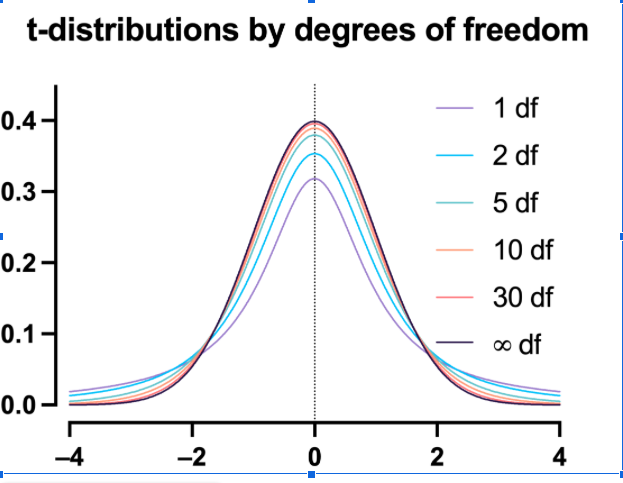

What is a t-distribution?

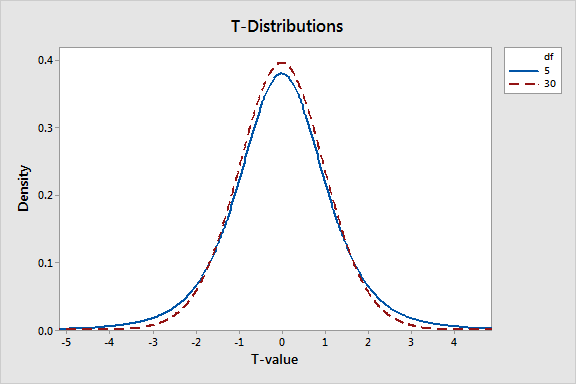

A t-distribution is similar to a normal distribution. It’s a bell-shaped curve, but compared to a normal it has fatter tails, which means that it’s more common to observe extremes. T-distributions are identified by the number of degrees of freedom. The higher the number, the closer the t-distribution gets to a normal distribution. After about 30 degrees of freedom, a t and a standard normal are practically the same.
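A quick numerical check of the "fatter tails" claim: the sketch below evaluates the standard t density and the standard normal density at the same point for several degrees of freedom. The helper names `t_pdf` and `normal_pdf` are made up here; the density formula itself is the textbook one, written with `math.lgamma` so that large degrees of freedom do not overflow.

```python
import math


def t_pdf(x, df):
    """Density of Student's t-distribution with `df` degrees of freedom."""
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))
    return math.exp(log_c - (df + 1) / 2 * math.log1p(x * x / df))


def normal_pdf(x):
    """Density of the standard normal distribution."""
    return math.exp(-x * x / 2) / math.sqrt(2 * math.pi)


# Density three units from the center: t (various df) versus normal
for df in (2, 5, 30, 1000):
    print(df, round(t_pdf(3.0, df), 5), round(normal_pdf(3.0), 5))
```

The t density out in the tail shrinks toward the normal value as the degrees of freedom grow.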

What are degrees of freedom?

Degrees of freedom are a measure of how large your dataset is. They aren’t exactly the number of observations, because they also take into account the number of parameters (e.g., mean, variance) that you have estimated.

What is the difference between paired vs unpaired t tests?

Both paired and unpaired t tests involve two sample groups of data. With a paired t test, the values in each group are related (usually they are before and after values measured on the same test subject). In contrast, with unpaired t tests, the observed values aren’t related between groups. An unpaired, or independent t test, example is comparing the average height of children at school A vs school B.

When do I use a z-test versus a t test?

Z-tests, which compare data using a normal distribution rather than a t-distribution, are primarily used for two situations. The first is when you’re evaluating proportions (number of failures on an assembly line). The second is when your sample size is large enough (usually around 30) that you can use a normal approximation to evaluate the means.

When should I use ANOVA instead of a t test?

Use ANOVA if you have more than two group means to compare.

What are the differences between t test vs chi square?

Chi square tests are used to evaluate contingency tables , which record a count of the number of subjects that fall into particular categories (e.g., truck, SUV, car). t tests compare the mean(s) of a variable of interest (e.g., height, weight).

What are P values?

P values are the probability that you would get data as or more extreme than the observed data given that the null hypothesis is true. It’s a mouthful, and there are a lot of issues to be aware of with P values.
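For readers who want to see where a t test P value comes from, here is a minimal sketch that integrates the t density numerically (Simpson's rule) to get a two-tailed P value from a t statistic and its degrees of freedom. The function names and the step count are arbitrary choices for illustration; statistical software uses more refined routines.

```python
import math


def t_pdf(x, df):
    """Density of Student's t-distribution with `df` degrees of freedom."""
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))
    return math.exp(log_c - (df + 1) / 2 * math.log1p(x * x / df))


def two_tailed_p(t, df, steps=2000):
    """P(|T| >= |t|) under the null, via Simpson's rule on [0, |t|]."""
    upper = abs(t)
    if upper == 0:
        return 1.0
    h = upper / steps                     # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = total * h / 3                  # P(0 <= T <= |t|)
    return 2 * (0.5 - area)               # both tails beyond |t|


print(round(two_tailed_p(2.88, 11), 4))
print(round(two_tailed_p(4.01, 4), 4))
```

With the statistics quoted in the examples in this article (t = 2.88 with 11 degrees of freedom, and t = 4.01 with 4), this should give values close to the reported p = 0.015 and p = 0.0160; small discrepancies are expected because the t values are rounded. A one-tailed P value in the observed direction would be half of the two-tailed value.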

What are t test critical values?

Critical values are a classical form (they aren’t used directly with modern computing) of determining if a statistical test is significant or not. Historically you could calculate your test statistic from your data, and then use a t-table to look up the cutoff value (critical value) that represented a “significant” result. You would then compare your observed statistic against the critical value.

How do I calculate degrees of freedom for my t test?

In most practical usage, degrees of freedom are the number of observations you have minus the number of parameters you are trying to estimate. The calculation isn’t always straightforward and is approximated for some t tests.

Statistical software calculates degrees of freedom automatically as part of the analysis, so understanding them in more detail isn’t needed beyond assuaging any curiosity.


JMP | Statistical Discovery.™ From SAS.

Statistics Knowledge Portal

A free online introduction to statistics

What is a t-test?

A t-test (also known as Student's t-test) is a tool for evaluating the means of one or two populations using hypothesis testing. A t-test may be used to evaluate whether a single group differs from a known value (a one-sample t-test), whether two groups differ from each other (an independent two-sample t-test), or whether there is a significant difference in paired measurements (a paired, or dependent samples t-test).

How are t-tests used?

First, you define the hypothesis you are going to test and specify an acceptable risk of drawing a faulty conclusion. For example, when comparing two populations, you might hypothesize that their means are the same, and you decide on an acceptable probability of concluding that a difference exists when that is not true. Next, you calculate a test statistic from your data and compare it to a theoretical value from a t-distribution. Depending on the outcome, you either reject or fail to reject your null hypothesis.

What if I have more than two groups?

You cannot use a t-test. Use a multiple comparison method. Examples are analysis of variance (ANOVA), Tukey-Kramer pairwise comparison, Dunnett's comparison to a control, and analysis of means (ANOM).

t-Test assumptions

While t-tests are relatively robust to deviations from assumptions, t-tests do assume that:

- The data are continuous.

- The sample data have been randomly sampled from a population.

- There is homogeneity of variance (i.e., the variability of the data in each group is similar).

- The distribution is approximately normal.

For two-sample t-tests, we must have independent samples. If the samples are not independent, then a paired t-test may be appropriate.

Types of t-tests

There are three t-tests to compare means: a one-sample t-test, a two-sample t-test and a paired t-test. The table below summarizes the characteristics of each and provides guidance on how to choose the correct test. Visit the individual pages for each type of t-test for examples along with details on assumptions and calculations.

| | One-sample t-test | Two-sample t-test | Paired t-test |
|---|---|---|---|
| Synonyms | Student’s t-test | Independent samples t-test | Dependent samples t-test |
| Number of variables | One | Two | Two |
| Type of variable | | | |
| Purpose of test | Decide if the population mean is equal to a specific value or not | Decide if the population means for two different groups are equal or not | Decide if the difference between paired measurements for a population is zero or not |
| Example: test if... | Mean heart rate of a group of people is equal to 65 or not | Mean heart rates for two groups of people are the same or not | Mean difference in heart rate for a group of people before and after exercise is zero or not |
| Estimate of population mean | Sample average | Sample average for each group | Sample average of the differences in paired measurements |
| Population standard deviation | Unknown, use sample standard deviation | Unknown, use sample standard deviations for each group | Unknown, use sample standard deviation of differences in paired measurements |
| Degrees of freedom | Number of observations in sample minus 1, or: n–1 | Sum of observations in each sample minus 2, or: n1 + n2 – 2 | Number of paired observations in sample minus 1, or: n–1 |

The table above shows only the t-tests for population means. Another common t-test is for correlation coefficients. You use this t-test to decide if the correlation coefficient is significantly different from zero.

One-tailed vs. two-tailed tests

When you define the hypothesis, you also define whether you have a one-tailed or a two-tailed test. You should make this decision before collecting your data or doing any calculations. You make this decision for all three of the t-tests for means.

To explain, let’s use the one-sample t-test. Suppose we have a random sample of protein bars, and the label for the bars advertises 20 grams of protein per bar. The null hypothesis is that the unknown population mean is 20. Suppose we simply want to know if the data shows we have a different population mean. In this situation, our hypotheses are:

$ \mathrm H_o: \mu = 20 $

$ \mathrm H_a: \mu \neq 20 $

Here, we have a two-tailed test. We will use the data to see if the sample average differs sufficiently from 20 – either higher or lower – to conclude that the unknown population mean is different from 20.

Suppose instead that we want to know whether the advertising on the label is correct. Does the data support the idea that the unknown population mean is at least 20? Or not? In this situation, our hypotheses are:

$ \mathrm H_o: \mu \geq 20 $

$ \mathrm H_a: \mu < 20 $

Here, we have a one-tailed test. We will use the data to see if the sample average is sufficiently less than 20 to reject the hypothesis that the unknown population mean is 20 or higher.

See the "tails for hypotheses tests" section on the t-distribution page for images that illustrate the concepts for one-tailed and two-tailed tests.

How to perform a t-test

For all of the t-tests involving means, you perform the same steps in analysis:

- Define your null ($ \mathrm H_o $) and alternative ($ \mathrm H_a $) hypotheses before collecting your data.

- Decide on the alpha value (or α value). This involves determining the risk you are willing to take of drawing the wrong conclusion. For example, suppose you set α=0.05 when comparing two independent groups. Here, you have decided on a 5% risk of concluding the unknown population means are different when they are not.

- Check the data for errors.

- Check the assumptions for the test.

- Perform the test and draw your conclusion. All t-tests for means involve calculating a test statistic. You compare the test statistic to a theoretical value from the t-distribution. The theoretical value involves both the α value and the degrees of freedom for your data. For more detail, visit the pages for one-sample t-test, two-sample t-test and paired t-test.

Uncomplicated Reviews of Educational Research Methods

- Significance Testing (t-tests)


In this review, we’ll look at significance testing, using mostly the t-test as a guide. As you read educational research, you’ll encounter t-test and ANOVA statistics frequently. Part I reviews the basics of significance testing as related to the null hypothesis and p values. Part II shows you how to conduct a t-test, using an online calculator. Part III deals with interpreting t-test results. Part IV is about reporting t-test results in both text and table formats and concludes with a guide to interpreting confidence intervals.

What is Statistical Significance?

The terms “significance level” or “level of significance” refer to the risk you accept of concluding that a difference exists when it is really just a product of the random sample you happened to draw (for example, test scores). The lower the significance level, the stronger the evidence you require before calling a result significant. Significance levels most commonly used in educational research are the .05 and .01 levels. If it helps, think of .05 as another way of saying that, if there were truly no difference in the population, only 5/100 samples would produce a result this extreme. Similarly, .01 means that only 1/100 samples would do so. These numbers and signs (more on that later) come from Significance Testing, which begins with the Null Hypothesis.

Part I: The Null Hypothesis

We start by revisiting familiar territory, the scientific method . We’ll start with a basic research question: How does variable A affect variable B? The traditional way to test this question involves:

Step 1. Develop a research question.

Step 2. Find previous research to support, refute, or suggest ways of testing the question.

Step 3. Construct a hypothesis by revising your research question:

| Hypothesis | Meaning | Type |
|---|---|---|
| H1: A = B | There is no relationship between A and B | Null |
| H2: A ≠ B | There is a relationship between A and B. Here, there is a relationship, but we don’t know if it is positive or negative. | Alternate |
| H3: A < B | There is a negative relationship between A and B. Here, the < suggests that the less A is involved, the better B. | Alternate |
| H4: A > B | There is a positive relationship between A and B. Here, the > suggests that the more B is involved, the better A. | Alternate |

Step 4. Test the null hypothesis. To test the null hypothesis, A = B, we use a significance test. The italicized lowercase p you often see, followed by a > or < sign and a decimal (p ≤ .05), indicates significance. In most cases, the researcher tests the null hypothesis, A = B, because it is easier to show there is some sort of effect of A on B than to have to determine a positive or negative effect prior to conducting the research. This way, you leave yourself room without having the burden of proof on your study from the beginning.

Step 5. Analyze data and draw a conclusion. Testing the null hypothesis leaves two possibilities:

| Outcome | Decision | Hypothesis |
|---|---|---|
| A = B | Fail to reject the null. We find no relationship between A and B. | Null |
| A ≠, <, or > B | Reject the null. We find a relationship between A and B. | Alternate |

Step 6. Communicate results. See Wording results, below.

Part II: Conducting a t-test (for Independent Means)

So how do we test a null hypothesis? One way is with a t-test. A t-test asks the question,

“Is the difference between the means of two samples different (significant) enough to say that some other characteristic (teaching method, teacher, gender, etc.) could have caused it?”

To conduct a t-test using an online calculator, complete the following steps:

Step 1. Compose the Research Question.

Step 2. Compose a Null and an Alternative Hypothesis.

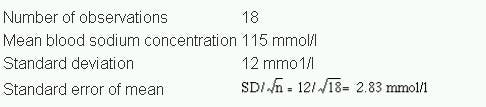

Step 3. Obtain two random samples of at least 30, preferably 50, from each group.

Step 4. Conduct a t-test:

- Go to http://www.graphpad.com/quickcalcs/ttest1.cfm

- For #1, check “Enter mean, SD and N.”

- For #2, label your groups and enter data. You will need to have mean and SD. N is group size.

- For #3, check “Unpaired t test.”

- For #4, click “Calculate now.”

Step 5. Interpret the results (see below).

Step 6. Report results in text or table format (see below).

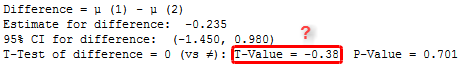

- Get p from “P value and statistical significance:” Note that this is the actual value.

- Get the confidence interval from “Confidence interval:”

- Get the t and df values from “Intermediate values used in calculations:”

- Get Mean and SD from “Review your data.”

Part III. Interpreting a t-test (Understanding the Numbers)

| Element | What it tells you |
|---|---|
| t | tells you a t-test was used. |
| (98) | tells you the degrees of freedom (for two independent groups, the combined sample size minus 2). |
| 3.09 | is the “t statistic” – the result of the calculation. |
| p ≤ .05 | is the probability of getting a difference at least as large as the one observed between the sample groups if the null hypothesis were true. |

| Result | Interpretation |
|---|---|
| p > .05 | likely to be a result of chance (same as saying A = B); difference is not significant; “fail to reject the null”; we find no relationship between A and B. |
| p ≤ .05 | not likely to be a result of chance (same as saying A ≠ B); difference is significant; “reject the null”; there is a relationship between A and B. |

Note: We acknowledge that the average scores are different. With a t-test we are deciding if that difference is significant (is it due to sampling error or something else?).

Understanding the Confidence Interval (CI)

The Confidence Interval (CI) of a mean is a range within which the true population value (like a mean test score, or the difference between two means) is estimated to fall with a certain amount of “confidence.” The CI uses sample size and standard deviation to generate a lower and upper number. With a 95% CI, if you repeated the sampling many times, about 95% of the intervals calculated this way would contain the true value.

Consider Georgia’s AYP measure, the CRCT. For a science CRCT score, we take two group samples (for example by year, or gender, etc.) and compare their means. After a few calculations, we could determine something like. . .the mean difference between the groups is -7.5, with a 95% CI of -22.08 to 6.72. In other words, in our samples one of the groups scored, on average, 7.5 points lower than the other group, and we can be fairly certain that the true difference in scores is between -22.08 and 6.72 points. Because this interval includes zero, we cannot rule out that there is no difference at all.

Part IV. Wording Results

Wording Results in Text

In text, the basic format is to report: sample size (N), mean (M) and standard deviation (SD) for both samples, t value, degrees of freedom (df), significance (p), and confidence interval (CI.95).

Example 1: p ≤ .05, or Significant Results

Among 7th graders in Lowndes County Schools taking the CRCT reading exam ( N = 336), there was a statistically significant difference between the two teaching teams, team 1 ( M = 818.92, SD = 16.11) and team 2 ( M = 828.28, SD = 14.09), t (98) = 3.09, p ≤ .05, CI .95 -15.37, -3.35. Therefore, we reject the null hypothesis that there is no difference in reading scores between teaching teams 1 and 2.

Example 2: p > .05, or Not Significant Results

Among 7th graders in Lowndes County Schools taking the CRCT science exam (N = 336), there was no statistically significant difference between female students (M = 834.00, SD = 32.81) and male students (M = 841.08, SD = 28.76), t(98) = 1.15, p > .05, CI.95 -19.32, 5.16. Therefore, we fail to reject the null hypothesis that there is no difference in science scores between females and males.
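The t value in Example 2 can be checked from the summary statistics alone, much as the online calculator does when you choose “Enter mean, SD and N.” The sketch below assumes 50 students per group, as listed in Table 1, and a pooled standard error; the function name `t_from_summary` is made up for illustration.

```python
import math


def t_from_summary(m1, sd1, n1, m2, sd2, n2):
    """Pooled-variance unpaired t test from means, SDs and group sizes.

    Returns (t, df, se), where se is the standard error of the difference.
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df, se


# Female vs. male science scores, assuming n = 50 per group (Table 1)
t, df, se = t_from_summary(834.00, 32.81, 50, 841.08, 28.76, 50)
print(round(t, 2), df, round(se, 2))
```

The sign of t only reflects which group is listed first; write-ups like the one above usually report its absolute value.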

Wording Results in APA Table Format

Table 1. Comparison of CRCT 7 th Grade Science Scores by Gender

|

|

|

|

|

|

|

| |

| Female |

50

|

834.00 |

32.81 |

– |

– |

– |

– |

| Male |

50

|

841.08 |

28.76 |

– |

– |

– |

– |

| Total |

100

|

837.54 |

30.90 |

1.14 |

98 |

.2540 |

-19.32 – 5.16 |



About research rundowns.

Research Rundowns was made possible by support from the Dewar College of Education at Valdosta State University .


Statistics By Jim

Making statistics intuitive

How t-Tests Work: t-Values, t-Distributions, and Probabilities

By Jim Frost

T-tests are statistical hypothesis tests that you use to analyze one or two sample means. Depending on the t-test that you use, you can compare a sample mean to a hypothesized value, the means of two independent samples, or the difference between paired samples. In this post, I show you how t-tests use t-values and t-distributions to calculate probabilities and test hypotheses.

As usual, I’ll provide clear explanations of t-values and t-distributions using concepts and graphs rather than formulas! If you need a primer on the basics, read my hypothesis testing overview.

What Are t-Values?

The term “t-test” refers to the fact that these hypothesis tests use t-values to evaluate your sample data. T-values are a type of test statistic. Hypothesis tests use the test statistic that is calculated from your sample to compare your sample to the null hypothesis. If the test statistic is extreme enough, this indicates that your data are so incompatible with the null hypothesis that you can reject the null. Learn more about Test Statistics .

Don’t worry. I find these technical definitions of statistical terms are easier to explain with graphs, and we’ll get to that!

When you analyze your data with any t-test, the procedure reduces your entire sample to a single value, the t-value. These calculations factor in your sample size and the variation in your data. Then, the t-test compares your sample mean(s) to the null hypothesis condition in the following manner:

- If the sample data equals the null hypothesis precisely, the t-test produces a t-value of 0.

- As the sample data become progressively dissimilar from the null hypothesis, the absolute value of the t-value increases.
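The two behaviors in the list above can be illustrated with a minimal sketch of a 1-sample t-value, assuming the usual formula (sample mean minus null value, divided by the standard error of the mean). The data and the function name `one_sample_t` are invented for illustration.

```python
import math
import statistics

def one_sample_t(sample, null_mean):
    """t-value for a 1-sample t-test of `sample` against `null_mean`."""
    n = len(sample)
    mean = statistics.mean(sample)
    sd = statistics.stdev(sample)        # sample SD (n - 1 denominator)
    standard_error = sd / math.sqrt(n)   # factors in sample size and variation
    return (mean - null_mean) / standard_error

# Hypothetical measurements whose mean is exactly 100
scores = [98, 102, 100, 97, 103, 100]

t_at_null = one_sample_t(scores, null_mean=100)  # sample mean equals the null value
t_shifted = one_sample_t(scores, null_mean=95)   # null value further from the sample mean

print(f"t when null = 100: {t_at_null:.2f}")
print(f"t when null = 95:  {t_shifted:.2f}")
```

With the same data, moving the null value away from the sample mean moves the t-value away from zero.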

Read the companion post where I explain how t-tests calculate t-values.

The tricky thing about t-values is that they are a unitless statistic, which makes them difficult to interpret on their own. Imagine that we performed a t-test, and it produced a t-value of 2. What does this t-value mean exactly? We know that the sample mean doesn’t equal the null hypothesis value because this t-value doesn’t equal zero. However, we don’t know how exceptional our value is if the null hypothesis is correct.

To be able to interpret individual t-values, we have to place them in a larger context. T-distributions provide this broader context so we can determine the unusualness of an individual t-value.

What Are t-Distributions?

A single t-test produces a single t-value. Now, imagine the following process. First, let’s assume that the null hypothesis is true for the population. Now, suppose we repeat our study many times by drawing many random samples of the same size from this population. Next, we perform t-tests on all of the samples and plot the distribution of the t-values. This distribution is known as a sampling distribution, which is a type of probability distribution.

Related posts: Sampling Distributions and Understanding Probability Distributions

If we follow this procedure, we produce a graph that displays the distribution of t-values that we obtain from a population where the null hypothesis is true. We use sampling distributions to calculate probabilities for how unusual our sample statistic is if the null hypothesis is true.
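The repeated-sampling thought experiment can be tried out with a small simulation. This is a sketch under stated assumptions, not the procedure used for the post's graphs: the population is taken to be standard normal with the null hypothesis (mean of 0) true, the sample size of 21 and the 20,000 repetitions are arbitrary choices, and the function names are hypothetical.

```python
import math
import random

def t_value(sample, null_mean):
    """1-sample t-value: (sample mean - null value) / standard error."""
    n = len(sample)
    mean = sum(sample) / n
    variance = sum((x - mean) ** 2 for x in sample) / (n - 1)
    return (mean - null_mean) / math.sqrt(variance / n)

def simulate_null_t_values(n, repetitions, seed=0):
    """Draw many samples from a population where the null is true; collect t-values."""
    rng = random.Random(seed)
    t_values = []
    for _ in range(repetitions):
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]  # true mean is 0
        t_values.append(t_value(sample, null_mean=0.0))
    return t_values

t_values = simulate_null_t_values(n=21, repetitions=20_000)

# How often does a t-value at least as extreme as 2 occur when the null is true?
share_extreme = sum(abs(t) >= 2 for t in t_values) / len(t_values)
print(f"Average simulated t-value: {sum(t_values) / len(t_values):.3f}")
print(f"Share of simulated |t| >= 2: {share_extreme:.3f}")
```

A histogram of `t_values` would be centered near zero, and the share of extreme values is the kind of probability a sampling distribution is used to calculate.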

Luckily, we don’t need to go through the hassle of collecting numerous random samples to create this graph! Statisticians understand the properties of t-distributions so we can estimate the sampling distribution using the t-distribution and our sample size.

The degrees of freedom (DF) for the statistical design define the t-distribution for a particular study. The DF are closely related to the sample size. For t-tests, there is a different t-distribution for each sample size.

Related posts: Degrees of Freedom in Statistics and T Distribution: Definition and Uses.

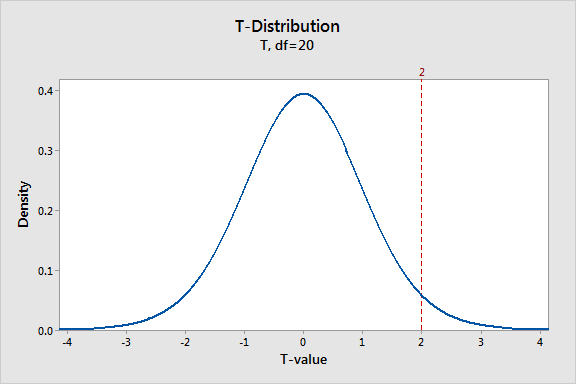

Use the t-Distribution to Compare Your Sample Results to the Null Hypothesis

T-distributions assume that the null hypothesis is correct for the population from which you draw your random samples. To evaluate how compatible your sample data are with the null hypothesis, place your study’s t-value in the t-distribution and determine how unusual it is.

The sampling distribution below displays a t-distribution with 20 degrees of freedom, which equates to a sample size of 21 for a 1-sample t-test. The t-distribution centers on zero because it assumes that the null hypothesis is true. When the null is true, your study is most likely to obtain a t-value near zero and less liable to produce t-values further from zero in either direction.

On the graph, I’ve displayed the t-value of 2 from our hypothetical study to see how our sample data compares to the null hypothesis. Under the assumption that the null is true, the t-distribution indicates that our t-value is not the most likely value. However, there still appears to be a realistic chance of observing t-values from -2 to +2.

We know that our t-value of 2 is rare when the null hypothesis is true. How rare is it exactly? Our final goal is to evaluate whether our sample t-value is so rare that it justifies rejecting the null hypothesis for the entire population based on our sample data. To proceed, we need to quantify the probability of observing our t-value.

Related post: What are Critical Values?

t-Tests Use t-Values and t-Distributions to Calculate Probabilities

Hypothesis tests work by taking the observed test statistic from a sample and using the sampling distribution to calculate the probability of obtaining that test statistic if the null hypothesis is correct. In the context of how t-tests work, you assess the likelihood of a t-value using the t-distribution. If a t-value is sufficiently improbable when the null hypothesis is true, you can reject the null hypothesis.

I have two crucial points to explain before we calculate the probability linked to our t-value of 2.

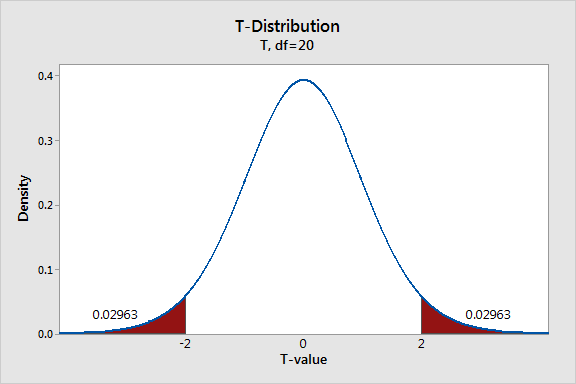

Because I’m showing the results of a two-tailed test, we’ll use the t-values of +2 and -2. Two-tailed tests allow you to assess whether the sample mean is greater than or less than the target value in a 1-sample t-test. A one-tailed hypothesis test can only determine statistical significance for one or the other.

Additionally, it is possible to calculate a probability only for a range of t-values. On a probability distribution plot, probabilities are represented by the shaded area under a distribution curve. Without a range of values, there is no area under the curve and, hence, no probability.

Related posts: One-Tailed and Two-Tailed Tests Explained and T-Distribution Table of Critical Values

t-Test Results for Our Hypothetical Study

Considering these points, the graph below finds the probability associated with t-values less than -2 and greater than +2 using the area under the curve. This graph is specific to our t-test design (1-sample t-test with N = 21).

The probability distribution plot indicates that each of the two shaded regions has a probability of 0.02963—for a total of 0.05926. This graph shows that t-values fall within these areas almost 6% of the time when the null hypothesis is true.
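The shaded areas can be checked numerically. The sketch below is not how the graph was produced; it assumes the standard Student's t density and integrates it with Simpson's rule using only the Python standard library. The helper names `t_pdf` and `area_between` are made up for this illustration, and in practice a statistics library would normally do this work.

```python
import math

def t_pdf(t, df):
    """Probability density of Student's t-distribution with `df` degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(t * t / df))

def area_between(lo, hi, df, steps=2000):
    """Area under the t density from lo to hi (Simpson's rule; steps must be even)."""
    h = (hi - lo) / steps
    total = t_pdf(lo, df) + t_pdf(hi, df)
    for i in range(1, steps):
        weight = 4 if i % 2 else 2
        total += weight * t_pdf(lo + i * h, df)
    return total * h / 3

df = 20                                  # 1-sample t-test with N = 21
central = area_between(-2.0, 2.0, df)    # probability of -2 < t < +2
two_tailed_p = 1.0 - central             # both shaded tails together
one_tail = two_tailed_p / 2              # each tail, by symmetry

print(f"Each tail: {one_tail:.5f}")
print(f"Both tails: {two_tailed_p:.5f}")
```

Splitting the total evenly between the tails relies on the t-distribution being symmetric around zero.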

There is a chance that you’ve heard of this type of probability before—it’s the P value! While the likelihood of t-values falling within these regions seems small, it’s not quite unlikely enough to justify rejecting the null under the standard significance level of 0.05.

Learn how to interpret the P value correctly and avoid a common mistake!

Related posts: How to Find the P value: Process and Calculations and Types of Errors in Hypothesis Testing

t-Distributions and Sample Size

The sample size for a t-test determines the degrees of freedom (DF) for that test, which specifies the t-distribution. The overall effect is that as the sample size decreases, the tails of the t-distribution become thicker. Thicker tails indicate that t-values are more likely to be far from zero even when the null hypothesis is correct. The changing shapes are how t-distributions factor in the greater uncertainty when you have a smaller sample.

You can see this effect in the probability distribution plot below that displays t-distributions for 5 and 30 DF.

Sample means from smaller samples tend to be less precise. In other words, with a smaller sample, it’s less surprising to have an extreme t-value, which affects the probabilities and p-values. A t-value of 2 has a P value of 10.2% and 5.4% for 5 and 30 DF, respectively. Use larger samples!
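The effect of the degrees of freedom on the P value can be sketched the same way. The code below is an illustration under assumptions: it uses the standard Student's t density and Simpson's rule rather than a statistics library, and the function names are hypothetical. It computes the two-tailed probability for a t-value of 2 at 5 and 30 DF, which comes out at roughly 10% and 5.5%, in line with the figures quoted above.

```python
import math

def t_pdf(x, df):
    """Probability density of Student's t-distribution with `df` degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))

def two_tailed_p(t, df, steps=2000):
    """P(|T| >= |t|): one minus the Simpson's-rule area over [-|t|, +|t|]."""
    a = abs(t)
    h = 2 * a / steps
    total = t_pdf(-a, df) + t_pdf(a, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(-a + i * h, df)
    return 1.0 - total * h / 3

# Same t-value, different degrees of freedom: thicker tails give larger P values
p_values = {df: two_tailed_p(2.0, df) for df in (5, 30)}
for df, p in p_values.items():
    print(f"DF = {df:2d}: two-tailed P value for t = 2 is {p:.3f}")
```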

Click here for step-by-step instructions for how to do t-tests in Excel!

If you like this approach and want to learn about other hypothesis tests, read my posts about:

- How the F-test Works in ANOVA

- How Chi-Squared Tests of Independence Work

To see an alternative to traditional hypothesis testing that does not use probability distributions and test statistics, learn about bootstrapping in statistics!


Reader Interactions

May 25, 2021 at 10:42 pm

what statistical tools, is recommended for measuring the level of satisfaction

May 26, 2021 at 3:55 pm

Hi McKienze,

The correct analysis depends on the nature of the data you have and what you want to learn. You don’t provide enough information to be able to answer the question. However, read my hypothesis testing overview to learn about the options.

August 23, 2020 at 1:33 am

Hi Jim, I want to ask about standardizing data before the t test.. For example I have USD prices of a big Mac across the world and this varies by quite a bit. Doing the t-test here would be misleading since some countries would have a higher mean… Should the approach be standardizing all the usd values? Or perhaps even local values?

August 24, 2020 at 12:37 am

Yes, that makes complete sense. I don’t know what method is best. If you can find a common scale to use for all prices, I’d do that. You’re basically using a data transformation before analysis, which is totally acceptable when you have a good reason.

April 3, 2020 at 4:47 am

Hey Jim. Your blog is one of the only few ones where everything is explained in a simple and well structured manner, in a way that both an absolute beginner and a geek can equally benefit from your writing. Both this article as well as your article on one tailed and two tailed hypothesis tests have been super helpful. Thank you for this post

March 6, 2020 at 11:04 am

Thank you, Jim, for sharing your knowledge with us.

I have a 2 part question. I am testing the difference in walking distance within a busy environment compared with a simple environment. I am also testing walking time within the 2 environments. I am using the same individuals for both scenarios. I was planning to do a paired ttest for distance difference between busy and simple environments and a 2nd paired ttest for time difference between the environments.

My question(s) for you is: 1. Do you feel that a paired ttest is the best choice for these? 2. Do you feel that, because there are 2 tests, I should do a bonferroni correction or do you believe that because the data is completely different (distance as opposed to time), it is okay not to do a multiple comparison test?

August 13, 2019 at 12:43 pm

thank you very eye opening on the use of two or one tailed test

April 19, 2019 at 3:49 pm

Hi Mr. Frost,

Thanks for the breakdown. I have a question … if I wanted to run a test to show that the medical professionals could use more training with data set consisting of questions which in your opinion would be my best route?

January 14, 2019 at 2:22 pm

Hello Jim, I find this statement in this excellent write up contradicting: “This graph shows that t-values fall within these areas almost 6% of the time when the null hypothesis is true.” I mean if this is true the t-value = 0 hypothesis is rejected.

January 14, 2019 at 2:51 pm

I can see how that statement sounds contradictory, but I can assure you that it is quite accurate. It’s often forgotten, but the underlying assumption for the calculations surrounding hypothesis testing, significance levels, and p-values is that the null hypothesis is true.

So, the probabilities shown in the graph that you refer to are based on the assumption that the null hypothesis is true. Further, t-values for this study design have a 6% chance of falling in those critical areas assuming the null is true (a false positive).