What is Research Methodology? Definition, Types, and Examples

Research methodology 1,2 is a structured and scientific approach used to collect, analyze, and interpret quantitative or qualitative data to answer research questions or test hypotheses. A research methodology is like a plan for carrying out research and helps keep researchers on track by limiting the scope of the research. Several aspects must be considered before selecting an appropriate research methodology, such as research limitations and ethical concerns that may affect your research.

The research methodology section in a scientific paper describes the different methodological choices made, such as the data collection and analysis methods, and why these choices were selected. The reasons should explain why the methods chosen are the most appropriate to answer the research question. A good research methodology also helps ensure the reliability and validity of the research findings. There are three types of research methodology—quantitative, qualitative, and mixed-method, which can be chosen based on the research objectives.

What is research methodology?

A research methodology describes the techniques and procedures used to identify and analyze information on a specific research topic. It is the process by which researchers design their study so that they can achieve their objectives using the selected research instruments. It covers all the important aspects of research, including the research design, data collection methods, data analysis methods, and the overall framework within which the research is conducted. Beyond knowing what research methodology is, you also need to understand why it is important to pick the right methodology.

Having a good research methodology in place has the following advantages: 3

- It helps other researchers who may want to replicate your research, because your explanations show them exactly what you did.

- You can easily answer any questions about your research if they arise at a later stage.

- A research methodology provides a framework and guidelines for researchers to clearly define research questions, hypotheses, and objectives.

- It helps researchers identify the most appropriate research design, sampling technique, and data collection and analysis methods.

- A sound research methodology helps researchers produce valid and reliable findings and minimize biases and errors.

- It also helps ensure that ethical guidelines are followed while conducting research.

- A good research methodology helps researchers plan their research efficiently and make the best use of their time and resources.


Types of research methodology

There are three types of research methodology based on the type of research and the data required. 1

- Quantitative research methodology focuses on measuring and testing numerical data. This approach is good for reaching a large number of people in a short amount of time. This type of research helps test causal relationships between variables, make predictions, and generalize results to wider populations.

- Qualitative research methodology examines people's opinions, behaviors, and experiences. It collects and analyzes words and other textual data. This methodology usually requires fewer participants but is still more time-consuming, because researchers spend much more time with each participant. It is often used in exploratory research, where the research problem being investigated is not yet clearly defined.

- Mixed-method research methodology uses the characteristics of both quantitative and qualitative research methodologies in the same study. This method allows researchers to validate their findings, verify if the results observed using both methods are complementary, and explain any unexpected results obtained from one method by using the other method.

What are the types of sampling designs in research methodology?

Sampling 4 is an important part of a research methodology. It involves selecting a representative sample from the population, studying that sample, and using statistical inference to estimate the characteristics of the whole population. There are two types of sampling designs in research methodology: probability and nonprobability.

- Probability sampling

In this type of sampling design, a sample is chosen from a larger population using some form of random selection, so every member of the population has a known, nonzero chance of being selected (in simple random sampling, every member has an equal chance). The different types of probability sampling are:

- Systematic: sample members are chosen at regular intervals from an ordered list of the population. The researcher sets the sampling interval (population size divided by the desired sample size), picks a random starting point within the first interval, and then selects every member that falls at that interval. Because the selection rule is fixed in advance, it is one of the least time-consuming sampling methods.

- Stratified: researchers divide the population into smaller, non-overlapping groups (strata) that together represent the entire population, usually based on a shared characteristic such as age group or region. A random sample is then drawn from each stratum separately.

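- Cluster: the population is divided into clusters, usually naturally occurring groups such as schools, households, or geographic areas. Whole clusters are then selected at random, and all members of the chosen clusters (or a random subset of them) are included in the sample.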
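The three probability designs above can be sketched in Python. This is a minimal illustration rather than a standard implementation: the function names (`systematic_sample`, `stratified_sample`, `cluster_sample`), the proportional-allocation rule for strata, and the student and school data are hypothetical choices made for the example. Only the standard-library `random` and `collections` modules are used.

```python
import random
from collections import Counter, defaultdict


def systematic_sample(population, n, rng):
    """Systematic sampling: every k-th member after a random start (assumes 1 <= n <= len(population))."""
    k = len(population) // n                  # sampling interval
    start = rng.randrange(k)                  # random start within the first interval
    return [population[start + i * k] for i in range(n)]


def stratified_sample(population, stratum_of, n, rng):
    """Stratified sampling with proportional allocation.

    Each stratum contributes round(n * stratum_size / population_size)
    randomly chosen members, so rounding can make the total differ slightly from n.
    """
    strata = defaultdict(list)
    for member in population:
        strata[stratum_of(member)].append(member)
    sample = []
    for members in strata.values():
        share = round(n * len(members) / len(population))
        sample.extend(rng.sample(members, share))
    return sample


def cluster_sample(clusters, n_clusters, rng):
    """One-stage cluster sampling: pick whole clusters at random, keep all their members."""
    chosen = rng.sample(sorted(clusters), n_clusters)
    return [member for name in chosen for member in clusters[name]]


# Hypothetical population: 100 students, 25 in each of four study years.
rng = random.Random(7)
students = [{"id": i, "year": 1 + i % 4} for i in range(100)]

print(len(systematic_sample(students, 10, rng)))          # 10

strat = stratified_sample(students, lambda s: s["year"], 20, rng)
print(sorted(Counter(s["year"] for s in strat).items()))  # [(1, 5), (2, 5), (3, 5), (4, 5)]

# Hypothetical clusters: the same students grouped by school.
schools = {"North": students[:30], "South": students[30:70], "East": students[70:]}
print(len(cluster_sample(schools, 2, rng)))               # 60 or 70, depending on which schools are drawn
```

Note the contrast the sketch makes visible: stratified sampling draws from every group, while cluster sampling keeps some groups entirely and skips the others.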

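- Nonprobability sampling

In this type of sampling design, participants are selected using non-random criteria such as accessibility or the researcher's judgment, so not every member of the population has a known chance of being selected. Results from these samples are harder to generalize to the whole population. The different types of nonprobability sampling are:

- Convenience: selects participants who are most easily accessible to researchers due to geographical proximity, availability at a particular time, etc.

- Purposive: participants are selected at the researcher's discretion, based on the purpose of the study and the researcher's understanding of the target population.

- Snowball: already selected participants use their social networks to refer the researcher to other potential participants.

- Quota: while designing the study, the researchers decide how many participants with each characteristic to include and then recruit until each quota is filled. The characteristics help in choosing the people most likely to provide insights into the subject.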
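Quota sampling resembles stratified sampling, but the quotas are filled with whoever becomes available first rather than by random selection. The sketch below uses a hypothetical `quota_sample` helper and made-up respondents grouped by age band; it only illustrates the selection logic, not a recruitment protocol.

```python
def quota_sample(arrivals, group_of, quotas):
    """Fill fixed quotas with respondents in the order they become available.

    Nobody is selected at random: whoever arrives first fills a quota,
    which is what makes this a nonprobability design.
    """
    remaining = dict(quotas)                  # places still open in each group
    sample = []
    for person in arrivals:
        group = group_of(person)
        if remaining.get(group, 0) > 0:       # skip people whose group is already full
            sample.append(person)
            remaining[group] -= 1
        if not any(remaining.values()):       # stop once every quota is filled
            break
    return sample


# Hypothetical respondents, listed in the order they reach the researcher.
arrivals = [("Ana", "under 35"), ("Ben", "35+"), ("Cal", "35+"), ("Dee", "under 35"),
            ("Eli", "35+"), ("Fay", "under 35")]
picked = quota_sample(arrivals, lambda p: p[1], {"under 35": 2, "35+": 2})
print([name for name, _ in picked])   # ['Ana', 'Ben', 'Cal', 'Dee']
```

Because selection stops as soon as every quota is full, Eli and Fay never had a chance of being chosen. The sample can match the population's proportions without being a probability sample.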

What are data collection methods?

During research, data are collected using various methods, depending on the research methodology being followed and the research methods being used. Qualitative and quantitative research use different data collection methods, as listed below.

Qualitative research 5

- One-on-one interviews: Help the interviewer understand a respondent's subjective opinions and experiences of a specific topic or event.

- Document study/literature review/record keeping: Researchers review existing written materials such as archives, annual reports, research articles, guidelines, and policy documents.

- Focus groups: Constructive discussions, usually involving a small sample of about 6 to 10 people and a moderator, to understand the participants' opinions on a given topic.

- Qualitative observation: Researchers collect data using their five senses (sight, smell, touch, taste, and hearing).

Quantitative research 6

- Sampling: The most common type is probability sampling.

- Interviews: Commonly conducted by telephone or in person.

- Observations: Structured observations are most commonly used in quantitative research. In this method, researchers make observations about specific behaviors of individuals in a structured setting.

- Document review: Reviewing existing research or documents to collect evidence for supporting the research.

- Surveys and questionnaires: Surveys can be administered both online and offline, depending on the requirements and sample size.


What are data analysis methods?

The data collected using the various methods for qualitative and quantitative research need to be analyzed to generate meaningful conclusions. These data analysis methods 7 also differ between quantitative and qualitative research.

Quantitative research uses a deductive approach to data analysis: hypotheses are developed at the beginning of the research, and precise measurement is required. The methods apply statistical analysis to numerical data and fall into two categories: descriptive and inferential.

Descriptive analysis summarizes the basic features of a dataset and presents them so that patterns become meaningful. The different types of descriptive analysis methods are:

- Measures of frequency (count, percent, frequency)

- Measures of central tendency (mean, median, mode)

- Measures of dispersion or variation (range, variance, standard deviation)

- Measures of position (percentile ranks, quartile ranks)
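As an illustration, the four groups of descriptive measures can be computed with Python's standard-library `statistics` module. The survey scores are hypothetical, and `statistics.quantiles` (Python 3.8+) returns quartile cut points rather than percentile ranks for individual values. The outputs in the comments were worked out by hand for this data.

```python
import statistics
from collections import Counter

scores = [72, 85, 85, 90, 64, 78, 85, 70, 92, 79]    # hypothetical survey scores

# Measures of frequency: how often a value occurs, as a count and a percentage
counts = Counter(scores)
percent_85 = 100 * counts[85] / len(scores)

# Measures of central tendency
mean = statistics.mean(scores)
median = statistics.median(scores)
mode = statistics.mode(scores)

# Measures of dispersion (variance and stdev use the sample, n - 1, formulas)
value_range = max(scores) - min(scores)
variance = statistics.variance(scores)
stdev = statistics.stdev(scores)

# Measures of position: quartile cut points (Q2 equals the median)
q1, q2, q3 = statistics.quantiles(scores, n=4)

print(f"85 occurs {counts[85]} times ({percent_85}% of responses)")     # 85 occurs 3 times (30.0% of responses)
print(f"mean={mean}, median={median}, mode={mode}")                    # mean=80, median=82.0, mode=85
print(f"range={value_range}, variance={variance:.2f}, sd={stdev:.2f}")  # range=28, variance=82.67, sd=9.09
print(f"quartiles={q1}, {q2}, {q3}")                                    # quartiles=71.5, 82.0, 86.25
```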

Inferential analysis is used to draw conclusions and make predictions about a larger population based on data collected from a sample of it. It is also used to study the relationships between different variables. Some commonly used inferential data analysis methods are:

- Correlation: To understand the relationship between two or more variables.

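- Cross-tabulation: To analyze the relationship between two or more categorical variables.

- Regression analysis: To study the impact of one or more independent variables on a dependent variable.

- Frequency tables: To understand how often each value occurs. Frequency tables are themselves descriptive, but they are often the starting point for inferential tests on categorical data, such as the chi-square test.

- Analysis of variance (ANOVA): To test whether the means of two or more groups differ significantly, for example, across the conditions of an experiment.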

Qualitative research involves an inductive method for data analysis where hypotheses are developed after data collection. The methods include:

- Content analysis: For analyzing documented information from text and images by determining the presence of certain words or concepts in texts.

- Narrative analysis: For analyzing content obtained from sources such as interviews, field observations, and surveys. The stories and opinions shared by people are used to answer research questions.

- Discourse analysis: For analyzing language and interactions in light of the social context (that is, the lifestyle and environment) in which they occur.

- Grounded theory: Involves developing a theory from systematically collected and analyzed data to explain why a phenomenon occurs.

- Thematic analysis: To identify important themes or patterns in data and use these to address an issue.
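Content analysis, the first method in this list, is the easiest to illustrate in code. The sketch below is a deliberately simplified, dictionary-based version: the coding scheme, its keywords, and the interview snippets are hypothetical. Real content analysis normally relies on a carefully defined codebook and trained human coders rather than keyword matching alone.

```python
import re
from collections import Counter

# Hypothetical coding scheme: each concept is detected by a few keywords
CODING_SCHEME = {
    "cost": {"price", "expensive", "cheap", "cost"},
    "time": {"time", "hours", "deadline", "quick"},
    "support": {"help", "support", "mentor", "advice"},
}


def code_documents(documents, scheme):
    """Count how many documents mention each concept at least once."""
    presence = Counter()
    for text in documents:
        words = set(re.findall(r"[a-z]+", text.lower()))   # crude tokenization
        for concept, keywords in scheme.items():
            if words & keywords:                          # any keyword present?
                presence[concept] += 1
    return presence


# Hypothetical interview snippets
transcripts = [
    "The software was expensive, but my mentor gave good advice.",
    "I never had enough time before the deadline.",
    "Support from the library saved me hours.",
]
result = code_documents(transcripts, CODING_SCHEME)
print(dict(sorted(result.items())))   # {'cost': 1, 'support': 2, 'time': 2}
```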

How to choose a research methodology?

Here are some important factors to consider when choosing a research methodology: 8

- Research objectives, aims, and questions: these help structure the research design.

- Existing literature: review it to identify any gaps in knowledge.

- Statistical requirements: if data-driven or statistical results are needed, quantitative research is usually the better choice. If the research questions can be answered based on people's opinions and perceptions, qualitative research is more suitable.

- Sample size: sample size often determines whether a research methodology is feasible. For a large sample, methods that require less effort and time per participant are appropriate.

- Constraints: constraints of time, geography, and resources can help define the appropriate methodology.


How to write a research methodology

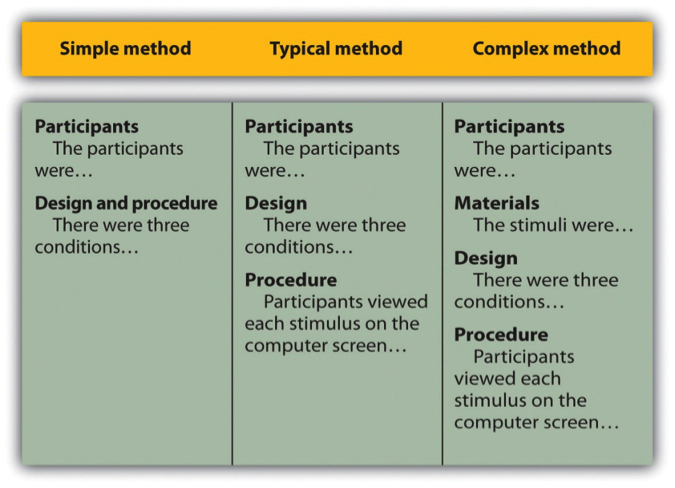

A research methodology should include the following components: 3,9

- Research design: should be selected based on the research question and the data required. Common research designs include experimental, quasi-experimental, correlational, descriptive, and exploratory.

- Research method: this can be quantitative, qualitative, or mixed-method.

- Reason for selecting a specific methodology: explain why this methodology is the most suitable to answer your research problem.

- Research instruments: explain the research instruments you plan to use, mainly the data collection tools such as interviews and surveys. Here, too, give a reason for selecting each instrument.

- Sampling: this involves selecting a representative subset of the population being studied.

- Data collection: this involves gathering data using one or more data collection methods, such as surveys and interviews.

- Data analysis: describe the data analysis methods you will use once you've collected the data.

- Research limitations: mention any limitations you foresee while conducting your research.

- Validity and reliability: validity refers to the accuracy and truthfulness of the findings; reliability refers to the consistency and stability of the results over time and across different conditions.

- Ethical considerations: research should be conducted ethically. The considerations include obtaining consent from participants, maintaining confidentiality, and addressing conflicts of interest.


Frequently Asked Questions

Q1. What are the key components of research methodology?

A1. A good research methodology has the following key components:

- Research design

- Data collection procedures

- Data analysis methods

- Ethical considerations

Q2. Why is ethical consideration important in research methodology?

A2. Ethical considerations are important in research methodology because they assure readers of the reliability and validity of the study. Researchers must clearly state the ethical norms and standards followed while conducting the research and mention whether the research has been approved by an institutional review or ethics board. The following 10 points are important principles related to ethical considerations: 10

- Participants should not be subjected to harm.

- Respect for the dignity of participants should be prioritized.

- Fully informed consent should be obtained from participants before the study.

- Participants’ privacy should be ensured.

- Confidentiality of the research data should be ensured.

- Anonymity of individuals and organizations participating in the research should be maintained.

- The aims and objectives of the research should not be exaggerated.

- Affiliations, sources of funding, and any possible conflicts of interest should be declared.

- Communication in relation to the research should be honest and transparent.

- Misleading information and biased representation of primary data findings should be avoided.

Q3. What is the difference between methodology and method?

A3. Research methodology and research methods are often confused, but they are different. Research methods are the specific techniques, procedures, and tools researchers use to collect, analyze, and interpret data, for instance surveys, questionnaires, and interviews. Research methodology is the broader framework for how research is planned, conducted, and analyzed, and it guides researchers in choosing the most appropriate methods for their study.

Research methodology is, thus, an integral part of a research study. It helps ensure that you stay on track to meet your research objectives and answer your research questions using the most appropriate data collection and analysis tools based on your research design.


References

1. Research methodologies. Pfeiffer Library website. Accessed August 15, 2023. https://library.tiffin.edu/researchmethodologies/whatareresearchmethodologies

2. Types of research methodology. Eduvoice website. Accessed August 16, 2023. https://eduvoice.in/types-research-methodology/

3. The basics of research methodology: A key to quality research. Voxco website. Accessed August 16, 2023. https://www.voxco.com/blog/what-is-research-methodology/

4. Sampling methods: Types with examples. QuestionPro website. Accessed August 16, 2023. https://www.questionpro.com/blog/types-of-sampling-for-social-research/

5. What is qualitative research? Methods, types, approaches, examples. Researcher.Life blog. Accessed August 15, 2023. https://researcher.life/blog/article/what-is-qualitative-research-methods-types-examples/

6. What is quantitative research? Definition, methods, types, and examples. Researcher.Life blog. Accessed August 15, 2023. https://researcher.life/blog/article/what-is-quantitative-research-types-and-examples/

7. Data analysis in research: Types & methods. QuestionPro website. Accessed August 16, 2023. https://www.questionpro.com/blog/data-analysis-in-research/#Data_analysis_in_qualitative_research

8. Factors to consider while choosing the right research methodology. PhD Monster website. Accessed August 17, 2023. https://www.phdmonster.com/factors-to-consider-while-choosing-the-right-research-methodology/

9. What is research methodology? Research and writing guides. Accessed August 14, 2023. https://paperpile.com/g/what-is-research-methodology/

10. Ethical considerations. Business Research Methodology website. Accessed August 17, 2023. https://research-methodology.net/research-methodology/ethical-considerations/


Research Methodology Guide: Writing Tips, Types, & Examples


No dissertation or research paper is complete without the research methodology section. Since this is the chapter where you explain how you carried out your research, this is where all the meat is! Here’s where you clearly lay out the steps you have taken to test your hypothesis or research problem.

Through this blog, we'll unravel the complexities and meaning of research methodology in academic writing, from its fundamental principles and ethics to the diverse types of research methodology in use today. Alongside research methodology examples, we aim to guide you on how to write a research methodology, ensuring your research endeavors are both impactful and impeccably grounded!


Let’s first take a closer look at a simple research methodology definition:

Defining research methodology

Research methodology is the set of procedures and techniques used to collect, analyze, and interpret data to understand and solve a research problem. Methodology in research not only includes the design and methods but also the basic principles that guide the choice of specific methods.

Grasping the concept of methodology in research is essential for students and scholars, as it demonstrates the thorough, structured approach used to explore a hypothesis or research question. Understanding what methodology means also helps you identify and justify the methods used to collect data. Whichever research approach you take, make sure your methodology section follows the proper research paper format.

Now let’s explore some research methodology types:

Types of research methodology

1. Qualitative research methodology

Qualitative research methodology is aimed at understanding concepts, thoughts, or experiences. This approach is descriptive and is often utilized to gather in-depth insights into people’s attitudes, behaviors, or cultures. Qualitative research methodology involves methods like interviews, focus groups, and observation. The strength of this methodology lies in its ability to provide contextual richness.

2. Quantitative research methodology

Quantitative research methodology, on the other hand, is focused on quantifying the problem by generating numerical data or data that can be transformed into usable statistics. It uses measurable data to formulate facts and uncover patterns in research. Quantitative research methodology typically involves surveys, experiments, or statistical analysis. This methodology is valued for producing objective results that can be generalized to a larger population when the sample is representative.

3. Mixed-methods research methodology

Mixed-methods research combines qualitative and quantitative research methodologies to provide a more comprehensive understanding of the research problem. This approach leverages the strengths of both methodologies to gain deeper insight into the research question.

Research methodology vs. research methods

The research methodology or design is the overall strategy and rationale that you used to carry out the research. Research methods, by contrast, are the specific tools and processes you use to gather and understand the data you need to test your hypothesis.

Research methodology examples and application

To further understand research methodology, let’s explore some examples of research methodology:

a. Qualitative research methodology example: A study exploring the impact of author branding on author popularity might utilize in-depth interviews to gather personal experiences and perspectives.

b. Quantitative research methodology example: A research project investigating the effects of a book promotion technique on book sales could employ a statistical analysis of profit margins and sales before and after the implementation of the method.

c. Mixed-Methods research methodology example: A study examining the relationship between social media use and academic performance might combine both qualitative and quantitative approaches. It could include surveys to quantitatively assess the frequency of social media usage and its correlation with grades, alongside focus groups or interviews to qualitatively explore students’ perceptions and experiences regarding how social media affects their study habits and academic engagement.

These examples highlight the meaning of methodology in research and how it guides the research process, from data collection to analysis, ensuring the study’s objectives are met efficiently.

Importance of methodology in research papers

When it comes to writing your study, the methodology in research papers or a dissertation plays a pivotal role. A well-crafted methodology section of a research paper or thesis not only enhances the credibility of your research but also provides a roadmap for others to replicate or build upon your work.

How to structure the research methods chapter

Wondering how to write the research methodology section? Follow these steps to create a strong methods chapter:

Step 1: Explain your research methodology

At the start of a research paper, you would have provided the background of your research and stated your hypothesis or research problem. In this section, you will elaborate on your research strategy.

Begin by restating your research question and proceed to explain what type of research you opted for to test it. Depending on your research, here are some questions you can consider:

a. Did you use qualitative or quantitative data to test the hypothesis?

b. Did you perform an experiment where you collected data or are you writing a dissertation that is descriptive/theoretical without data collection?

c. Did you use primary data that you collected yourself, or did you analyze secondary or existing data as part of your study?

These questions will help you establish the rationale for your study on a broader level, which you will follow by elaborating on the specific methods you used to collect and understand your data.

Step 2: Explain the methods you used to test your hypothesis

Now that you have told your reader what type of research you’ve undertaken for the dissertation, it’s time to dig into specifics. State what specific methods you used and explain the conditions and variables involved. Explain what the theoretical framework behind the method was, what samples you used for testing it, and what tools and materials you used to collect the data.

Step 3: Explain how you analyzed the results

Once you have explained the data collection process, explain how you analyzed and studied the data. Here, your focus is simply to explain the methods of analysis rather than the results of the study.

Here are some questions you can answer at this stage:

a. What tools or software did you use to analyze your results?

b. What parameters or variables did you consider while understanding and studying the data you’ve collected?

c. Was your analysis based on a theoretical framework?

Your mode of analysis will change depending on whether you used a quantitative or qualitative research methodology in your study. If you're working within the hard or physical sciences, you are likely to use a quantitative research methodology (relying on numbers and hard data). If you're doing a qualitative study in the social sciences or humanities, your analysis may rely on understanding language and the socio-political contexts around your topic. This is why it's important to establish what kind of study you're undertaking at the outset.

Step 4: Defend your choice of methodology

Now that you have gone through your research process in detail, you'll also have to make a case for it. Justify your choice of methodology and methods, explaining why they are the best choice for your research question. This is especially important if you have chosen an unconventional approach or are studying an existing research problem from a different perspective. Compare your approach with other methodologies, especially ones attempted by previous researchers, and discuss what your methodology contributes.

Step 5: Discuss the obstacles you encountered and how you overcame them

No matter how thorough a methodology is, it doesn’t come without its hurdles. This is a natural part of scientific research that is important to document so that your peers and future researchers are aware of it. Writing in a research paper about this aspect of your research process also tells your evaluator that you have actively worked to overcome the pitfalls that came your way and you have refined the research process.

Tips to write an effective methodology chapter

1. Remember who you are writing for. Keeping the reader or evaluator in mind will help you decide what to elaborate on and what information they are likely to know already. You're condensing months of research into a few pages, so omit basic definitions and information about general phenomena that readers already know.

2. Do not give an overly elaborate explanation of every single condition in your study.

3. Skip details and findings irrelevant to the results.

4. Cite references that back your claim and choice of methodology.

5. Consistently emphasize the relationship between your research question and the methodology you adopted to study it.

To sum it up, what is methodology in research? It’s the blueprint of your research, essential for ensuring that your study is systematic, rigorous, and credible. Whether your focus is on qualitative research methodology, quantitative research methodology, or a combination of both, understanding and clearly defining your methodology is key to the success of your research.


© 2024 All rights reserved


Research report guide: Definition, types, and tips

Last updated: 5 March 2024


From successful product launches or software releases to planning major business decisions, research reports serve many vital functions. They can summarize evidence and deliver insights and recommendations to save companies time and resources. They can reveal the most value-adding actions a company should take.

However, poorly constructed reports can have the opposite effect! Taking the time to learn established research-reporting rules and approaches will equip you with in-demand skills. You’ll be able to capture and communicate information applicable to numerous situations and industries, adding another string to your resume bow.

What are research reports?

A research report is a collection of contextual data, gathered through organized research, that provides new insights into a particular challenge (which, for this article, is business-related). Research reports are a time-tested method for distilling large amounts of data into a narrow band of focus.

Their effectiveness often hinges on whether the report provides:

Strong, well-researched evidence

Comprehensive analysis

Well-considered conclusions and recommendations

Though the topic possibilities are endless, an effective research report keeps a laser-like focus on the specific questions or objectives the researcher believes are key to achieving success. Many research reports begin as research proposals, which usually include the need for a report to capture the findings of the study and recommend a course of action.

Depending on the study, a research report may include or draw on:

A description of the research method used, e.g., qualitative, quantitative, or other

Statistical analysis

Causal (or explanatory) research (i.e., research identifying cause-and-effect relationships between variables)

Inductive research, also known as ‘theory-building’

Deductive research, such as that used to test theories

Action research, where the research is actively used to drive change

Importance of a research report

Research reports can unify and direct a company's focus toward the most appropriate strategic action. Of course, producing a report consumes some of the company's human and financial resources, so deciding when a report is called for is a matter of judgment and experience.

Some development models used heavily in the engineering world, such as Waterfall development, are notorious for over-relying on research reports. With Waterfall development, there is a linear progression through each step of a project, and each stage is precisely documented and reported on before moving to the next.

The business world often moves faster than authors can produce and disseminate reports. So how do companies strike the right balance between creating and acting on research reports?

The answer lies, again, in the report's defined objectives. By narrowing your focus to your most pressing interests and those of your stakeholders, you can use your research and reporting skills as a lens that keeps your company's priorities sharp.

Honing your company's primary objectives can save significant amounts of time and align research and reporting efforts with ever-greater precision.

Some examples of well-designed research objectives are:

Proving whether or not a product or service meets customer expectations

Demonstrating the value of a service, product, or business process to your stakeholders and investors

Improving business decision-making when faced with a lack of time or other constraints

Clarifying the relationship between a critical cause and effect for problematic business processes

Prioritizing the development of a backlog of products or product features

Comparing business or production strategies

Evaluating past decisions and predicting future outcomes

Features of a research report

Research reports generally require a research design phase, where the report author(s) determine the most important elements the report must contain.

Just as there are various kinds of research, there are many types of reports.

Here are the standard elements of almost any research-reporting format:

Report summary. A broad but comprehensive overview of what readers will learn in the full report. Summaries are usually no more than one or two paragraphs and address all key elements of the report. Think of the key takeaways your primary stakeholders will want to know if they don’t have time to read the full document.

Introduction. Include a brief background of the topic, the type of research, and the research sample. Consider the primary goal of the report, who is most affected, and how far along the company is in meeting its objectives.

Methods. A description of how the researcher carried out data collection, analysis, and final interpretations of the data. Include the reasons for choosing a particular method. The methods section should strike a balance between clearly presenting the approach taken to gather data and discussing how it is designed to achieve the report's objectives.

Data analysis. This section contains interpretations that lead readers through the results relevant to the report's thesis. If there were unexpected results, discuss possible reasons for them here. Charts, calculations, statistics, and other supporting information also belong here (or, if lengthy, in an appendix). This should be the most detailed section of the research report, with references for further study. Present the information in a logical order, whether chronologically or in order of importance to the report's objectives.

Conclusion. This should be written with sound reasoning, often containing useful recommendations. The conclusion must be backed by a continuous thread of logic throughout the report.

How to write a research report

With a clear outline and a robust pool of research, a research report can almost write itself. But what's a good way to start one?

Research report examples are often the quickest way to gain inspiration for your report. Look for the types of research reports most relevant to your industry and consider which makes the most sense for your data and goals.

The research report outline will help you organize the elements of your report. One of the most time-tested report outlines is the IMRaD structure:

Introduction

Methods

Results

...and Discussion

Pay close attention to the most well-established research reporting format in your industry, and consider your tone and language from your audience's perspective. Learn the key terms inside and out; incorrect jargon could easily harm the perceived authority of your research paper.

Along with a foundation in high-quality research and razor-sharp analysis, the most effective research reports will also demonstrate well-developed:

Internal logic

Narrative flow

Conclusions and recommendations

Readability, striking a balance between simple phrasing and technical insight

How to gather research data for your report

The validity of research data is critical. Because the research phase usually occurs well before the writing phase, you normally have plenty of time to vet your data.

However, research reports could involve ongoing research, where report authors (sometimes the researchers themselves) write portions of the report alongside ongoing research.

One such research-report example would be an R&D department that knows its primary stakeholders are eager to learn about a lengthy work in progress and any potentially important outcomes.

However you choose to manage the research and reporting, your data must meet robust quality standards before you can rely on it. Vet any research with the following questions in mind:

Does it use statistically valid analysis methods?

Do the researchers clearly explain their research, analysis, and sampling methods?

Did the researchers provide any caveats or advice on how to interpret their data?

Have you gathered the data yourself or were you in close contact with those who did?

Is the source biased?

Usually, flawed research methods become more apparent the further you get through a research report.

It's perfectly natural for good research to raise new questions, but the reader should have no uncertainty about what the data represents. There should be no doubt about matters such as:

Whether the sampling or analysis methods were based on sound and consistent logic

What the research samples are and where they came from

The accuracy of any statistical functions or equations

Validation of testing and measuring processes

When does a report require design validation?

A robust design validation process is often a gold standard in highly technical research reports. Design validation ensures the objects of a study are measured accurately, which lends more weight to your report and makes it valuable to more specialized industries.

Product development and engineering projects are the most common research-report examples that typically involve a design validation process. Depending on the scope and complexity of your research, you might face additional steps to validate your data and research procedures.

If you’re including design validation in the report (or report proposal), explain and justify your data-collection processes. Good design validation builds greater trust in a research report and lends more weight to its conclusions.

Choosing the right analysis method

Just as the quality of your report depends on properly validated research, a useful conclusion requires the most contextually relevant analysis method. This means comparing different statistical methods and choosing the one that makes the most sense for your research.

Most broadly, research analysis comes down to quantitative or qualitative methods (respectively: values measurable as numbers vs. subjectively assessed qualities). There are also mixed methods, which combine hard data with qualitative assessments while still reaching a cohesive set of conclusions.

Some of the most common analysis methods in research reports include:

Significance testing (aka hypothesis testing), which compares test and control groups to estimate how likely it is that a difference at least as large as the one observed would arise from random chance alone.

Regression analysis, to estimate relationships between variables, control for extraneous variables, and support correlation analysis.

Correlation analysis (aka bivariate testing), a method to identify and determine the strength of linear relationships between variables. It’s effective for detecting patterns in complex data, but take care not to confuse correlation with causation.
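To make the three methods above more concrete, here is a minimal sketch in Python using only the standard library. It assumes small, hypothetical datasets, and the function names (`permutation_test`, `least_squares`, `pearson_r`) are illustrative rather than taken from any particular tool. The significance test shown is a permutation test, which is one of several options (a t-test is another common choice), and the regression has a single predictor, so unlike a multiple regression it does not control for extraneous variables.

```python
import math
import random
import statistics


def permutation_test(group_a, group_b, n_resamples=10_000, seed=0):
    """Two-sided permutation test for a difference in group means.

    Shuffles the group labels many times and counts how often a difference
    at least as large as the observed one appears by chance alone.
    """
    rng = random.Random(seed)
    observed = abs(statistics.mean(group_a) - statistics.mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        diff = abs(statistics.mean(pooled[:n_a]) - statistics.mean(pooled[n_a:]))
        if diff >= observed:
            extreme += 1
    # Adding 1 to both counts treats the observed labelling as one of the
    # resamples, so the estimated p-value is never exactly zero.
    return (extreme + 1) / (n_resamples + 1)


def _centered_sums(x, y):
    """Return (Sxy, Sxx, Syy): sums of products of deviations from the means."""
    mean_x, mean_y = statistics.mean(x), statistics.mean(y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    return sxy, sxx, syy


def least_squares(x, y):
    """Simple linear regression: fit y = intercept + slope * x by least squares."""
    sxy, sxx, _ = _centered_sums(x, y)
    slope = sxy / sxx
    intercept = statistics.mean(y) - slope * statistics.mean(x)
    return intercept, slope


def pearson_r(x, y):
    """Pearson correlation: strength of the linear relationship, from -1 to 1."""
    sxy, sxx, syy = _centered_sums(x, y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical data: satisfaction scores for a test group and a control group.
test_group = [7, 8, 6, 9, 8]
control_group = [5, 6, 5, 7, 6]
print("p-value:", permutation_test(test_group, control_group))

# Hypothetical data: weekly support hours (x) vs. satisfaction score (y).
hours = [1, 2, 3, 4, 5]
scores = [2, 4, 5, 4, 5]
print("intercept, slope:", least_squares(hours, scores))  # approx. (2.2, 0.6)
print("r:", pearson_r(hours, scores))  # approx. 0.775
```

A small p-value suggests the difference between the groups is unlikely to be due to chance alone. A correlation coefficient or regression slope, on its own, still says nothing about cause and effect.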

With any analysis method, it's important to justify in the report why you chose it. You should also report the statistical uncertainty of any quantitative analysis (e.g., p-values or confidence intervals).

This requires a commitment to the report's primary aim. For instance, this may be achieving a certain level of customer satisfaction by analyzing the cause and effect of changes to how service is delivered. Even better, use statistical analysis to calculate which change is most positively correlated with improved levels of customer satisfaction.

Tips for writing research reports

There's endless good advice for writing effective research reports, and almost all of it depends on the subjective aims of the people behind the report. Due to the wide variety of research reports, the best tips will be unique to each author's purpose.

Consider the following research report tips in any order, and take note of the ones most relevant to you:

No matter how in depth or detailed your report might be, provide a well-considered, succinct summary. At the very least, give your readers a quick and effective way to get up to speed.

Narrow down your target audience (e.g., other researchers, employees, laypersons), and adjust your voice to their background knowledge and interest levels.

For all but the most open-ended research, clarify your objectives, both for yourself and within the report.

Leverage your team members’ talents to fill in any knowledge gaps you might have. Your team is only as good as the sum of its parts.

Justify why your research proposal’s topic will stay relevant long enough for the finished report to deliver value.

Consolidate all research and analysis functions onto a single user-friendly platform. There's no reason to settle for less than developer-grade tools suitable for non-developers.

What's the format of a research report?

The research-reporting format is how the report is structured—a framework the authors use to organize their data, conclusions, arguments, and recommendations. The format heavily determines how the report's outline develops, because the format dictates the overall structure and order of information (based on the report's goals and research objectives).

What's the purpose of a research-report outline?

A good report outline gives form and substance to the report's objectives, presenting the results in a readable, engaging way. For any research-report format, the outline should create momentum along a chain of logic that builds up to a conclusion or interpretation.

What's the difference between a research essay and a research report?

There are several key differences between research reports and essays:

Research report:

Ordered into separate sections

More commercial in nature

Often includes infographics

Heavily descriptive

More self-referential

Usually provides recommendations

Research essay:

Does not rely on research report formatting

More academically minded

Normally text-only

Less detailed

Omits discussion of methods

Usually non-prescriptive


What Is Research Methodology?

If you’re new to formal academic research, it’s quite likely that you’re feeling a little overwhelmed by all the technical lingo that gets thrown around. And who could blame you – “research methodology”, “research methods”, “sampling strategies”… it all seems never-ending!

In this post, we’ll demystify the landscape with plain-language explanations and loads of examples (including easy-to-follow videos), so that you can approach your dissertation, thesis or research project with confidence. Let’s get started.

Research Methodology 101

- What exactly research methodology means

- What qualitative, quantitative and mixed methods are

- What sampling strategy is

- What data collection methods are

- What data analysis methods are

- How to choose your research methodology

- Example of a research methodology

What is research methodology?

Research methodology simply refers to the practical “how” of a research study. More specifically, it’s about how a researcher systematically designs a study to ensure valid and reliable results that address the research aims, objectives and research questions. In particular, it covers how the researcher decided:

- What type of data to collect (e.g., qualitative or quantitative data)

- Who to collect it from (i.e., the sampling strategy)

- How to collect it (i.e., the data collection method)

- How to analyse it (i.e., the data analysis methods)

Within any formal piece of academic research (be it a dissertation, thesis or journal article), you’ll find a research methodology chapter or section which covers the aspects mentioned above. Importantly, a good methodology chapter explains not just what methodological choices were made, but also why they were made. In other words, the methodology chapter should justify the design choices by showing that the chosen methods and techniques are the best fit for the research aims, objectives and research questions.

So, it’s the same as research design?

Not quite. As we mentioned, research methodology refers to the collection of practical decisions regarding what data you’ll collect, from whom, how you’ll collect it and how you’ll analyse it. Research design, on the other hand, is more about the overall strategy you’ll adopt in your study: for example, whether you’ll use an experimental design in which you manipulate one variable while controlling others.


What are qualitative, quantitative and mixed-methods?

Qualitative, quantitative and mixed-methods are different types of methodological approaches, distinguished by their focus on words, numbers or both. This is a bit of an oversimplification, but it’s a good starting point for understanding.

Let’s take a closer look.

Qualitative research refers to research which focuses on collecting and analysing words (written or spoken) and textual or visual data, whereas quantitative research focuses on measurement and testing using numerical data. Qualitative analysis can also focus on other “softer” data points, such as body language or visual elements.

It’s quite common for a qualitative methodology to be used when the research aims and research questions are exploratory in nature. For example, a qualitative methodology might be used to understand people’s perceptions about an event that took place, or a political candidate running for president.

Contrasted to this, a quantitative methodology is typically used when the research aims and research questions are confirmatory in nature. For example, a quantitative methodology might be used to measure the relationship between two variables (e.g. personality type and likelihood to commit a crime) or to test a set of hypotheses.

As you’ve probably guessed, a mixed-methods methodology attempts to combine the best of both qualitative and quantitative methodologies to integrate perspectives and create a rich picture.

What is sampling strategy?

Simply put, sampling is about deciding who (or where) you’re going to collect your data from. Why does this matter? Well, generally it’s not possible to collect data from every single person in your group of interest (this is called the “population”), so you’ll need to engage a smaller portion of that group that’s accessible and manageable (this is called the “sample”).

How you go about selecting the sample (i.e., your sampling strategy) will have a major impact on your study. There are many different sampling methods you can choose from, but the two overarching categories are probability sampling and non-probability sampling.

Probability sampling involves using a completely random sample from the group of people you’re interested in. This is comparable to throwing the names of all potential participants into a hat, shaking it up, and picking out the “winners”. By using a completely random sample, you’ll minimise the risk of selection bias and the results of your study will be more generalisable to the entire population.

Non-probability sampling, on the other hand, doesn’t use a random sample. For example, it might involve using a convenience sample, which means you’d only interview or survey people that you have access to (perhaps your friends, family or work colleagues), rather than a truly random sample. With non-probability sampling, the results are typically not generalisable.
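The difference between the two sampling approaches can be illustrated with a short Python sketch. The population, the ages and the rule for who counts as “accessible” are all hypothetical, as are the helper names; the point is that `random.sample` gives every member an equal chance of selection, while the convenience sample only ever reaches one subgroup.

```python
import random
import statistics


def simple_random_sample(population, k, seed=None):
    """Probability sampling: every member has the same chance of selection."""
    return random.Random(seed).sample(population, k)


def convenience_sample(population, k, is_accessible):
    """Non-probability sampling: the first k members the researcher can reach."""
    return [member for member in population if is_accessible(member)][:k]


# Hypothetical population of 1,000 people, each with an ID and an age.
rng = random.Random(42)
population = [{"id": i, "age": rng.randint(18, 80)} for i in range(1000)]

# Hypothetical assumption: the researcher can only easily reach people under 30
# (e.g., classmates), so the convenience sample skews young.
random_group = simple_random_sample(population, 50, seed=1)
easy_group = convenience_sample(population, 50, lambda p: p["age"] < 30)

print("population mean age:", statistics.mean(p["age"] for p in population))
print("random sample mean age:", statistics.mean(p["age"] for p in random_group))
print("convenience sample mean age:", statistics.mean(p["age"] for p in easy_group))
```

Running this, the random sample’s mean age will usually land close to the population mean, while the convenience sample’s mean sits well below it. That gap is the selection bias that limits how generalisable non-probability samples are.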


What are data collection methods?

As the name suggests, data collection methods simply refer to the way in which you go about collecting the data for your study. Some of the most common data collection methods include:

- Interviews (which can be unstructured, semi-structured or structured)

- Focus groups and group interviews

- Surveys (online or physical surveys)

- Observations (watching and recording activities)

- Biophysical measurements (e.g., blood pressure, heart rate, etc.)

- Documents and records (e.g., financial reports, court records, etc.)

The choice of which data collection method to use depends on your overall research aims and research questions, as well as practicalities and resource constraints. For example, if your research is exploratory in nature, qualitative methods such as interviews and focus groups would likely be a good fit. Conversely, if your research aims to measure specific variables or test hypotheses, large-scale surveys that produce large volumes of numerical data would likely be a better fit.

What are data analysis methods?

Data analysis methods refer to the methods and techniques that you’ll use to make sense of your data. These can be grouped according to whether the research is qualitative (words-based) or quantitative (numbers-based).

Popular data analysis methods in qualitative research include:

- Qualitative content analysis

- Thematic analysis

- Discourse analysis

- Narrative analysis

- Interpretative phenomenological analysis (IPA)

- Visual analysis (of photographs, videos, art, etc.)

All qualitative data analysis begins with data coding, after which an analysis method is applied. In some cases, more than one analysis method is used, depending on the research aims and research questions.
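Coding itself is interpretive work carried out by the researcher, but the bookkeeping that follows is easy to illustrate. The sketch below assumes each (hypothetical) interview excerpt has already been assigned one or more codes by hand, then groups the excerpts by code and counts how often each code appears, which is one possible starting point for reviewing candidate themes. The helper names are illustrative, and many qualitative approaches do not treat frequency as a measure of importance.

```python
from collections import Counter, defaultdict

# Hypothetical interview excerpts, each already tagged by the researcher
# with one or more codes during the coding pass.
coded_segments = [
    ("I never know who to contact when the system is down.", ["unclear support", "downtime"]),
    ("The outage last week cost us a full day of work.", ["downtime"]),
    ("Nobody told us the helpdesk had moved.", ["unclear support", "poor communication"]),
]


def group_by_code(segments):
    """Collect every excerpt filed under each code, for review as candidate themes."""
    excerpts = defaultdict(list)
    for text, codes in segments:
        for code in codes:
            excerpts[code].append(text)
    return dict(excerpts)


def code_frequencies(segments):
    """Count how many excerpts were tagged with each code."""
    return Counter(code for _, codes in segments for code in codes)


grouped = group_by_code(coded_segments)
for code, count in code_frequencies(coded_segments).most_common():
    print(f"{code} ({count})")
    for excerpt in grouped[code]:
        print("   -", excerpt)
```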

Popular data analysis methods in quantitative research include:

- Descriptive statistics (e.g., means, medians, modes)

- Inferential statistics (e.g., correlation, regression, structural equation modelling)
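The descriptive statistics listed above are available in Python’s standard `statistics` module. The sketch below summarises a hypothetical set of Likert-scale survey responses (1 to 5); the `describe` helper is illustrative only.

```python
import statistics


def describe(scores):
    """Basic descriptive statistics for a list of numeric responses."""
    return {
        "n": len(scores),
        "mean": statistics.mean(scores),
        "median": statistics.median(scores),
        "mode": statistics.mode(scores),
        "stdev": statistics.stdev(scores),  # sample standard deviation
    }


# Hypothetical responses from seven survey participants.
responses = [4, 5, 3, 5, 2, 5, 4]
print(describe(responses))
```

Here the mean and median are both 4 while the mode is 5, a small reminder that different measures of central tendency can tell slightly different stories about the same data.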

How do I choose a research methodology?

As you’ve probably picked up by now, your research aims and objectives have a major influence on the research methodology. So, the starting point for developing your research methodology is to take a step back and look at the big picture of your research, before you make methodology decisions. The first question you need to ask yourself is whether your research is exploratory or confirmatory in nature.

If your research aims and objectives are primarily exploratory in nature, your research will likely be qualitative and therefore you might consider qualitative data collection methods (e.g. interviews) and analysis methods (e.g. qualitative content analysis).

Conversely, if your research aims and objectives are looking to measure or test something (i.e. they’re confirmatory), then your research will quite likely be quantitative in nature, and you might consider quantitative data collection methods (e.g. surveys) and analyses (e.g. statistical analysis).

Designing your research and working out your methodology is a large topic, which we cover extensively on the blog. For now, however, the key takeaway is that you should always start with your research aims, objectives and research questions (the golden thread). Every methodological choice you make needs to align with those three components.

Example of a research methodology chapter

In the video below, we provide a detailed walkthrough of a research methodology from an actual dissertation, as well as an overview of our free methodology template.


Sure. You’re welcome to book an initial consultation with one of our Research Coaches to discuss how we can assist – https://gradcoach.com/book/new/ .

Thanks a lot I am relieved of a heavy burden.keep up with the good work

I’m very much grateful Dr Derek. I’m planning to pursue one of the careers that really needs one to be very much eager to know. There’s a lot of research to do and everything, but since I’ve gotten this information I will use it to the best of my potential.

Thank you so much, words are not enough to explain how helpful this session has been for me!

Thanks this has thought me alot.

Very concise and helpful. Thanks a lot

Thank Derek. This is very helpful. Your step by step explanation has made it easier for me to understand different concepts. Now i can get on with my research.

I wish i had come across this sooner. So simple but yet insightful

really nice explanation thank you so much

I’m so grateful finding this site, it’s really helpful…….every term well explained and provide accurate understanding especially to student going into an in-depth research for the very first time, even though my lecturer already explained this topic to the class, I think I got the clear and efficient explanation here, much thanks to the author.

It is very helpful material

I would like to be assisted with my research topic : Literature Review and research methodologies. My topic is : what is the relationship between unemployment and economic growth?

Its really nice and good for us.

THANKS SO MUCH FOR EXPLANATION, ITS VERY CLEAR TO ME WHAT I WILL BE DOING FROM NOW .GREAT READS.

Short but sweet.Thank you

Informative article. Thanks for your detailed information.

I’m currently working on my Ph.D. thesis. Thanks a lot, Derek and Kerryn, Well-organized sequences, facilitate the readers’ following.

great article for someone who does not have any background can even understand

I am a bit confused about research design and methodology. Are they the same? If not, what are the differences and how are they related?

Thanks in advance.

concise and informative.

Thank you very much

How can we site this article is Harvard style?

Very well written piece that afforded better understanding of the concept. Thank you!

Am a new researcher trying to learn how best to write a research proposal. I find your article spot on and want to download the free template but finding difficulties. Can u kindly send it to my email, the free download entitled, “Free Download: Research Proposal Template (with Examples)”.

Thank too much

Thank you very much for your comprehensive explanation about research methodology so I like to thank you again for giving us such great things.

Good very well explained.Thanks for sharing it.

Thank u sir, it is really a good guideline.

so helpful thank you very much.

Thanks for the video it was very explanatory and detailed, easy to comprehend and follow up. please, keep it up the good work

It was very helpful, a well-written document with precise information.

How do I reference this?

MLA: Jansen, Derek, and Kerryn Warren. “What (Exactly) Is Research Methodology?” Grad Coach, June 2021, gradcoach.com/what-is-research-methodology/.

APA: Jansen, D., & Warren, K. (2021, June). What (Exactly) Is Research Methodology? Grad Coach. https://gradcoach.com/what-is-research-methodology/



Uncomplicated Reviews of Educational Research Methods

Writing a Research Report


This review covers the basic elements of a research report. This is a general guide for what you will see in journal articles or dissertations. This format assumes a mixed methods study, but you can leave out either quantitative or qualitative sections if you only used a single methodology.

This review is divided into sections for easy reference. There are five major parts of a research report:

1. Introduction
2. Review of Literature
3. Methods
4. Results
5. Discussion